Project import generated by Copybara.

GitOrigin-RevId: 1e13be30e2c6838d4a2ff768a39c414bc80534bb

12

MANIFEST.in

|

|

@@ -7,14 +7,4 @@ include MANIFEST.in

|

||||||

include README.md

|

include README.md

|

||||||

include requirements.txt

|

include requirements.txt

|

||||||

|

|

||||||

recursive-include mediapipe/modules *.tflite *.txt *.binarypb

|

recursive-include mediapipe/modules *.txt

|

||||||

exclude mediapipe/modules/face_detection/face_detection_full_range.tflite

|

|

||||||

exclude mediapipe/modules/objectron/object_detection_3d_chair_1stage.tflite

|

|

||||||

exclude mediapipe/modules/objectron/object_detection_3d_sneakers_1stage.tflite

|

|

||||||

exclude mediapipe/modules/objectron/object_detection_3d_sneakers.tflite

|

|

||||||

exclude mediapipe/modules/objectron/object_detection_3d_chair.tflite

|

|

||||||

exclude mediapipe/modules/objectron/object_detection_3d_camera.tflite

|

|

||||||

exclude mediapipe/modules/objectron/object_detection_3d_cup.tflite

|

|

||||||

exclude mediapipe/modules/objectron/object_detection_ssd_mobilenetv2_oidv4_fp16.tflite

|

|

||||||

exclude mediapipe/modules/pose_landmark/pose_landmark_lite.tflite

|

|

||||||

exclude mediapipe/modules/pose_landmark/pose_landmark_heavy.tflite

|

|

||||||

|

|

|

||||||

10

README.md

|

|

@@ -4,7 +4,7 @@ title: Home

|

||||||

nav_order: 1

|

nav_order: 1

|

||||||

---

|

---

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

--------------------------------------------------------------------------------

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

|

@@ -13,21 +13,21 @@ nav_order: 1

|

||||||

[MediaPipe](https://google.github.io/mediapipe/) offers cross-platform, customizable

|

[MediaPipe](https://google.github.io/mediapipe/) offers cross-platform, customizable

|

||||||

ML solutions for live and streaming media.

|

ML solutions for live and streaming media.

|

||||||

|

|

||||||

|

|

|

|

||||||

:------------------------------------------------------------------------------------------------------------: | :----------------------------------------------------:

|

:------------------------------------------------------------------------------------------------------------: | :----------------------------------------------------:

|

||||||

***End-to-End acceleration***: *Built-in fast ML inference and processing accelerated even on common hardware* | ***Build once, deploy anywhere***: *Unified solution works across Android, iOS, desktop/cloud, web and IoT*

|

***End-to-End acceleration***: *Built-in fast ML inference and processing accelerated even on common hardware* | ***Build once, deploy anywhere***: *Unified solution works across Android, iOS, desktop/cloud, web and IoT*

|

||||||

|

|

|

|

||||||

***Ready-to-use solutions***: *Cutting-edge ML solutions demonstrating full power of the framework* | ***Free and open source***: *Framework and solutions both under Apache 2.0, fully extensible and customizable*

|

***Ready-to-use solutions***: *Cutting-edge ML solutions demonstrating full power of the framework* | ***Free and open source***: *Framework and solutions both under Apache 2.0, fully extensible and customizable*

|

||||||

|

|

||||||

## ML solutions in MediaPipe

|

## ML solutions in MediaPipe

|

||||||

|

|

||||||

Face Detection | Face Mesh | Iris | Hands | Pose | Holistic

|

Face Detection | Face Mesh | Iris | Hands | Pose | Holistic

|

||||||

:----------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------: | :--------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------: | :------:

|

:----------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------: | :--------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------: | :------:

|

||||||

[](https://google.github.io/mediapipe/solutions/face_detection) | [](https://google.github.io/mediapipe/solutions/face_mesh) | [](https://google.github.io/mediapipe/solutions/iris) | [](https://google.github.io/mediapipe/solutions/hands) | [](https://google.github.io/mediapipe/solutions/pose) | [](https://google.github.io/mediapipe/solutions/holistic)

|

[](https://google.github.io/mediapipe/solutions/face_detection) | [](https://google.github.io/mediapipe/solutions/face_mesh) | [](https://google.github.io/mediapipe/solutions/iris) | [](https://google.github.io/mediapipe/solutions/hands) | [](https://google.github.io/mediapipe/solutions/pose) | [](https://google.github.io/mediapipe/solutions/holistic)

|

||||||

|

|

||||||

Hair Segmentation | Object Detection | Box Tracking | Instant Motion Tracking | Objectron | KNIFT

|

Hair Segmentation | Object Detection | Box Tracking | Instant Motion Tracking | Objectron | KNIFT

|

||||||

:-------------------------------------------------------------------------------------------------------------------------------------: | :----------------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------------------: | :---------------------------------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------------: | :---:

|

:-------------------------------------------------------------------------------------------------------------------------------------: | :----------------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------------------: | :---------------------------------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------------: | :---:

|

||||||

[](https://google.github.io/mediapipe/solutions/hair_segmentation) | [](https://google.github.io/mediapipe/solutions/object_detection) | [](https://google.github.io/mediapipe/solutions/box_tracking) | [](https://google.github.io/mediapipe/solutions/instant_motion_tracking) | [](https://google.github.io/mediapipe/solutions/objectron) | [](https://google.github.io/mediapipe/solutions/knift)

|

[](https://google.github.io/mediapipe/solutions/hair_segmentation) | [](https://google.github.io/mediapipe/solutions/object_detection) | [](https://google.github.io/mediapipe/solutions/box_tracking) | [](https://google.github.io/mediapipe/solutions/instant_motion_tracking) | [](https://google.github.io/mediapipe/solutions/objectron) | [](https://google.github.io/mediapipe/solutions/knift)

|

||||||

|

|

||||||

<!-- []() in the first cell is needed to preserve table formatting in GitHub Pages. -->

|

<!-- []() in the first cell is needed to preserve table formatting in GitHub Pages. -->

|

||||||

<!-- Whenever this table is updated, paste a copy to solutions/solutions.md. -->

|

<!-- Whenever this table is updated, paste a copy to solutions/solutions.md. -->

|

||||||

|

|

|

||||||

83

WORKSPACE

|

|

@@ -2,6 +2,12 @@ workspace(name = "mediapipe")

|

||||||

|

|

||||||

load("@bazel_tools//tools/build_defs/repo:http.bzl", "http_archive")

|

load("@bazel_tools//tools/build_defs/repo:http.bzl", "http_archive")

|

||||||

|

|

||||||

|

# Protobuf expects an //external:python_headers target

|

||||||

|

bind(

|

||||||

|

name = "python_headers",

|

||||||

|

actual = "@local_config_python//:python_headers",

|

||||||

|

)

|

||||||

|

|

||||||

http_archive(

|

http_archive(

|

||||||

name = "bazel_skylib",

|

name = "bazel_skylib",

|

||||||

type = "tar.gz",

|

type = "tar.gz",

|

||||||

|

|

@@ -142,12 +148,50 @@ http_archive(

|

||||||

],

|

],

|

||||||

)

|

)

|

||||||

|

|

||||||

|

load("//third_party/flatbuffers:workspace.bzl", flatbuffers = "repo")

|

||||||

|

flatbuffers()

|

||||||

|

|

||||||

http_archive(

|

http_archive(

|

||||||

name = "com_google_audio_tools",

|

name = "com_google_audio_tools",

|

||||||

strip_prefix = "multichannel-audio-tools-master",

|

strip_prefix = "multichannel-audio-tools-master",

|

||||||

urls = ["https://github.com/google/multichannel-audio-tools/archive/master.zip"],

|

urls = ["https://github.com/google/multichannel-audio-tools/archive/master.zip"],

|

||||||

)

|

)

|

||||||

|

|

||||||

|

# sentencepiece

|

||||||

|

http_archive(

|

||||||

|

name = "com_google_sentencepiece",

|

||||||

|

strip_prefix = "sentencepiece-1.0.0",

|

||||||

|

sha256 = "c05901f30a1d0ed64cbcf40eba08e48894e1b0e985777217b7c9036cac631346",

|

||||||

|

urls = [

|

||||||

|

"https://github.com/google/sentencepiece/archive/1.0.0.zip",

|

||||||

|

],

|

||||||

|

repo_mapping = {"@com_google_glog" : "@com_github_glog_glog"},

|

||||||

|

)

|

||||||

|

|

||||||

|

http_archive(

|

||||||

|

name = "org_tensorflow_text",

|

||||||

|

sha256 = "f64647276f7288d1b1fe4c89581d51404d0ce4ae97f2bcc4c19bd667549adca8",

|

||||||

|

strip_prefix = "text-2.2.0",

|

||||||

|

urls = [

|

||||||

|

"https://github.com/tensorflow/text/archive/v2.2.0.zip",

|

||||||

|

],

|

||||||

|

patches = [

|

||||||

|

"//third_party:tensorflow_text_remove_tf_deps.diff",

|

||||||

|

"//third_party:tensorflow_text_a0f49e63.diff",

|

||||||

|

],

|

||||||

|

patch_args = ["-p1"],

|

||||||

|

repo_mapping = {"@com_google_re2": "@com_googlesource_code_re2"},

|

||||||

|

)

|

||||||

|

|

||||||

|

http_archive(

|

||||||

|

name = "com_googlesource_code_re2",

|

||||||

|

sha256 = "e06b718c129f4019d6e7aa8b7631bee38d3d450dd980246bfaf493eb7db67868",

|

||||||

|

strip_prefix = "re2-fe4a310131c37f9a7e7f7816fa6ce2a8b27d65a8",

|

||||||

|

urls = [

|

||||||

|

"https://github.com/google/re2/archive/fe4a310131c37f9a7e7f7816fa6ce2a8b27d65a8.tar.gz",

|

||||||

|

],

|

||||||

|

)

|

||||||

|

|

||||||

# 2020-07-09

|

# 2020-07-09

|

||||||

http_archive(

|

http_archive(

|

||||||

name = "pybind11_bazel",

|

name = "pybind11_bazel",

|

||||||

|

|

@@ -167,6 +211,15 @@ http_archive(

|

||||||

build_file = "@pybind11_bazel//:pybind11.BUILD",

|

build_file = "@pybind11_bazel//:pybind11.BUILD",

|

||||||

)

|

)

|

||||||

|

|

||||||

|

http_archive(

|

||||||

|

name = "pybind11_protobuf",

|

||||||

|

sha256 = "baa1f53568283630a5055c85f0898b8810f7a6431bd01bbaedd32b4c1defbcb1",

|

||||||

|

strip_prefix = "pybind11_protobuf-3594106f2df3d725e65015ffb4c7886d6eeee683",

|

||||||

|

urls = [

|

||||||

|

"https://github.com/pybind/pybind11_protobuf/archive/3594106f2df3d725e65015ffb4c7886d6eeee683.tar.gz",

|

||||||

|

],

|

||||||

|

)

|

||||||

|

|

||||||

# Point to the commit that deprecates the usage of Eigen::MappedSparseMatrix.

|

# Point to the commit that deprecates the usage of Eigen::MappedSparseMatrix.

|

||||||

http_archive(

|

http_archive(

|

||||||

name = "ceres_solver",

|

name = "ceres_solver",

|

||||||

|

|

@@ -377,10 +430,29 @@ http_archive(

|

||||||

],

|

],

|

||||||

)

|

)

|

||||||

|

|

||||||

# Tensorflow repo should always go after the other external dependencies.

|

# Load Zlib before initializing TensorFlow to guarantee that the target

|

||||||

# 2022-02-15

|

# @zlib//:mini_zlib is available

|

||||||

_TENSORFLOW_GIT_COMMIT = "a3419acc751dfc19caf4d34a1594e1f76810ec58"

|

http_archive(

|

||||||

_TENSORFLOW_SHA256 = "b95b2a83632d4055742ae1a2dcc96b45da6c12a339462dbc76c8bca505308e3a"

|

name = "zlib",

|

||||||

|

build_file = "//third_party:zlib.BUILD",

|

||||||

|

sha256 = "c3e5e9fdd5004dcb542feda5ee4f0ff0744628baf8ed2dd5d66f8ca1197cb1a1",

|

||||||

|

strip_prefix = "zlib-1.2.11",

|

||||||

|

urls = [

|

||||||

|

"http://mirror.bazel.build/zlib.net/fossils/zlib-1.2.11.tar.gz",

|

||||||

|

"http://zlib.net/fossils/zlib-1.2.11.tar.gz", # 2017-01-15

|

||||||

|

],

|

||||||

|

patches = [

|

||||||

|

"@//third_party:zlib.diff",

|

||||||

|

],

|

||||||

|

patch_args = [

|

||||||

|

"-p1",

|

||||||

|

],

|

||||||

|

)

|

||||||

|

|

||||||

|

# TensorFlow repo should always go after the other external dependencies.

|

||||||

|

# TF on 2022-08-10.

|

||||||

|

_TENSORFLOW_GIT_COMMIT = "af1d5bc4fbb66d9e6cc1cf89503014a99233583b"

|

||||||

|

_TENSORFLOW_SHA256 = "f85a5443264fc58a12d136ca6a30774b5bc25ceaf7d114d97f252351b3c3a2cb"

|

||||||

http_archive(

|

http_archive(

|

||||||

name = "org_tensorflow",

|

name = "org_tensorflow",

|

||||||

urls = [

|

urls = [

|

||||||

|

|

@@ -417,3 +489,6 @@ libedgetpu_dependencies()

|

||||||

|

|

||||||

load("@coral_crosstool//:configure.bzl", "cc_crosstool")

|

load("@coral_crosstool//:configure.bzl", "cc_crosstool")

|

||||||

cc_crosstool(name = "crosstool")

|

cc_crosstool(name = "crosstool")

|

||||||

|

|

||||||

|

load("//third_party:external_files.bzl", "external_files")

|

||||||

|

external_files()

|

||||||

|

|

|

||||||

|

|

@@ -20,7 +20,7 @@ aux_links:

|

||||||

- "//github.com/google/mediapipe"

|

- "//github.com/google/mediapipe"

|

||||||

|

|

||||||

# Footer content appears at the bottom of every page's main content

|

# Footer content appears at the bottom of every page's main content

|

||||||

footer_content: "© 2020 GOOGLE LLC | <a href=\"https://policies.google.com/privacy\">PRIVACY POLICY</a> | <a href=\"https://policies.google.com/terms\">TERMS OF SERVICE</a>"

|

footer_content: "© GOOGLE LLC | <a href=\"https://policies.google.com/privacy\">PRIVACY POLICY</a> | <a href=\"https://policies.google.com/terms\">TERMS OF SERVICE</a>"

|

||||||

|

|

||||||

# Color scheme currently only supports "dark", "light"/nil (default), or a custom scheme that you define

|

# Color scheme currently only supports "dark", "light"/nil (default), or a custom scheme that you define

|

||||||

color_scheme: mediapipe

|

color_scheme: mediapipe

|

||||||

|

|

|

||||||

|

|

@@ -133,7 +133,7 @@ write outputs. After Close returns, the calculator is destroyed.

|

||||||

Calculators with no inputs are referred to as sources. A source calculator

|

Calculators with no inputs are referred to as sources. A source calculator

|

||||||

continues to have `Process()` called as long as it returns an `Ok` status. A

|

continues to have `Process()` called as long as it returns an `Ok` status. A

|

||||||

source calculator indicates that it is exhausted by returning a stop status

|

source calculator indicates that it is exhausted by returning a stop status

|

||||||

(i.e. MediaPipe::tool::StatusStop).

|

(i.e. [`mediaPipe::tool::StatusStop()`](https://github.com/google/mediapipe/tree/master/mediapipe/framework/tool/status_util.cc).).

|

||||||

|

|

||||||

## Identifying inputs and outputs

|

## Identifying inputs and outputs

|

||||||

|

|

||||||

|

|
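Note on the hunk above: the source-calculator contract it documents (keep calling `Process()` while it returns `Ok`, stop once it returns a stop status) can be modelled in a few lines of plain Python. This is only a sketch of the control flow, not MediaPipe code; `Status`, `CountingSource` and `run_source` are hypothetical names invented for illustration.

```python
from enum import Enum, auto


class Status(Enum):
    """Stand-in for the two outcomes discussed in the hunk (hypothetical)."""
    OK = auto()
    STOP = auto()


class CountingSource:
    """Hypothetical source: emits 0..limit-1, then reports exhaustion."""

    def __init__(self, limit):
        self.limit = limit
        self.next_value = 0
        self.emitted = []

    def process(self):
        # Nothing left to emit: signal exhaustion with a stop status.
        if self.next_value >= self.limit:
            return Status.STOP
        self.emitted.append(self.next_value)
        self.next_value += 1
        return Status.OK


def run_source(source):
    """Call process() repeatedly until it returns something other than OK.

    Returns the total number of process() calls, including the final one
    that returned the stop status.
    """
    calls = 0
    while True:
        calls += 1
        if source.process() is not Status.OK:
            return calls
```

With `CountingSource(3)`, the loop makes three calls that emit a value and a fourth that returns the stop status and ends the run.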

@@ -459,6 +459,6 @@ node {

|

||||||

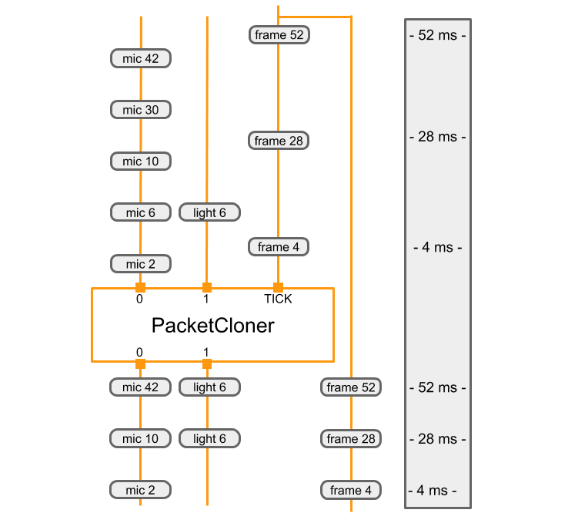

The diagram below shows how the `PacketClonerCalculator` defines its output

|

The diagram below shows how the `PacketClonerCalculator` defines its output

|

||||||

packets (bottom) based on its series of input packets (top).

|

packets (bottom) based on its series of input packets (top).

|

||||||

|

|

||||||

|

|

|

|

||||||

:--------------------------------------------------------------------------: |

|

:--------------------------------------------------------------------------: |

|

||||||

*Each time it receives a packet on its TICK input stream, the PacketClonerCalculator outputs the most recent packet from each of its input streams. The sequence of output packets (bottom) is determined by the sequence of input packets (top) and their timestamps. The timestamps are shown along the right side of the diagram.* |

|

*Each time it receives a packet on its TICK input stream, the PacketClonerCalculator outputs the most recent packet from each of its input streams. The sequence of output packets (bottom) is determined by the sequence of input packets (top) and their timestamps. The timestamps are shown along the right side of the diagram.* |

|

||||||

|

|

|

||||||

|

|
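Note on the caption in the hunk above: the behaviour it describes (on every TICK packet, output the most recent packet seen on each input stream) can be sketched as a small plain-Python model. This is an illustrative simplification, not the calculator's implementation: `clone_on_tick` and the event tuples are hypothetical, the sketch emits whatever has been seen so far, and it does not model any calculator options.

```python
def clone_on_tick(events):
    """Model of the "latest packet per stream on each tick" behaviour.

    events: list of (timestamp, stream_name, payload) tuples, already
    sorted by timestamp. The stream named "TICK" triggers an output.

    Returns a list of (timestamp, {stream_name: latest_payload}) entries,
    one per TICK event.
    """
    latest = {}   # most recent payload seen on each non-TICK stream
    outputs = []
    for timestamp, stream, payload in events:
        if stream == "TICK":
            # Snapshot the current state so later updates do not alter it.
            outputs.append((timestamp, dict(latest)))
        else:
            latest[stream] = payload
    return outputs
```

For example, feeding packets `a1` and `b1`, then a tick, then `a2`, then another tick yields two outputs: the first pairs `a1` with `b1`, the second pairs `a2` with the unchanged `b1`.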

@@ -149,7 +149,7 @@ When possible, these calculators use platform-specific functionality to share da

|

||||||

|

|

||||||

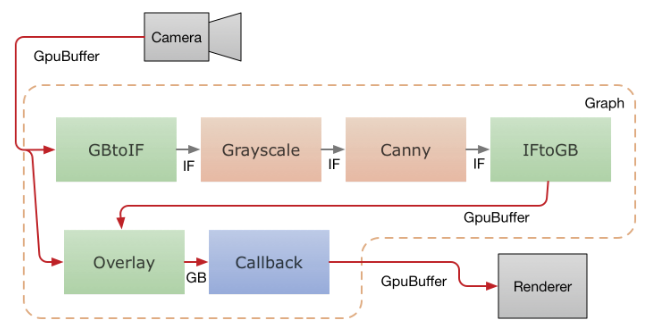

The below diagram shows the data flow in a mobile application that captures video from the camera, runs it through a MediaPipe graph, and renders the output on the screen in real time. The dashed line indicates which parts are inside the MediaPipe graph proper. This application runs a Canny edge-detection filter on the CPU using OpenCV, and overlays it on top of the original video using the GPU.

|

The below diagram shows the data flow in a mobile application that captures video from the camera, runs it through a MediaPipe graph, and renders the output on the screen in real time. The dashed line indicates which parts are inside the MediaPipe graph proper. This application runs a Canny edge-detection filter on the CPU using OpenCV, and overlays it on top of the original video using the GPU.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

Video frames from the camera are fed into the graph as `GpuBuffer` packets. The

|

Video frames from the camera are fed into the graph as `GpuBuffer` packets. The

|

||||||

input stream is accessed by two calculators in parallel.

|

input stream is accessed by two calculators in parallel.

|

||||||

|

|

|

||||||

|

|

@@ -159,7 +159,7 @@ Please use the `CalculatorGraphTest.Cycle` unit test in

|

||||||

below is the cyclic graph in the test. The `sum` output of the adder is the sum

|

below is the cyclic graph in the test. The `sum` output of the adder is the sum

|

||||||

of the integers generated by the integer source calculator.

|

of the integers generated by the integer source calculator.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

This simple graph illustrates all the issues in supporting cyclic graphs.

|

This simple graph illustrates all the issues in supporting cyclic graphs.

|

||||||

|

|

||||||

|

|

|

||||||

|

|

@@ -102,7 +102,7 @@ each project.

|

||||||

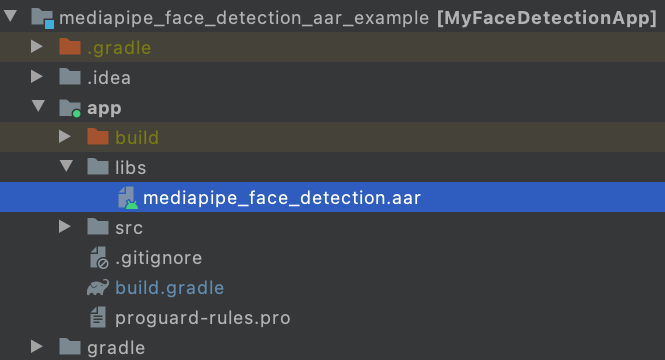

/path/to/your/app/libs/

|

/path/to/your/app/libs/

|

||||||

```

|

```

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

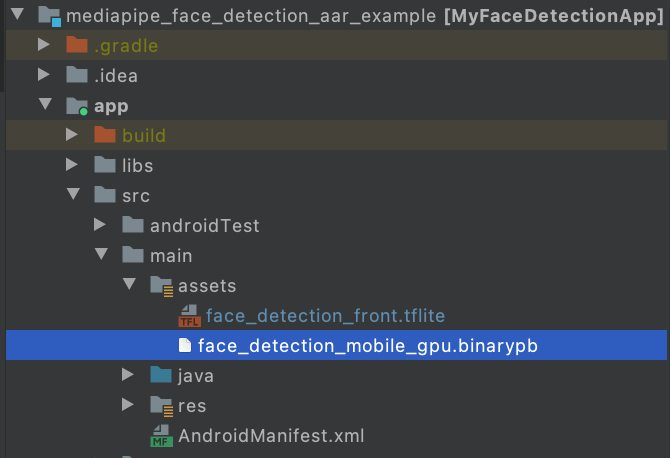

3. Make app/src/main/assets and copy assets (graph, model, and etc) into

|

3. Make app/src/main/assets and copy assets (graph, model, and etc) into

|

||||||

app/src/main/assets.

|

app/src/main/assets.

|

||||||

|

|

@@ -120,7 +120,7 @@ each project.

|

||||||

cp mediapipe/modules/face_detection/face_detection_short_range.tflite /path/to/your/app/src/main/assets/

|

cp mediapipe/modules/face_detection/face_detection_short_range.tflite /path/to/your/app/src/main/assets/

|

||||||

```

|

```

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

4. Modify app/build.gradle to add MediaPipe dependencies and MediaPipe AAR.

|

4. Modify app/build.gradle to add MediaPipe dependencies and MediaPipe AAR.

|

||||||

|

|

||||||

|

|

|

||||||

|

|

@@ -55,18 +55,18 @@ To build these apps:

|

||||||

|

|

||||||

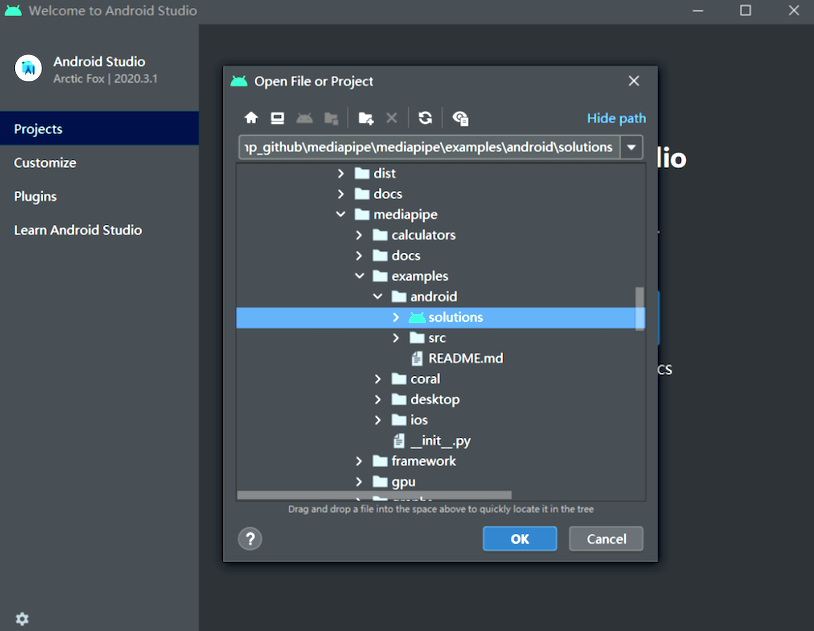

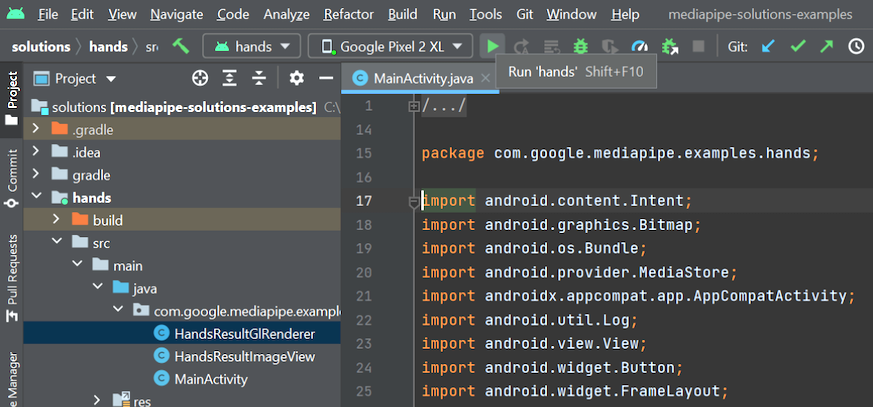

2. Import mediapipe/examples/android/solutions directory into Android Studio.

|

2. Import mediapipe/examples/android/solutions directory into Android Studio.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

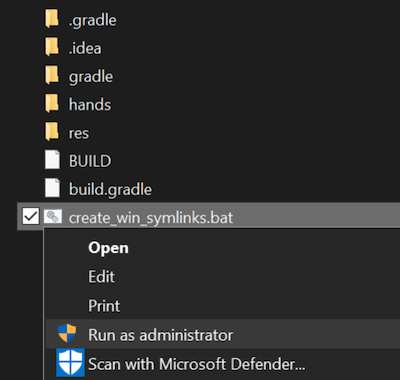

3. For Windows users, run `create_win_symlinks.bat` as administrator to create

|

3. For Windows users, run `create_win_symlinks.bat` as administrator to create

|

||||||

res directory symlinks.

|

res directory symlinks.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

4. Select "File" -> "Sync Project with Gradle Files" to sync project.

|

4. Select "File" -> "Sync Project with Gradle Files" to sync project.

|

||||||

|

|

||||||

5. Run solution example app in Android Studio.

|

5. Run solution example app in Android Studio.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

6. (Optional) Run solutions on CPU.

|

6. (Optional) Run solutions on CPU.

|

||||||

|

|

||||||

|

|

|

||||||

|

|

@@ -27,7 +27,7 @@ graph on Android.

|

||||||

A simple camera app for real-time Sobel edge detection applied to a live video

|

A simple camera app for real-time Sobel edge detection applied to a live video

|

||||||

stream on an Android device.

|

stream on an Android device.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

## Setup

|

## Setup

|

||||||

|

|

||||||

|

|

@@ -69,7 +69,7 @@ node: {

|

||||||

|

|

||||||

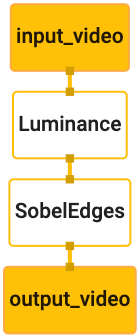

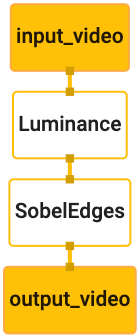

A visualization of the graph is shown below:

|

A visualization of the graph is shown below:

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

This graph has a single input stream named `input_video` for all incoming frames

|

This graph has a single input stream named `input_video` for all incoming frames

|

||||||

that will be provided by your device's camera.

|

that will be provided by your device's camera.

|

||||||

|

|

@@ -260,7 +260,7 @@ adb install bazel-bin/$APPLICATION_PATH/helloworld.apk

|

||||||

Open the application on your device. It should display a screen with the text

|

Open the application on your device. It should display a screen with the text

|

||||||

`Hello World!`.

|

`Hello World!`.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

## Using the camera via `CameraX`

|

## Using the camera via `CameraX`

|

||||||

|

|

||||||

|

|

@@ -377,7 +377,7 @@ Add the following line in the `$APPLICATION_PATH/res/values/strings.xml` file:

|

||||||

When the user doesn't grant camera permission, the screen will now look like

|

When the user doesn't grant camera permission, the screen will now look like

|

||||||

this:

|

this:

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

Now, we will add the [`SurfaceTexture`] and [`SurfaceView`] objects to

|

Now, we will add the [`SurfaceTexture`] and [`SurfaceView`] objects to

|

||||||

`MainActivity`:

|

`MainActivity`:

|

||||||

|

|

@@ -753,7 +753,7 @@ And that's it! You should now be able to successfully build and run the

|

||||||

application on the device and see Sobel edge detection running on a live camera

|

application on the device and see Sobel edge detection running on a live camera

|

||||||

feed! Congrats!

|

feed! Congrats!

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

If you ran into any issues, please see the full code of the tutorial

|

If you ran into any issues, please see the full code of the tutorial

|

||||||

[here](https://github.com/google/mediapipe/tree/master/mediapipe/examples/android/src/java/com/google/mediapipe/apps/basic).

|

[here](https://github.com/google/mediapipe/tree/master/mediapipe/examples/android/src/java/com/google/mediapipe/apps/basic).

|

||||||

|

|

|

||||||

|

|

@@ -85,7 +85,7 @@ nav_order: 1

|

||||||

This graph consists of 1 graph input stream (`in`) and 1 graph output stream

|

This graph consists of 1 graph input stream (`in`) and 1 graph output stream

|

||||||

(`out`), and 2 [`PassThroughCalculator`]s connected serially.

|

(`out`), and 2 [`PassThroughCalculator`]s connected serially.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

4. Before running the graph, an `OutputStreamPoller` object is connected to the

|

4. Before running the graph, an `OutputStreamPoller` object is connected to the

|

||||||

output stream in order to later retrieve the graph output, and a graph run

|

output stream in order to later retrieve the graph output, and a graph run

|

||||||

|

|

|

||||||

|

|

@@ -27,7 +27,7 @@ on iOS.

|

||||||

A simple camera app for real-time Sobel edge detection applied to a live video

|

A simple camera app for real-time Sobel edge detection applied to a live video

|

||||||

stream on an iOS device.

|

stream on an iOS device.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

## Setup

|

## Setup

|

||||||

|

|

||||||

|

|

@@ -67,7 +67,7 @@ node: {

|

||||||

|

|

||||||

A visualization of the graph is shown below:

|

A visualization of the graph is shown below:

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

This graph has a single input stream named `input_video` for all incoming frames

|

This graph has a single input stream named `input_video` for all incoming frames

|

||||||

that will be provided by your device's camera.

|

that will be provided by your device's camera.

|

||||||

|

|

@@ -580,7 +580,7 @@ Update the interface definition of `ViewController` with `MPPGraphDelegate`:

|

||||||

And that is all! Build and run the app on your iOS device. You should see the

|

And that is all! Build and run the app on your iOS device. You should see the

|

||||||

results of running the edge detection graph on a live video feed. Congrats!

|

results of running the edge detection graph on a live video feed. Congrats!

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

Please note that the iOS examples now use a [common] template app. The code in

|

Please note that the iOS examples now use a [common] template app. The code in

|

||||||

this tutorial is used in the [common] template app. The [helloworld] app has the

|

this tutorial is used in the [common] template app. The [helloworld] app has the

|

||||||

|

|

|

||||||

|

|

@@ -113,9 +113,8 @@ Nvidia Jetson and Raspberry Pi, please read

|

||||||

|

|

||||||

Download the latest protoc win64 zip from

|

Download the latest protoc win64 zip from

|

||||||

[the Protobuf GitHub repo](https://github.com/protocolbuffers/protobuf/releases),

|

[the Protobuf GitHub repo](https://github.com/protocolbuffers/protobuf/releases),

|

||||||

unzip the file, and copy the protoc.exe executable to a preferred

|

unzip the file, and copy the protoc.exe executable to a preferred location.

|

||||||

location. Please ensure that location is added into the Path environment

|

Please ensure that location is added into the Path environment variable.

|

||||||

variable.

|

|

||||||

|

|

||||||

3. Activate a Python virtual environment.

|

3. Activate a Python virtual environment.

|

||||||

|

|

||||||

|

|

@@ -131,16 +130,14 @@ Nvidia Jetson and Raspberry Pi, please read

|

||||||

(mp_env)mediapipe$ pip3 install -r requirements.txt

|

(mp_env)mediapipe$ pip3 install -r requirements.txt

|

||||||

```

|

```

|

||||||

|

|

||||||

6. Generate and install MediaPipe package.

|

6. Build and install MediaPipe package.

|

||||||

|

|

||||||

```bash

|

```bash

|

||||||

(mp_env)mediapipe$ python3 setup.py gen_protos

|

|

||||||

(mp_env)mediapipe$ python3 setup.py install --link-opencv

|

(mp_env)mediapipe$ python3 setup.py install --link-opencv

|

||||||

```

|

```

|

||||||

|

|

||||||

or

|

or

|

||||||

|

|

||||||

```bash

|

```bash

|

||||||

(mp_env)mediapipe$ python3 setup.py gen_protos

|

|

||||||

(mp_env)mediapipe$ python3 setup.py bdist_wheel

|

(mp_env)mediapipe$ python3 setup.py bdist_wheel

|

||||||

```

|

```

|

||||||

|

|

|

||||||

|
