diff --git a/MANIFEST.in b/MANIFEST.in

index 14afffebe..37900d0ea 100644

--- a/MANIFEST.in

+++ b/MANIFEST.in

@@ -7,14 +7,4 @@ include MANIFEST.in

include README.md

include requirements.txt

-recursive-include mediapipe/modules *.tflite *.txt *.binarypb

-exclude mediapipe/modules/face_detection/face_detection_full_range.tflite

-exclude mediapipe/modules/objectron/object_detection_3d_chair_1stage.tflite

-exclude mediapipe/modules/objectron/object_detection_3d_sneakers_1stage.tflite

-exclude mediapipe/modules/objectron/object_detection_3d_sneakers.tflite

-exclude mediapipe/modules/objectron/object_detection_3d_chair.tflite

-exclude mediapipe/modules/objectron/object_detection_3d_camera.tflite

-exclude mediapipe/modules/objectron/object_detection_3d_cup.tflite

-exclude mediapipe/modules/objectron/object_detection_ssd_mobilenetv2_oidv4_fp16.tflite

-exclude mediapipe/modules/pose_landmark/pose_landmark_lite.tflite

-exclude mediapipe/modules/pose_landmark/pose_landmark_heavy.tflite

+recursive-include mediapipe/modules *.txt

diff --git a/README.md b/README.md

index 9c81095c5..e10952bcd 100644

--- a/README.md

+++ b/README.md

@@ -4,7 +4,7 @@ title: Home

nav_order: 1

---

-

+

--------------------------------------------------------------------------------

@@ -13,21 +13,21 @@ nav_order: 1

[MediaPipe](https://google.github.io/mediapipe/) offers cross-platform, customizable

ML solutions for live and streaming media.

- |

+ |

:------------------------------------------------------------------------------------------------------------: | :----------------------------------------------------:

***End-to-End acceleration***: *Built-in fast ML inference and processing accelerated even on common hardware* | ***Build once, deploy anywhere***: *Unified solution works across Android, iOS, desktop/cloud, web and IoT*

- |

+ |

***Ready-to-use solutions***: *Cutting-edge ML solutions demonstrating full power of the framework* | ***Free and open source***: *Framework and solutions both under Apache 2.0, fully extensible and customizable*

## ML solutions in MediaPipe

Face Detection | Face Mesh | Iris | Hands | Pose | Holistic

:----------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------: | :--------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------: | :------:

-[](https://google.github.io/mediapipe/solutions/face_detection) | [](https://google.github.io/mediapipe/solutions/face_mesh) | [](https://google.github.io/mediapipe/solutions/iris) | [](https://google.github.io/mediapipe/solutions/hands) | [](https://google.github.io/mediapipe/solutions/pose) | [](https://google.github.io/mediapipe/solutions/holistic)

+[](https://google.github.io/mediapipe/solutions/face_detection) | [](https://google.github.io/mediapipe/solutions/face_mesh) | [](https://google.github.io/mediapipe/solutions/iris) | [](https://google.github.io/mediapipe/solutions/hands) | [](https://google.github.io/mediapipe/solutions/pose) | [](https://google.github.io/mediapipe/solutions/holistic)

Hair Segmentation | Object Detection | Box Tracking | Instant Motion Tracking | Objectron | KNIFT

:-------------------------------------------------------------------------------------------------------------------------------------: | :----------------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------------------: | :---------------------------------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------------: | :---:

-[](https://google.github.io/mediapipe/solutions/hair_segmentation) | [](https://google.github.io/mediapipe/solutions/object_detection) | [](https://google.github.io/mediapipe/solutions/box_tracking) | [](https://google.github.io/mediapipe/solutions/instant_motion_tracking) | [](https://google.github.io/mediapipe/solutions/objectron) | [](https://google.github.io/mediapipe/solutions/knift)

+[](https://google.github.io/mediapipe/solutions/hair_segmentation) | [](https://google.github.io/mediapipe/solutions/object_detection) | [](https://google.github.io/mediapipe/solutions/box_tracking) | [](https://google.github.io/mediapipe/solutions/instant_motion_tracking) | [](https://google.github.io/mediapipe/solutions/objectron) | [](https://google.github.io/mediapipe/solutions/knift)

diff --git a/WORKSPACE b/WORKSPACE

index 7a75537db..d3cc40fbe 100644

--- a/WORKSPACE

+++ b/WORKSPACE

@@ -2,6 +2,12 @@ workspace(name = "mediapipe")

load("@bazel_tools//tools/build_defs/repo:http.bzl", "http_archive")

+# Protobuf expects an //external:python_headers target

+bind(

+ name = "python_headers",

+ actual = "@local_config_python//:python_headers",

+)

+

http_archive(

name = "bazel_skylib",

type = "tar.gz",

@@ -142,12 +148,50 @@ http_archive(

],

)

+load("//third_party/flatbuffers:workspace.bzl", flatbuffers = "repo")

+flatbuffers()

+

http_archive(

name = "com_google_audio_tools",

strip_prefix = "multichannel-audio-tools-master",

urls = ["https://github.com/google/multichannel-audio-tools/archive/master.zip"],

)

+# sentencepiece

+http_archive(

+ name = "com_google_sentencepiece",

+ strip_prefix = "sentencepiece-1.0.0",

+ sha256 = "c05901f30a1d0ed64cbcf40eba08e48894e1b0e985777217b7c9036cac631346",

+ urls = [

+ "https://github.com/google/sentencepiece/archive/1.0.0.zip",

+ ],

+ repo_mapping = {"@com_google_glog" : "@com_github_glog_glog"},

+)

+

+http_archive(

+ name = "org_tensorflow_text",

+ sha256 = "f64647276f7288d1b1fe4c89581d51404d0ce4ae97f2bcc4c19bd667549adca8",

+ strip_prefix = "text-2.2.0",

+ urls = [

+ "https://github.com/tensorflow/text/archive/v2.2.0.zip",

+ ],

+ patches = [

+ "//third_party:tensorflow_text_remove_tf_deps.diff",

+ "//third_party:tensorflow_text_a0f49e63.diff",

+ ],

+ patch_args = ["-p1"],

+ repo_mapping = {"@com_google_re2": "@com_googlesource_code_re2"},

+)

+

+http_archive(

+ name = "com_googlesource_code_re2",

+ sha256 = "e06b718c129f4019d6e7aa8b7631bee38d3d450dd980246bfaf493eb7db67868",

+ strip_prefix = "re2-fe4a310131c37f9a7e7f7816fa6ce2a8b27d65a8",

+ urls = [

+ "https://github.com/google/re2/archive/fe4a310131c37f9a7e7f7816fa6ce2a8b27d65a8.tar.gz",

+ ],

+)

+

# 2020-07-09

http_archive(

name = "pybind11_bazel",

@@ -167,6 +211,15 @@ http_archive(

build_file = "@pybind11_bazel//:pybind11.BUILD",

)

+http_archive(

+ name = "pybind11_protobuf",

+ sha256 = "baa1f53568283630a5055c85f0898b8810f7a6431bd01bbaedd32b4c1defbcb1",

+ strip_prefix = "pybind11_protobuf-3594106f2df3d725e65015ffb4c7886d6eeee683",

+ urls = [

+ "https://github.com/pybind/pybind11_protobuf/archive/3594106f2df3d725e65015ffb4c7886d6eeee683.tar.gz",

+ ],

+)

+

# Point to the commit that deprecates the usage of Eigen::MappedSparseMatrix.

http_archive(

name = "ceres_solver",

@@ -377,10 +430,29 @@ http_archive(

],

)

-# Tensorflow repo should always go after the other external dependencies.

-# 2022-02-15

-_TENSORFLOW_GIT_COMMIT = "a3419acc751dfc19caf4d34a1594e1f76810ec58"

-_TENSORFLOW_SHA256 = "b95b2a83632d4055742ae1a2dcc96b45da6c12a339462dbc76c8bca505308e3a"

+# Load Zlib before initializing TensorFlow to guarantee that the target

+# @zlib//:mini_zlib is available

+http_archive(

+ name = "zlib",

+ build_file = "//third_party:zlib.BUILD",

+ sha256 = "c3e5e9fdd5004dcb542feda5ee4f0ff0744628baf8ed2dd5d66f8ca1197cb1a1",

+ strip_prefix = "zlib-1.2.11",

+ urls = [

+ "http://mirror.bazel.build/zlib.net/fossils/zlib-1.2.11.tar.gz",

+ "http://zlib.net/fossils/zlib-1.2.11.tar.gz", # 2017-01-15

+ ],

+ patches = [

+ "@//third_party:zlib.diff",

+ ],

+ patch_args = [

+ "-p1",

+ ],

+)

+

+# TensorFlow repo should always go after the other external dependencies.

+# TF on 2022-08-10.

+_TENSORFLOW_GIT_COMMIT = "af1d5bc4fbb66d9e6cc1cf89503014a99233583b"

+_TENSORFLOW_SHA256 = "f85a5443264fc58a12d136ca6a30774b5bc25ceaf7d114d97f252351b3c3a2cb"

http_archive(

name = "org_tensorflow",

urls = [

@@ -417,3 +489,6 @@ libedgetpu_dependencies()

load("@coral_crosstool//:configure.bzl", "cc_crosstool")

cc_crosstool(name = "crosstool")

+

+load("//third_party:external_files.bzl", "external_files")

+external_files()

diff --git a/docs/_config.yml b/docs/_config.yml

index a48c21d6d..35d61eff6 100644

--- a/docs/_config.yml

+++ b/docs/_config.yml

@@ -20,7 +20,7 @@ aux_links:

- "//github.com/google/mediapipe"

# Footer content appears at the bottom of every page's main content

-footer_content: "© 2020 GOOGLE LLC | PRIVACY POLICY | TERMS OF SERVICE"

+footer_content: "© GOOGLE LLC | PRIVACY POLICY | TERMS OF SERVICE"

# Color scheme currently only supports "dark", "light"/nil (default), or a custom scheme that you define

color_scheme: mediapipe

diff --git a/docs/framework_concepts/calculators.md b/docs/framework_concepts/calculators.md

index 9548fa461..614abbbfa 100644

--- a/docs/framework_concepts/calculators.md

+++ b/docs/framework_concepts/calculators.md

@@ -133,7 +133,7 @@ write outputs. After Close returns, the calculator is destroyed.

Calculators with no inputs are referred to as sources. A source calculator

continues to have `Process()` called as long as it returns an `Ok` status. A

source calculator indicates that it is exhausted by returning a stop status

-(i.e. MediaPipe::tool::StatusStop).

+(i.e. [`mediapipe::tool::StatusStop()`](https://github.com/google/mediapipe/tree/master/mediapipe/framework/tool/status_util.cc)).

## Identifying inputs and outputs

@@ -459,6 +459,6 @@ node {

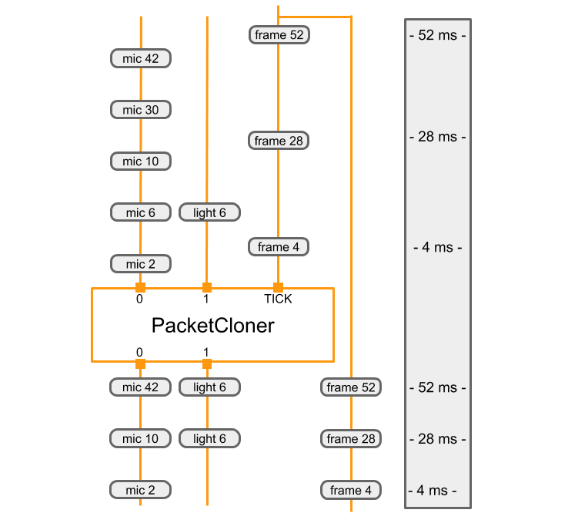

The diagram below shows how the `PacketClonerCalculator` defines its output

packets (bottom) based on its series of input packets (top).

- |

+ |

:--------------------------------------------------------------------------: |

*Each time it receives a packet on its TICK input stream, the PacketClonerCalculator outputs the most recent packet from each of its input streams. The sequence of output packets (bottom) is determined by the sequence of input packets (top) and their timestamps. The timestamps are shown along the right side of the diagram.* |

diff --git a/docs/framework_concepts/gpu.md b/docs/framework_concepts/gpu.md

index 8f9df6067..b089dd6f8 100644

--- a/docs/framework_concepts/gpu.md

+++ b/docs/framework_concepts/gpu.md

@@ -149,7 +149,7 @@ When possible, these calculators use platform-specific functionality to share da

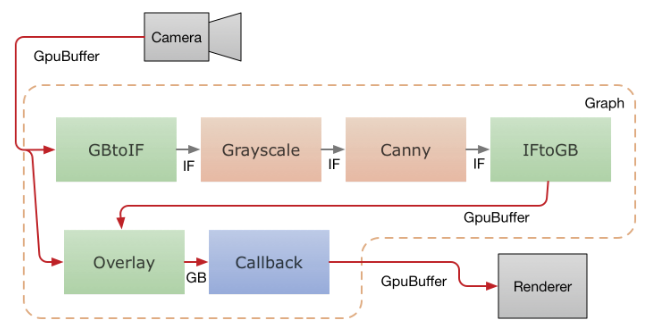

The below diagram shows the data flow in a mobile application that captures video from the camera, runs it through a MediaPipe graph, and renders the output on the screen in real time. The dashed line indicates which parts are inside the MediaPipe graph proper. This application runs a Canny edge-detection filter on the CPU using OpenCV, and overlays it on top of the original video using the GPU.

-

+

Video frames from the camera are fed into the graph as `GpuBuffer` packets. The

input stream is accessed by two calculators in parallel.

diff --git a/docs/framework_concepts/graphs.md b/docs/framework_concepts/graphs.md

index d7d972be5..f951b506d 100644

--- a/docs/framework_concepts/graphs.md

+++ b/docs/framework_concepts/graphs.md

@@ -159,7 +159,7 @@ Please use the `CalculatorGraphTest.Cycle` unit test in

below is the cyclic graph in the test. The `sum` output of the adder is the sum

of the integers generated by the integer source calculator.

-

+

This simple graph illustrates all the issues in supporting cyclic graphs.

diff --git a/docs/getting_started/android_archive_library.md b/docs/getting_started/android_archive_library.md

index a5752c6d5..5cef2b516 100644

--- a/docs/getting_started/android_archive_library.md

+++ b/docs/getting_started/android_archive_library.md

@@ -102,7 +102,7 @@ each project.

/path/to/your/app/libs/

```

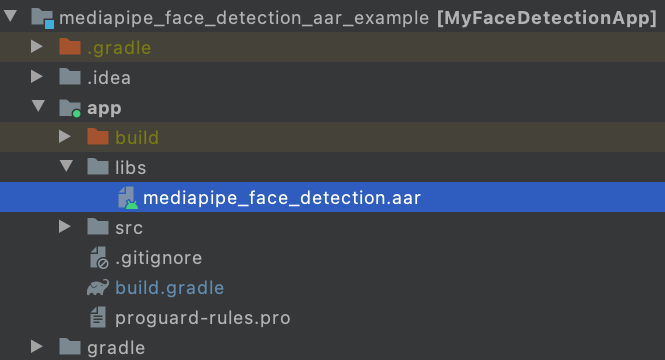

-

+

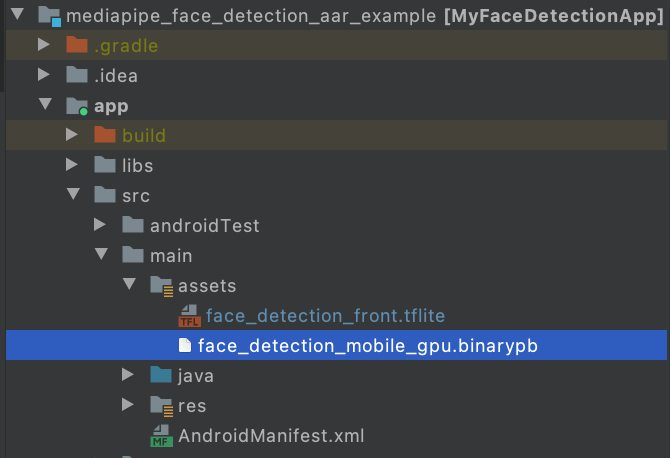

3. Make app/src/main/assets and copy assets (graph, model, and etc) into

app/src/main/assets.

@@ -120,7 +120,7 @@ each project.

cp mediapipe/modules/face_detection/face_detection_short_range.tflite /path/to/your/app/src/main/assets/

```

-

+

4. Modify app/build.gradle to add MediaPipe dependencies and MediaPipe AAR.

diff --git a/docs/getting_started/android_solutions.md b/docs/getting_started/android_solutions.md

index 9df98043f..2333cd664 100644

--- a/docs/getting_started/android_solutions.md

+++ b/docs/getting_started/android_solutions.md

@@ -55,18 +55,18 @@ To build these apps:

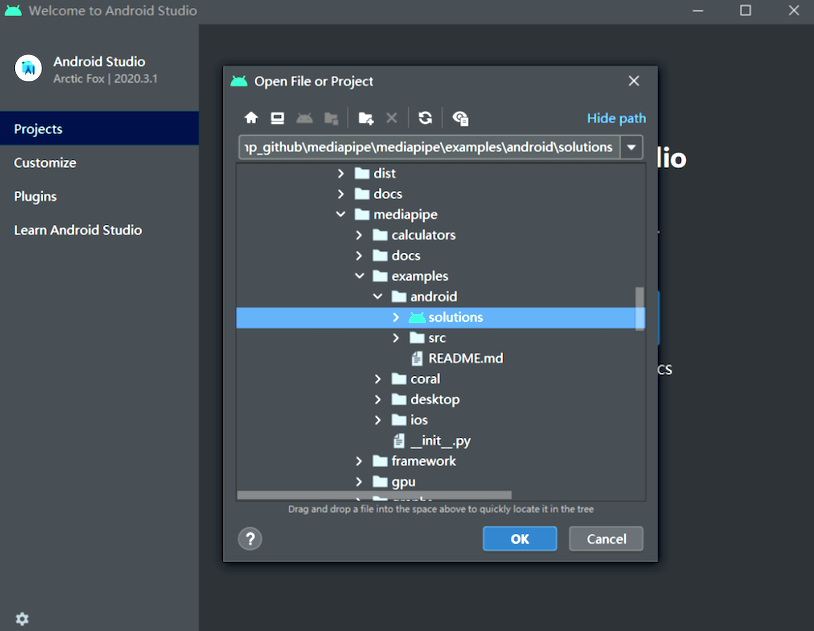

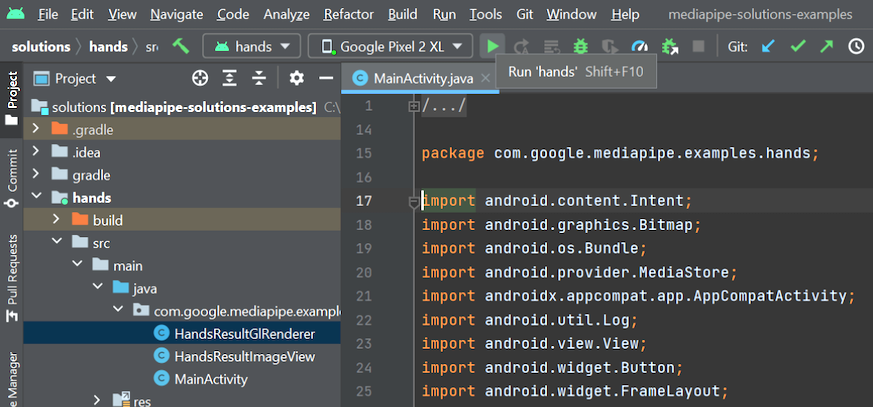

2. Import mediapipe/examples/android/solutions directory into Android Studio.

-

+

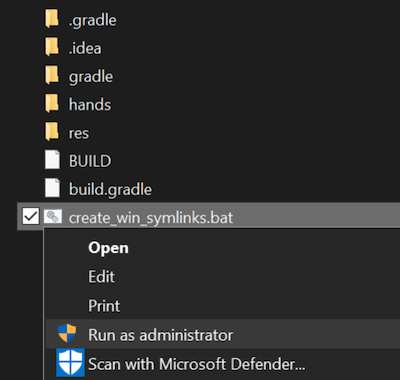

3. For Windows users, run `create_win_symlinks.bat` as administrator to create

res directory symlinks.

-

+

4. Select "File" -> "Sync Project with Gradle Files" to sync project.

5. Run solution example app in Android Studio.

-

+

6. (Optional) Run solutions on CPU.

diff --git a/docs/getting_started/hello_world_android.md b/docs/getting_started/hello_world_android.md

index 6674d4023..a80432173 100644

--- a/docs/getting_started/hello_world_android.md

+++ b/docs/getting_started/hello_world_android.md

@@ -27,7 +27,7 @@ graph on Android.

A simple camera app for real-time Sobel edge detection applied to a live video

stream on an Android device.

-

+

## Setup

@@ -69,7 +69,7 @@ node: {

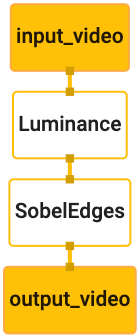

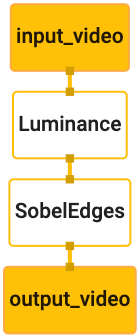

A visualization of the graph is shown below:

-

+

This graph has a single input stream named `input_video` for all incoming frames

that will be provided by your device's camera.

@@ -260,7 +260,7 @@ adb install bazel-bin/$APPLICATION_PATH/helloworld.apk

Open the application on your device. It should display a screen with the text

`Hello World!`.

-

+

## Using the camera via `CameraX`

@@ -377,7 +377,7 @@ Add the following line in the `$APPLICATION_PATH/res/values/strings.xml` file:

When the user doesn't grant camera permission, the screen will now look like

this:

-

+

Now, we will add the [`SurfaceTexture`] and [`SurfaceView`] objects to

`MainActivity`:

@@ -753,7 +753,7 @@ And that's it! You should now be able to successfully build and run the

application on the device and see Sobel edge detection running on a live camera

feed! Congrats!

-

+

If you ran into any issues, please see the full code of the tutorial

[here](https://github.com/google/mediapipe/tree/master/mediapipe/examples/android/src/java/com/google/mediapipe/apps/basic).

diff --git a/docs/getting_started/hello_world_cpp.md b/docs/getting_started/hello_world_cpp.md

index e3d34d9b4..b1bd54bef 100644

--- a/docs/getting_started/hello_world_cpp.md

+++ b/docs/getting_started/hello_world_cpp.md

@@ -85,7 +85,7 @@ nav_order: 1

This graph consists of 1 graph input stream (`in`) and 1 graph output stream

(`out`), and 2 [`PassThroughCalculator`]s connected serially.

-

+

4. Before running the graph, an `OutputStreamPoller` object is connected to the

output stream in order to later retrieve the graph output, and a graph run

diff --git a/docs/getting_started/hello_world_ios.md b/docs/getting_started/hello_world_ios.md

index dd75d416a..b03b995f0 100644

--- a/docs/getting_started/hello_world_ios.md

+++ b/docs/getting_started/hello_world_ios.md

@@ -27,7 +27,7 @@ on iOS.

A simple camera app for real-time Sobel edge detection applied to a live video

stream on an iOS device.

-

+

## Setup

@@ -67,7 +67,7 @@ node: {

A visualization of the graph is shown below:

-

+

This graph has a single input stream named `input_video` for all incoming frames

that will be provided by your device's camera.

@@ -580,7 +580,7 @@ Update the interface definition of `ViewController` with `MPPGraphDelegate`:

And that is all! Build and run the app on your iOS device. You should see the

results of running the edge detection graph on a live video feed. Congrats!

-

+

Please note that the iOS examples now use a [common] template app. The code in

this tutorial is used in the [common] template app. The [helloworld] app has the

diff --git a/docs/getting_started/python.md b/docs/getting_started/python.md

index 83550be84..289988e55 100644

--- a/docs/getting_started/python.md

+++ b/docs/getting_started/python.md

@@ -113,9 +113,8 @@ Nvidia Jetson and Raspberry Pi, please read

Download the latest protoc win64 zip from

[the Protobuf GitHub repo](https://github.com/protocolbuffers/protobuf/releases),

- unzip the file, and copy the protoc.exe executable to a preferred

- location. Please ensure that location is added into the Path environment

- variable.

+ unzip the file, and copy the protoc.exe executable to a preferred location.

+ Please ensure that location is added into the Path environment variable.

3. Activate a Python virtual environment.

@@ -131,16 +130,14 @@ Nvidia Jetson and Raspberry Pi, please read

(mp_env)mediapipe$ pip3 install -r requirements.txt

```

-6. Generate and install MediaPipe package.

+6. Build and install MediaPipe package.

```bash

- (mp_env)mediapipe$ python3 setup.py gen_protos

(mp_env)mediapipe$ python3 setup.py install --link-opencv

```

or

```bash

- (mp_env)mediapipe$ python3 setup.py gen_protos

(mp_env)mediapipe$ python3 setup.py bdist_wheel

```

diff --git a/docs/images/accelerated.png b/docs/images/accelerated.png

deleted file mode 100644

index 8c9d241ca..000000000

Binary files a/docs/images/accelerated.png and /dev/null differ

diff --git a/docs/images/accelerated_small.png b/docs/images/accelerated_small.png

deleted file mode 100644

index 759542dc4..000000000

Binary files a/docs/images/accelerated_small.png and /dev/null differ

diff --git a/docs/images/add_ipa.png b/docs/images/add_ipa.png

deleted file mode 100644

index 6fb793487..000000000

Binary files a/docs/images/add_ipa.png and /dev/null differ

diff --git a/docs/images/app_ipa.png b/docs/images/app_ipa.png

deleted file mode 100644

index ebbe0ec87..000000000

Binary files a/docs/images/app_ipa.png and /dev/null differ

diff --git a/docs/images/app_ipa_added.png b/docs/images/app_ipa_added.png

deleted file mode 100644

index e6b1efd1b..000000000

Binary files a/docs/images/app_ipa_added.png and /dev/null differ

diff --git a/docs/images/attention_mesh_architecture.png b/docs/images/attention_mesh_architecture.png

deleted file mode 100644

index 3a38de5c9..000000000

Binary files a/docs/images/attention_mesh_architecture.png and /dev/null differ

diff --git a/docs/images/autoflip_edited_example.gif b/docs/images/autoflip_edited_example.gif

deleted file mode 100644

index c36fa573c..000000000

Binary files a/docs/images/autoflip_edited_example.gif and /dev/null differ

diff --git a/docs/images/autoflip_graph.png b/docs/images/autoflip_graph.png

deleted file mode 100644

index 55a647f6d..000000000

Binary files a/docs/images/autoflip_graph.png and /dev/null differ

diff --git a/docs/images/autoflip_is_required.gif b/docs/images/autoflip_is_required.gif

deleted file mode 100644

index 7db883470..000000000

Binary files a/docs/images/autoflip_is_required.gif and /dev/null differ

diff --git a/docs/images/bazel_permission.png b/docs/images/bazel_permission.png

deleted file mode 100644

index e67dd72dc..000000000

Binary files a/docs/images/bazel_permission.png and /dev/null differ

diff --git a/docs/images/box_coordinate.svg b/docs/images/box_coordinate.svg

deleted file mode 100644

index f436de896..000000000

--- a/docs/images/box_coordinate.svg

+++ /dev/null

@@ -1,3 +0,0 @@

-

-

-

diff --git a/docs/images/camera_coordinate.svg b/docs/images/camera_coordinate.svg

deleted file mode 100644

index 4cd3158ee..000000000

--- a/docs/images/camera_coordinate.svg

+++ /dev/null

@@ -1,3 +0,0 @@

-

-

-

diff --git a/docs/images/click_subgraph_handdetection.png b/docs/images/click_subgraph_handdetection.png

deleted file mode 100644

index 32cf3a1da..000000000

Binary files a/docs/images/click_subgraph_handdetection.png and /dev/null differ

diff --git a/docs/images/console_error.png b/docs/images/console_error.png

deleted file mode 100644

index 749fdf7a9..000000000

Binary files a/docs/images/console_error.png and /dev/null differ

diff --git a/docs/images/cross_platform.png b/docs/images/cross_platform.png

deleted file mode 100644

index 09dedc96a..000000000

Binary files a/docs/images/cross_platform.png and /dev/null differ

diff --git a/docs/images/cross_platform_small.png b/docs/images/cross_platform_small.png

deleted file mode 100644

index 7476327b2..000000000

Binary files a/docs/images/cross_platform_small.png and /dev/null differ

diff --git a/docs/images/cyclic_integer_sum_graph.svg b/docs/images/cyclic_integer_sum_graph.svg

deleted file mode 100644

index ac7d42a77..000000000

--- a/docs/images/cyclic_integer_sum_graph.svg

+++ /dev/null

@@ -1,4 +0,0 @@

-

-

-

-

diff --git a/docs/images/device.png b/docs/images/device.png

deleted file mode 100644

index d911a24c2..000000000

Binary files a/docs/images/device.png and /dev/null differ

diff --git a/docs/images/editor_view.png b/docs/images/editor_view.png

deleted file mode 100644

index bfeac701f..000000000

Binary files a/docs/images/editor_view.png and /dev/null differ

diff --git a/docs/images/face_detection_desktop.png b/docs/images/face_detection_desktop.png

deleted file mode 100644

index 7f5f8ab1b..000000000

Binary files a/docs/images/face_detection_desktop.png and /dev/null differ

diff --git a/docs/images/face_geometry_metric_3d_space.gif b/docs/images/face_geometry_metric_3d_space.gif

deleted file mode 100644

index 1ecd20921..000000000

Binary files a/docs/images/face_geometry_metric_3d_space.gif and /dev/null differ

diff --git a/docs/images/face_geometry_renderer.gif b/docs/images/face_geometry_renderer.gif

deleted file mode 100644

index 1f18f765f..000000000

Binary files a/docs/images/face_geometry_renderer.gif and /dev/null differ

diff --git a/docs/images/face_mesh_ar_effects.gif b/docs/images/face_mesh_ar_effects.gif

deleted file mode 100644

index cf56ec719..000000000

Binary files a/docs/images/face_mesh_ar_effects.gif and /dev/null differ

diff --git a/docs/images/favicon.ico b/docs/images/favicon.ico

deleted file mode 100644

index 9ac07a20c..000000000

Binary files a/docs/images/favicon.ico and /dev/null differ

diff --git a/docs/images/faviconv2.ico b/docs/images/faviconv2.ico

deleted file mode 100644

index 30dce7213..000000000

Binary files a/docs/images/faviconv2.ico and /dev/null differ

diff --git a/docs/images/gpu_example_graph.png b/docs/images/gpu_example_graph.png

deleted file mode 100644

index e6f995e5a..000000000

Binary files a/docs/images/gpu_example_graph.png and /dev/null differ

diff --git a/docs/images/graph_visual.png b/docs/images/graph_visual.png

deleted file mode 100644

index 691d8df20..000000000

Binary files a/docs/images/graph_visual.png and /dev/null differ

diff --git a/docs/images/hand_tracking_desktop.png b/docs/images/hand_tracking_desktop.png

deleted file mode 100644

index 30ea34de5..000000000

Binary files a/docs/images/hand_tracking_desktop.png and /dev/null differ

diff --git a/docs/images/hello_world.png b/docs/images/hello_world.png

deleted file mode 100644

index 1005d7ffc..000000000

Binary files a/docs/images/hello_world.png and /dev/null differ

diff --git a/docs/images/iconv2.png b/docs/images/iconv2.png

deleted file mode 100644

index 74b3c7ae4..000000000

Binary files a/docs/images/iconv2.png and /dev/null differ

diff --git a/docs/images/import_mp_android_studio_project.png b/docs/images/import_mp_android_studio_project.png

deleted file mode 100644

index aa02b95ce..000000000

Binary files a/docs/images/import_mp_android_studio_project.png and /dev/null differ

diff --git a/docs/images/knift_stop_sign.gif b/docs/images/knift_stop_sign.gif

deleted file mode 100644

index a84b4aa19..000000000

Binary files a/docs/images/knift_stop_sign.gif and /dev/null differ

diff --git a/docs/images/logo.png b/docs/images/logo.png

deleted file mode 100644

index 1cca19eb2..000000000

Binary files a/docs/images/logo.png and /dev/null differ

diff --git a/docs/images/logo_horizontal_black.png b/docs/images/logo_horizontal_black.png

deleted file mode 100644

index 89f708fd0..000000000

Binary files a/docs/images/logo_horizontal_black.png and /dev/null differ

diff --git a/docs/images/logo_horizontal_color.png b/docs/images/logo_horizontal_color.png

deleted file mode 100644

index 6779a0d2a..000000000

Binary files a/docs/images/logo_horizontal_color.png and /dev/null differ

diff --git a/docs/images/logo_horizontal_white.png b/docs/images/logo_horizontal_white.png

deleted file mode 100644

index bd0e6d9ef..000000000

Binary files a/docs/images/logo_horizontal_white.png and /dev/null differ

diff --git a/docs/images/logov2.png b/docs/images/logov2.png

deleted file mode 100644

index 74b3c7ae4..000000000

Binary files a/docs/images/logov2.png and /dev/null differ

diff --git a/docs/images/maingraph_visualizer.png b/docs/images/maingraph_visualizer.png

deleted file mode 100644

index d34865c41..000000000

Binary files a/docs/images/maingraph_visualizer.png and /dev/null differ

diff --git a/docs/images/mediapipe_small.png b/docs/images/mediapipe_small.png

deleted file mode 100644

index 368e2b651..000000000

Binary files a/docs/images/mediapipe_small.png and /dev/null differ

diff --git a/docs/images/mobile/aar_location.png b/docs/images/mobile/aar_location.png

deleted file mode 100644

index 3dde1fa18..000000000

Binary files a/docs/images/mobile/aar_location.png and /dev/null differ

diff --git a/docs/images/mobile/assets_location.png b/docs/images/mobile/assets_location.png

deleted file mode 100644

index d22dbfaa5..000000000

Binary files a/docs/images/mobile/assets_location.png and /dev/null differ

diff --git a/docs/images/mobile/bazel_hello_world_android.png b/docs/images/mobile/bazel_hello_world_android.png

deleted file mode 100644

index 758e68cb8..000000000

Binary files a/docs/images/mobile/bazel_hello_world_android.png and /dev/null differ

diff --git a/docs/images/mobile/box_tracking_subgraph.png b/docs/images/mobile/box_tracking_subgraph.png

deleted file mode 100644

index fccfc65eb..000000000

Binary files a/docs/images/mobile/box_tracking_subgraph.png and /dev/null differ

diff --git a/docs/images/mobile/edge_detection_android_gpu.gif b/docs/images/mobile/edge_detection_android_gpu.gif

deleted file mode 100644

index a78a39876..000000000

Binary files a/docs/images/mobile/edge_detection_android_gpu.gif and /dev/null differ

diff --git a/docs/images/mobile/edge_detection_ios_gpu.gif b/docs/images/mobile/edge_detection_ios_gpu.gif

deleted file mode 100644

index 6d1a73060..000000000

Binary files a/docs/images/mobile/edge_detection_ios_gpu.gif and /dev/null differ

diff --git a/docs/images/mobile/edge_detection_mobile_gpu.png b/docs/images/mobile/edge_detection_mobile_gpu.png

deleted file mode 100644

index a082ec1d0..000000000

Binary files a/docs/images/mobile/edge_detection_mobile_gpu.png and /dev/null differ

diff --git a/docs/images/mobile/face_detection_android_gpu.gif b/docs/images/mobile/face_detection_android_gpu.gif

deleted file mode 100644

index 75d9228b3..000000000

Binary files a/docs/images/mobile/face_detection_android_gpu.gif and /dev/null differ

diff --git a/docs/images/mobile/face_detection_android_gpu_small.gif b/docs/images/mobile/face_detection_android_gpu_small.gif

deleted file mode 100644

index 0476602a3..000000000

Binary files a/docs/images/mobile/face_detection_android_gpu_small.gif and /dev/null differ

diff --git a/docs/images/mobile/face_detection_mobile_cpu.png b/docs/images/mobile/face_detection_mobile_cpu.png

deleted file mode 100644

index e57caa23a..000000000

Binary files a/docs/images/mobile/face_detection_mobile_cpu.png and /dev/null differ

diff --git a/docs/images/mobile/face_detection_mobile_gpu.png b/docs/images/mobile/face_detection_mobile_gpu.png

deleted file mode 100644

index 452b1a17f..000000000

Binary files a/docs/images/mobile/face_detection_mobile_gpu.png and /dev/null differ

diff --git a/docs/images/mobile/face_landmark_front_gpu_subgraph.png b/docs/images/mobile/face_landmark_front_gpu_subgraph.png

deleted file mode 100644

index a97b3da0b..000000000

Binary files a/docs/images/mobile/face_landmark_front_gpu_subgraph.png and /dev/null differ

diff --git a/docs/images/mobile/face_mesh_android_gpu.gif b/docs/images/mobile/face_mesh_android_gpu.gif

deleted file mode 100644

index cdba62021..000000000

Binary files a/docs/images/mobile/face_mesh_android_gpu.gif and /dev/null differ

diff --git a/docs/images/mobile/face_mesh_android_gpu_small.gif b/docs/images/mobile/face_mesh_android_gpu_small.gif

deleted file mode 100644

index 5ab431ef5..000000000

Binary files a/docs/images/mobile/face_mesh_android_gpu_small.gif and /dev/null differ

diff --git a/docs/images/mobile/face_mesh_mobile.png b/docs/images/mobile/face_mesh_mobile.png

deleted file mode 100644

index 0a109d617..000000000

Binary files a/docs/images/mobile/face_mesh_mobile.png and /dev/null differ

diff --git a/docs/images/mobile/face_renderer_gpu_subgraph.png b/docs/images/mobile/face_renderer_gpu_subgraph.png

deleted file mode 100644

index c53d854bd..000000000

Binary files a/docs/images/mobile/face_renderer_gpu_subgraph.png and /dev/null differ

diff --git a/docs/images/mobile/hair_segmentation_android_gpu.gif b/docs/images/mobile/hair_segmentation_android_gpu.gif

deleted file mode 100644

index 565f1849a..000000000

Binary files a/docs/images/mobile/hair_segmentation_android_gpu.gif and /dev/null differ

diff --git a/docs/images/mobile/hair_segmentation_android_gpu_small.gif b/docs/images/mobile/hair_segmentation_android_gpu_small.gif

deleted file mode 100644

index 737ef1506..000000000

Binary files a/docs/images/mobile/hair_segmentation_android_gpu_small.gif and /dev/null differ

diff --git a/docs/images/mobile/hair_segmentation_mobile_gpu.png b/docs/images/mobile/hair_segmentation_mobile_gpu.png

deleted file mode 100644

index 2a87ee834..000000000

Binary files a/docs/images/mobile/hair_segmentation_mobile_gpu.png and /dev/null differ

diff --git a/docs/images/mobile/hand_crops.png b/docs/images/mobile/hand_crops.png

deleted file mode 100644

index 46195aab0..000000000

Binary files a/docs/images/mobile/hand_crops.png and /dev/null differ

diff --git a/docs/images/mobile/hand_detection_android_gpu.gif b/docs/images/mobile/hand_detection_android_gpu.gif

deleted file mode 100644

index 38e32becf..000000000

Binary files a/docs/images/mobile/hand_detection_android_gpu.gif and /dev/null differ

diff --git a/docs/images/mobile/hand_detection_android_gpu_small.gif b/docs/images/mobile/hand_detection_android_gpu_small.gif

deleted file mode 100644

index bd61268fa..000000000

Binary files a/docs/images/mobile/hand_detection_android_gpu_small.gif and /dev/null differ

diff --git a/docs/images/mobile/hand_detection_gpu_subgraph.png b/docs/images/mobile/hand_detection_gpu_subgraph.png

deleted file mode 100644

index ba1fe9786..000000000

Binary files a/docs/images/mobile/hand_detection_gpu_subgraph.png and /dev/null differ

diff --git a/docs/images/mobile/hand_detection_mobile.png b/docs/images/mobile/hand_detection_mobile.png

deleted file mode 100644

index a0a763285..000000000

Binary files a/docs/images/mobile/hand_detection_mobile.png and /dev/null differ

diff --git a/docs/images/mobile/hand_landmark_gpu_subgraph.png b/docs/images/mobile/hand_landmark_gpu_subgraph.png

deleted file mode 100644

index 2e66d18d6..000000000

Binary files a/docs/images/mobile/hand_landmark_gpu_subgraph.png and /dev/null differ

diff --git a/docs/images/mobile/hand_landmarks.png b/docs/images/mobile/hand_landmarks.png

deleted file mode 100644

index f13746a86..000000000

Binary files a/docs/images/mobile/hand_landmarks.png and /dev/null differ

diff --git a/docs/images/mobile/hand_renderer_gpu_subgraph.png b/docs/images/mobile/hand_renderer_gpu_subgraph.png

deleted file mode 100644

index a32117252..000000000

Binary files a/docs/images/mobile/hand_renderer_gpu_subgraph.png and /dev/null differ

diff --git a/docs/images/mobile/hand_tracking_3d_android_gpu.gif b/docs/images/mobile/hand_tracking_3d_android_gpu.gif

deleted file mode 100644

index 60a95d438..000000000

Binary files a/docs/images/mobile/hand_tracking_3d_android_gpu.gif and /dev/null differ

diff --git a/docs/images/mobile/hand_tracking_android_gpu.gif b/docs/images/mobile/hand_tracking_android_gpu.gif

deleted file mode 100644

index b40e2986b..000000000

Binary files a/docs/images/mobile/hand_tracking_android_gpu.gif and /dev/null differ

diff --git a/docs/images/mobile/hand_tracking_android_gpu_small.gif b/docs/images/mobile/hand_tracking_android_gpu_small.gif

deleted file mode 100644

index c657edae0..000000000

Binary files a/docs/images/mobile/hand_tracking_android_gpu_small.gif and /dev/null differ

diff --git a/docs/images/mobile/hand_tracking_mobile.png b/docs/images/mobile/hand_tracking_mobile.png

deleted file mode 100644

index fb70f5e66..000000000

Binary files a/docs/images/mobile/hand_tracking_mobile.png and /dev/null differ

diff --git a/docs/images/mobile/holistic_pipeline_example.jpg b/docs/images/mobile/holistic_pipeline_example.jpg

deleted file mode 100644

index a35b3784b..000000000

Binary files a/docs/images/mobile/holistic_pipeline_example.jpg and /dev/null differ

diff --git a/docs/images/mobile/holistic_sports_and_gestures_example.gif b/docs/images/mobile/holistic_sports_and_gestures_example.gif

deleted file mode 100644

index d579e77ab..000000000

Binary files a/docs/images/mobile/holistic_sports_and_gestures_example.gif and /dev/null differ

diff --git a/docs/images/mobile/holistic_tracking_android_gpu_small.gif b/docs/images/mobile/holistic_tracking_android_gpu_small.gif

deleted file mode 100644

index 8cf0c226f..000000000

Binary files a/docs/images/mobile/holistic_tracking_android_gpu_small.gif and /dev/null differ

diff --git a/docs/images/mobile/instant_motion_tracking_android_small.gif b/docs/images/mobile/instant_motion_tracking_android_small.gif

deleted file mode 100644

index ff6d5537f..000000000

Binary files a/docs/images/mobile/instant_motion_tracking_android_small.gif and /dev/null differ

diff --git a/docs/images/mobile/iris_tracking_android_gpu.gif b/docs/images/mobile/iris_tracking_android_gpu.gif

deleted file mode 100644

index 6214d9e5c..000000000

Binary files a/docs/images/mobile/iris_tracking_android_gpu.gif and /dev/null differ

diff --git a/docs/images/mobile/iris_tracking_android_gpu_small.gif b/docs/images/mobile/iris_tracking_android_gpu_small.gif

deleted file mode 100644

index 050355476..000000000

Binary files a/docs/images/mobile/iris_tracking_android_gpu_small.gif and /dev/null differ

diff --git a/docs/images/mobile/iris_tracking_depth_from_iris.gif b/docs/images/mobile/iris_tracking_depth_from_iris.gif

deleted file mode 100644

index 2bcc80ea2..000000000

Binary files a/docs/images/mobile/iris_tracking_depth_from_iris.gif and /dev/null differ

diff --git a/docs/images/mobile/iris_tracking_example.gif b/docs/images/mobile/iris_tracking_example.gif

deleted file mode 100644

index 7988f3e95..000000000

Binary files a/docs/images/mobile/iris_tracking_example.gif and /dev/null differ

diff --git a/docs/images/mobile/iris_tracking_eye_and_iris_landmarks.png b/docs/images/mobile/iris_tracking_eye_and_iris_landmarks.png

deleted file mode 100644

index 1afb56395..000000000

Binary files a/docs/images/mobile/iris_tracking_eye_and_iris_landmarks.png and /dev/null differ

diff --git a/docs/images/mobile/missing_camera_permission_android.png b/docs/images/mobile/missing_camera_permission_android.png

deleted file mode 100644

index d492d56b8..000000000

Binary files a/docs/images/mobile/missing_camera_permission_android.png and /dev/null differ

diff --git a/docs/images/mobile/multi_hand_detection_gpu_subgraph.png b/docs/images/mobile/multi_hand_detection_gpu_subgraph.png

deleted file mode 100644

index 6105283b2..000000000

Binary files a/docs/images/mobile/multi_hand_detection_gpu_subgraph.png and /dev/null differ

diff --git a/docs/images/mobile/multi_hand_landmark_subgraph.png b/docs/images/mobile/multi_hand_landmark_subgraph.png

deleted file mode 100644

index 93f02bc42..000000000

Binary files a/docs/images/mobile/multi_hand_landmark_subgraph.png and /dev/null differ

diff --git a/docs/images/mobile/multi_hand_renderer_gpu_subgraph.png b/docs/images/mobile/multi_hand_renderer_gpu_subgraph.png

deleted file mode 100644

index 7da438e3f..000000000

Binary files a/docs/images/mobile/multi_hand_renderer_gpu_subgraph.png and /dev/null differ

diff --git a/docs/images/mobile/multi_hand_tracking_3d_android_gpu.gif b/docs/images/mobile/multi_hand_tracking_3d_android_gpu.gif

deleted file mode 100644

index 6aae8abca..000000000

Binary files a/docs/images/mobile/multi_hand_tracking_3d_android_gpu.gif and /dev/null differ

diff --git a/docs/images/mobile/multi_hand_tracking_3d_android_gpu_small.gif b/docs/images/mobile/multi_hand_tracking_3d_android_gpu_small.gif

deleted file mode 100644

index 24c101829..000000000

Binary files a/docs/images/mobile/multi_hand_tracking_3d_android_gpu_small.gif and /dev/null differ

diff --git a/docs/images/mobile/multi_hand_tracking_android_gpu.gif b/docs/images/mobile/multi_hand_tracking_android_gpu.gif

deleted file mode 100644

index 1e20dd082..000000000

Binary files a/docs/images/mobile/multi_hand_tracking_android_gpu.gif and /dev/null differ

diff --git a/docs/images/mobile/multi_hand_tracking_mobile.png b/docs/images/mobile/multi_hand_tracking_mobile.png

deleted file mode 100644

index b9eb410f3..000000000

Binary files a/docs/images/mobile/multi_hand_tracking_mobile.png and /dev/null differ

diff --git a/docs/images/mobile/object_detection_3d_android_gpu.png b/docs/images/mobile/object_detection_3d_android_gpu.png

deleted file mode 100644

index 4b0372d16..000000000

Binary files a/docs/images/mobile/object_detection_3d_android_gpu.png and /dev/null differ

diff --git a/docs/images/mobile/object_detection_android_cpu.gif b/docs/images/mobile/object_detection_android_cpu.gif

deleted file mode 100644

index 66c07d6ca..000000000

Binary files a/docs/images/mobile/object_detection_android_cpu.gif and /dev/null differ

diff --git a/docs/images/mobile/object_detection_android_gpu.gif b/docs/images/mobile/object_detection_android_gpu.gif

deleted file mode 100644

index 25e75f862..000000000

Binary files a/docs/images/mobile/object_detection_android_gpu.gif and /dev/null differ

diff --git a/docs/images/mobile/object_detection_android_gpu_small.gif b/docs/images/mobile/object_detection_android_gpu_small.gif

deleted file mode 100644

index db55678ba..000000000

Binary files a/docs/images/mobile/object_detection_android_gpu_small.gif and /dev/null differ

diff --git a/docs/images/mobile/object_detection_gpu_subgraph.png b/docs/images/mobile/object_detection_gpu_subgraph.png

deleted file mode 100644

index 8c4a24f95..000000000

Binary files a/docs/images/mobile/object_detection_gpu_subgraph.png and /dev/null differ

diff --git a/docs/images/mobile/object_detection_mobile_cpu.png b/docs/images/mobile/object_detection_mobile_cpu.png

deleted file mode 100644

index 48d7fb88e..000000000

Binary files a/docs/images/mobile/object_detection_mobile_cpu.png and /dev/null differ

diff --git a/docs/images/mobile/object_detection_mobile_gpu.png b/docs/images/mobile/object_detection_mobile_gpu.png

deleted file mode 100644

index 3f9ee6926..000000000

Binary files a/docs/images/mobile/object_detection_mobile_gpu.png and /dev/null differ

diff --git a/docs/images/mobile/object_tracking_android_gpu.gif b/docs/images/mobile/object_tracking_android_gpu.gif

deleted file mode 100644

index ed6f84ce7..000000000

Binary files a/docs/images/mobile/object_tracking_android_gpu.gif and /dev/null differ

diff --git a/docs/images/mobile/object_tracking_android_gpu_detection_only.gif b/docs/images/mobile/object_tracking_android_gpu_detection_only.gif

deleted file mode 100644

index b2c68520e..000000000

Binary files a/docs/images/mobile/object_tracking_android_gpu_detection_only.gif and /dev/null differ

diff --git a/docs/images/mobile/object_tracking_android_gpu_small.gif b/docs/images/mobile/object_tracking_android_gpu_small.gif

deleted file mode 100644

index db070efa2..000000000

Binary files a/docs/images/mobile/object_tracking_android_gpu_small.gif and /dev/null differ

diff --git a/docs/images/mobile/object_tracking_mobile_gpu.png b/docs/images/mobile/object_tracking_mobile_gpu.png

deleted file mode 100644

index de09514c1..000000000

Binary files a/docs/images/mobile/object_tracking_mobile_gpu.png and /dev/null differ

diff --git a/docs/images/mobile/object_tracking_renderer_gpu_subgraph.png b/docs/images/mobile/object_tracking_renderer_gpu_subgraph.png

deleted file mode 100644

index b164643a6..000000000

Binary files a/docs/images/mobile/object_tracking_renderer_gpu_subgraph.png and /dev/null differ

diff --git a/docs/images/mobile/object_tracking_subgraph.png b/docs/images/mobile/object_tracking_subgraph.png

deleted file mode 100644

index 8b7aa2143..000000000

Binary files a/docs/images/mobile/object_tracking_subgraph.png and /dev/null differ

diff --git a/docs/images/mobile/objectron_camera_android_gpu.gif b/docs/images/mobile/objectron_camera_android_gpu.gif

deleted file mode 100644

index 2ac32104d..000000000

Binary files a/docs/images/mobile/objectron_camera_android_gpu.gif and /dev/null differ

diff --git a/docs/images/mobile/objectron_chair_android_gpu.gif b/docs/images/mobile/objectron_chair_android_gpu.gif

deleted file mode 100644

index d2e0ef671..000000000

Binary files a/docs/images/mobile/objectron_chair_android_gpu.gif and /dev/null differ

diff --git a/docs/images/mobile/objectron_chair_android_gpu_small.gif b/docs/images/mobile/objectron_chair_android_gpu_small.gif

deleted file mode 100644

index 919bc0335..000000000

Binary files a/docs/images/mobile/objectron_chair_android_gpu_small.gif and /dev/null differ

diff --git a/docs/images/mobile/objectron_cup_android_gpu.gif b/docs/images/mobile/objectron_cup_android_gpu.gif

deleted file mode 100644

index 6b49e8f17..000000000

Binary files a/docs/images/mobile/objectron_cup_android_gpu.gif and /dev/null differ

diff --git a/docs/images/mobile/objectron_detection_subgraph.png b/docs/images/mobile/objectron_detection_subgraph.png

deleted file mode 100644

index 4d3bbc422..000000000

Binary files a/docs/images/mobile/objectron_detection_subgraph.png and /dev/null differ

diff --git a/docs/images/mobile/objectron_shoe_android_gpu.gif b/docs/images/mobile/objectron_shoe_android_gpu.gif

deleted file mode 100644

index ad0ae3697..000000000

Binary files a/docs/images/mobile/objectron_shoe_android_gpu.gif and /dev/null differ

diff --git a/docs/images/mobile/objectron_shoe_android_gpu_small.gif b/docs/images/mobile/objectron_shoe_android_gpu_small.gif

deleted file mode 100644

index 611f85dbe..000000000

Binary files a/docs/images/mobile/objectron_shoe_android_gpu_small.gif and /dev/null differ

diff --git a/docs/images/mobile/objectron_tracking_subgraph.png b/docs/images/mobile/objectron_tracking_subgraph.png

deleted file mode 100644

index 34296a502..000000000

Binary files a/docs/images/mobile/objectron_tracking_subgraph.png and /dev/null differ

diff --git a/docs/images/mobile/pose_classification_pairwise_distances.png b/docs/images/mobile/pose_classification_pairwise_distances.png

deleted file mode 100644

index 1aa2206df..000000000

Binary files a/docs/images/mobile/pose_classification_pairwise_distances.png and /dev/null differ

diff --git a/docs/images/mobile/pose_classification_pushups_and_squats.gif b/docs/images/mobile/pose_classification_pushups_and_squats.gif

deleted file mode 100644

index fe75f3bca..000000000

Binary files a/docs/images/mobile/pose_classification_pushups_and_squats.gif and /dev/null differ

diff --git a/docs/images/mobile/pose_classification_pushups_un_and_down_samples.jpg b/docs/images/mobile/pose_classification_pushups_un_and_down_samples.jpg

deleted file mode 100644

index 269e1b86b..000000000

Binary files a/docs/images/mobile/pose_classification_pushups_un_and_down_samples.jpg and /dev/null differ

diff --git a/docs/images/mobile/pose_segmentation.mp4 b/docs/images/mobile/pose_segmentation.mp4

deleted file mode 100644

index e0a68da70..000000000

Binary files a/docs/images/mobile/pose_segmentation.mp4 and /dev/null differ

diff --git a/docs/images/mobile/pose_tracking_android_gpu.gif b/docs/images/mobile/pose_tracking_android_gpu.gif

deleted file mode 100644

index deff2f02e..000000000

Binary files a/docs/images/mobile/pose_tracking_android_gpu.gif and /dev/null differ

diff --git a/docs/images/mobile/pose_tracking_android_gpu_small.gif b/docs/images/mobile/pose_tracking_android_gpu_small.gif

deleted file mode 100644

index 9d3ec1522..000000000

Binary files a/docs/images/mobile/pose_tracking_android_gpu_small.gif and /dev/null differ

diff --git a/docs/images/mobile/pose_tracking_detector_vitruvian_man.png b/docs/images/mobile/pose_tracking_detector_vitruvian_man.png

deleted file mode 100644

index ca25a5063..000000000

Binary files a/docs/images/mobile/pose_tracking_detector_vitruvian_man.png and /dev/null differ

diff --git a/docs/images/mobile/pose_tracking_example.gif b/docs/images/mobile/pose_tracking_example.gif

deleted file mode 100644

index e88f12f11..000000000

Binary files a/docs/images/mobile/pose_tracking_example.gif and /dev/null differ

diff --git a/docs/images/mobile/pose_tracking_full_body_landmarks.png b/docs/images/mobile/pose_tracking_full_body_landmarks.png

deleted file mode 100644

index 89530d9e4..000000000

Binary files a/docs/images/mobile/pose_tracking_full_body_landmarks.png and /dev/null differ

diff --git a/docs/images/mobile/pose_tracking_pck_chart.png b/docs/images/mobile/pose_tracking_pck_chart.png

deleted file mode 100644

index 1fa4bf97d..000000000

Binary files a/docs/images/mobile/pose_tracking_pck_chart.png and /dev/null differ

diff --git a/docs/images/mobile/pose_tracking_upper_body_landmarks.png b/docs/images/mobile/pose_tracking_upper_body_landmarks.png

deleted file mode 100644

index e2e964ec1..000000000

Binary files a/docs/images/mobile/pose_tracking_upper_body_landmarks.png and /dev/null differ

diff --git a/docs/images/mobile/pose_world_landmarks.mp4 b/docs/images/mobile/pose_world_landmarks.mp4

deleted file mode 100644

index 4a5bf3016..000000000

Binary files a/docs/images/mobile/pose_world_landmarks.mp4 and /dev/null differ

diff --git a/docs/images/mobile/renderer_gpu.png b/docs/images/mobile/renderer_gpu.png

deleted file mode 100644

index 9b062b9b1..000000000

Binary files a/docs/images/mobile/renderer_gpu.png and /dev/null differ

diff --git a/docs/images/mobile/template_matching_android_cpu.gif b/docs/images/mobile/template_matching_android_cpu.gif

deleted file mode 100644

index 9aa0229e7..000000000

Binary files a/docs/images/mobile/template_matching_android_cpu.gif and /dev/null differ

diff --git a/docs/images/mobile/template_matching_android_cpu_small.gif b/docs/images/mobile/template_matching_android_cpu_small.gif

deleted file mode 100644

index 68f64aea6..000000000

Binary files a/docs/images/mobile/template_matching_android_cpu_small.gif and /dev/null differ

diff --git a/docs/images/mobile/template_matching_mobile_graph.png b/docs/images/mobile/template_matching_mobile_graph.png

deleted file mode 100644

index 3e8c2b5d1..000000000

Binary files a/docs/images/mobile/template_matching_mobile_graph.png and /dev/null differ

diff --git a/docs/images/mobile/template_matching_mobile_template.jpg b/docs/images/mobile/template_matching_mobile_template.jpg

deleted file mode 100644

index 2843efdf6..000000000

Binary files a/docs/images/mobile/template_matching_mobile_template.jpg and /dev/null differ

diff --git a/docs/images/multi_hand_tracking_android_gpu.gif b/docs/images/multi_hand_tracking_android_gpu.gif

deleted file mode 100644

index 2cc920c86..000000000

Binary files a/docs/images/multi_hand_tracking_android_gpu.gif and /dev/null differ

diff --git a/docs/images/multi_hand_tracking_android_gpu_small.gif b/docs/images/multi_hand_tracking_android_gpu_small.gif

deleted file mode 100644

index 572b3658f..000000000

Binary files a/docs/images/multi_hand_tracking_android_gpu_small.gif and /dev/null differ

diff --git a/docs/images/multi_hand_tracking_desktop.png b/docs/images/multi_hand_tracking_desktop.png

deleted file mode 100644

index 5f84ab2f8..000000000

Binary files a/docs/images/multi_hand_tracking_desktop.png and /dev/null differ

diff --git a/docs/images/ndc_coordinate.svg b/docs/images/ndc_coordinate.svg

deleted file mode 100644

index 038660fd4..000000000

--- a/docs/images/ndc_coordinate.svg

+++ /dev/null

@@ -1,3 +0,0 @@

-

-

-

diff --git a/docs/images/object_detection_desktop_tensorflow.png b/docs/images/object_detection_desktop_tensorflow.png

deleted file mode 100644

index 50d7597f1..000000000

Binary files a/docs/images/object_detection_desktop_tensorflow.png and /dev/null differ

diff --git a/docs/images/object_detection_desktop_tflite.png b/docs/images/object_detection_desktop_tflite.png

deleted file mode 100644

index 27963d13d..000000000

Binary files a/docs/images/object_detection_desktop_tflite.png and /dev/null differ

diff --git a/docs/images/objectron_2stage_network_architecture.png b/docs/images/objectron_2stage_network_architecture.png

deleted file mode 100644

index 591f31f64..000000000

Binary files a/docs/images/objectron_2stage_network_architecture.png and /dev/null differ

diff --git a/docs/images/objectron_data_annotation.gif b/docs/images/objectron_data_annotation.gif

deleted file mode 100644

index 6466f7735..000000000

Binary files a/docs/images/objectron_data_annotation.gif and /dev/null differ

diff --git a/docs/images/objectron_example_results.png b/docs/images/objectron_example_results.png

deleted file mode 100644

index 977da33cc..000000000

Binary files a/docs/images/objectron_example_results.png and /dev/null differ

diff --git a/docs/images/objectron_network_architecture.png b/docs/images/objectron_network_architecture.png

deleted file mode 100644

index 2f0b6d9b2..000000000

Binary files a/docs/images/objectron_network_architecture.png and /dev/null differ

diff --git a/docs/images/objectron_sample_network_results.png b/docs/images/objectron_sample_network_results.png

deleted file mode 100644

index e3ae90f8a..000000000

Binary files a/docs/images/objectron_sample_network_results.png and /dev/null differ

diff --git a/docs/images/objectron_synthetic_data_generation.gif b/docs/images/objectron_synthetic_data_generation.gif

deleted file mode 100644

index 77705cca3..000000000

Binary files a/docs/images/objectron_synthetic_data_generation.gif and /dev/null differ

diff --git a/docs/images/open_source.png b/docs/images/open_source.png

deleted file mode 100644

index f337c8748..000000000

Binary files a/docs/images/open_source.png and /dev/null differ

diff --git a/docs/images/open_source_small.png b/docs/images/open_source_small.png

deleted file mode 100644

index c64ca50d3..000000000

Binary files a/docs/images/open_source_small.png and /dev/null differ

diff --git a/docs/images/packet_cloner_calculator.png b/docs/images/packet_cloner_calculator.png

deleted file mode 100644

index f2c2102ff..000000000

Binary files a/docs/images/packet_cloner_calculator.png and /dev/null differ

diff --git a/docs/images/ready_to_use.png b/docs/images/ready_to_use.png

deleted file mode 100644

index fbccbe830..000000000

Binary files a/docs/images/ready_to_use.png and /dev/null differ

diff --git a/docs/images/ready_to_use_small.png b/docs/images/ready_to_use_small.png

deleted file mode 100644

index 5091faaf6..000000000

Binary files a/docs/images/ready_to_use_small.png and /dev/null differ

diff --git a/docs/images/realtime_face_detection.gif b/docs/images/realtime_face_detection.gif

deleted file mode 100644

index a517a68d2..000000000

Binary files a/docs/images/realtime_face_detection.gif and /dev/null differ

diff --git a/docs/images/run_android_solution_app.png b/docs/images/run_android_solution_app.png

deleted file mode 100644

index aa21f3c24..000000000

Binary files a/docs/images/run_android_solution_app.png and /dev/null differ

diff --git a/docs/images/run_create_win_symlinks.png b/docs/images/run_create_win_symlinks.png

deleted file mode 100644

index 69b94b75f..000000000

Binary files a/docs/images/run_create_win_symlinks.png and /dev/null differ

diff --git a/docs/images/selfie_segmentation_web.mp4 b/docs/images/selfie_segmentation_web.mp4

deleted file mode 100644

index d9e62838e..000000000

Binary files a/docs/images/selfie_segmentation_web.mp4 and /dev/null differ

diff --git a/docs/images/side_packet.png b/docs/images/side_packet.png

deleted file mode 100644

index 5155835c0..000000000

Binary files a/docs/images/side_packet.png and /dev/null differ

diff --git a/docs/images/side_packet_code.png b/docs/images/side_packet_code.png

deleted file mode 100644

index 88a610305..000000000

Binary files a/docs/images/side_packet_code.png and /dev/null differ

diff --git a/docs/images/special_nodes.png b/docs/images/special_nodes.png

deleted file mode 100644

index bcb7763c0..000000000

Binary files a/docs/images/special_nodes.png and /dev/null differ

diff --git a/docs/images/special_nodes_code.png b/docs/images/special_nodes_code.png

deleted file mode 100644

index 148c54a3b..000000000

Binary files a/docs/images/special_nodes_code.png and /dev/null differ

diff --git a/docs/images/startup_screen.png b/docs/images/startup_screen.png

deleted file mode 100644

index a841ee759..000000000

Binary files a/docs/images/startup_screen.png and /dev/null differ

diff --git a/docs/images/stream_code.png b/docs/images/stream_code.png

deleted file mode 100644

index eabcbfe3f..000000000

Binary files a/docs/images/stream_code.png and /dev/null differ

diff --git a/docs/images/stream_ui.png b/docs/images/stream_ui.png

deleted file mode 100644

index 553e75143..000000000

Binary files a/docs/images/stream_ui.png and /dev/null differ

diff --git a/docs/images/upload_2pbtxt.png b/docs/images/upload_2pbtxt.png

deleted file mode 100644

index 02a079ae8..000000000

Binary files a/docs/images/upload_2pbtxt.png and /dev/null differ

diff --git a/docs/images/upload_button.png b/docs/images/upload_button.png

deleted file mode 100644

index 086f8379b..000000000

Binary files a/docs/images/upload_button.png and /dev/null differ

diff --git a/docs/images/upload_graph_button.png b/docs/images/upload_graph_button.png

deleted file mode 100644

index 9cbf31a8e..000000000

Binary files a/docs/images/upload_graph_button.png and /dev/null differ

diff --git a/docs/images/visualizer/ios_download_container.png b/docs/images/visualizer/ios_download_container.png

deleted file mode 100644

index 375b5410f..000000000

Binary files a/docs/images/visualizer/ios_download_container.png and /dev/null differ

diff --git a/docs/images/visualizer/ios_window_devices.png b/docs/images/visualizer/ios_window_devices.png

deleted file mode 100644

index c778afeaa..000000000

Binary files a/docs/images/visualizer/ios_window_devices.png and /dev/null differ

diff --git a/docs/images/visualizer/viz_chart_view.png b/docs/images/visualizer/viz_chart_view.png

deleted file mode 100644

index f18061397..000000000

Binary files a/docs/images/visualizer/viz_chart_view.png and /dev/null differ

diff --git a/docs/images/visualizer/viz_click_upload.png b/docs/images/visualizer/viz_click_upload.png

deleted file mode 100644

index c2f0ab127..000000000

Binary files a/docs/images/visualizer/viz_click_upload.png and /dev/null differ

diff --git a/docs/images/visualizer/viz_click_upload_trace_file.png b/docs/images/visualizer/viz_click_upload_trace_file.png

deleted file mode 100644

index d1ba8a223..000000000

Binary files a/docs/images/visualizer/viz_click_upload_trace_file.png and /dev/null differ

diff --git a/docs/images/visualizer_runner.png b/docs/images/visualizer_runner.png

deleted file mode 100644

index 5224a0949..000000000

Binary files a/docs/images/visualizer_runner.png and /dev/null differ

diff --git a/docs/images/web_effect.gif b/docs/images/web_effect.gif

deleted file mode 100644

index dac8e236b..000000000

Binary files a/docs/images/web_effect.gif and /dev/null differ

diff --git a/docs/images/web_segmentation.gif b/docs/images/web_segmentation.gif

deleted file mode 100644

index 516a07d6c..000000000

Binary files a/docs/images/web_segmentation.gif and /dev/null differ

diff --git a/docs/solutions/autoflip.md b/docs/solutions/autoflip.md

index 676abcae8..820478dca 100644

--- a/docs/solutions/autoflip.md

+++ b/docs/solutions/autoflip.md

@@ -27,7 +27,7 @@ to arbitrary aspect ratios.

For overall context on AutoFlip, please read this

[Google AI Blog](https://ai.googleblog.com/2020/02/autoflip-open-source-framework-for.html).

-

+

## Building

@@ -61,7 +61,7 @@ command above accordingly to run AutoFlip against the videos.

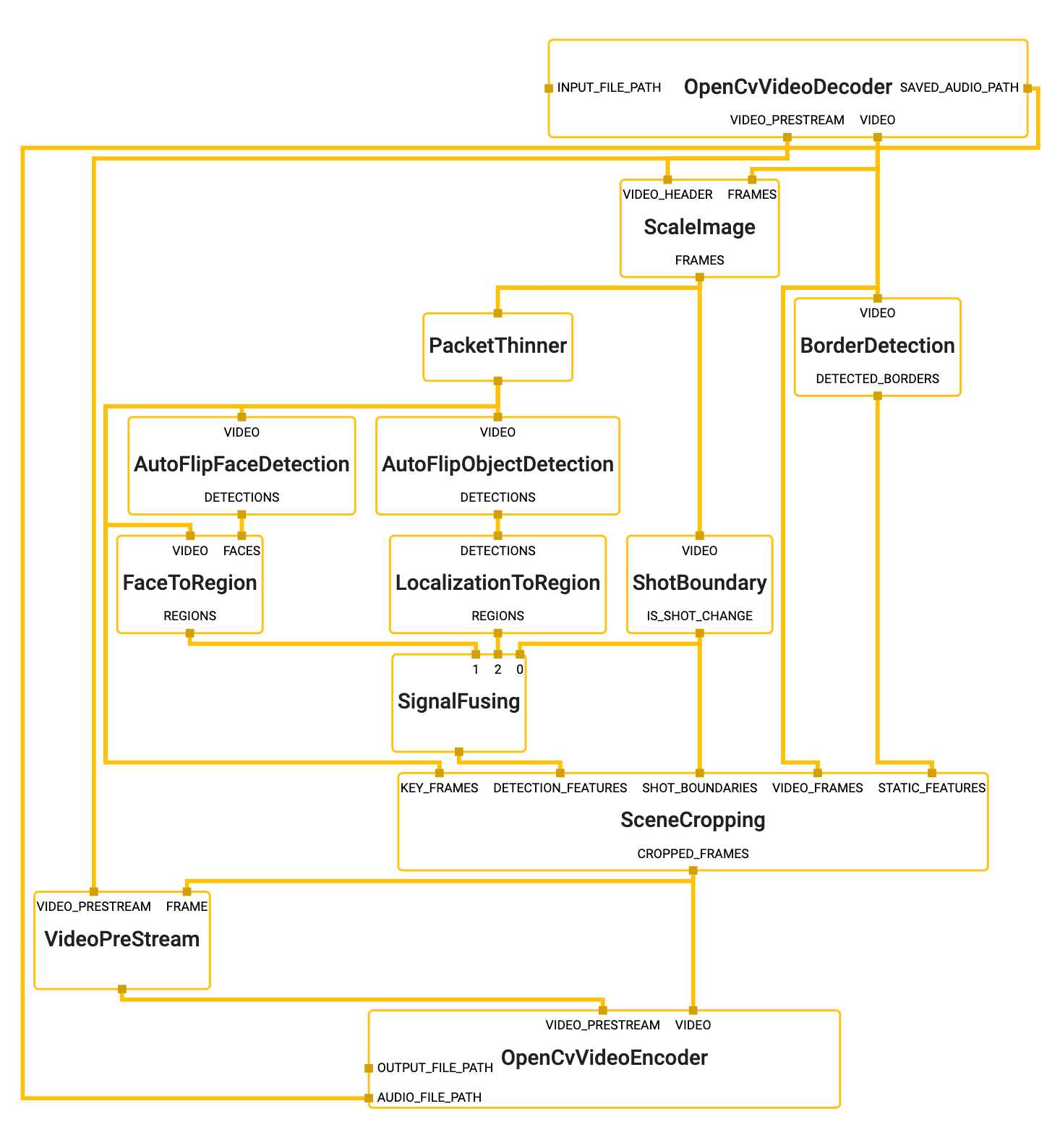

## MediaPipe Graph

-

+

To visualize the graph as shown above, copy the text specification of the graph

below and paste it into [MediaPipe Visualizer](https://viz.mediapipe.dev).

@@ -297,7 +297,7 @@ the required features cannot be all covered (for example, when they are too

spread out in the video), AutoFlip will apply a padding effect to cover as much

salient content as possible. See an illustration below.

-

+

### Stable vs Tracking Camera Motion

diff --git a/docs/solutions/box_tracking.md b/docs/solutions/box_tracking.md

index b84a015d1..0f65fbfca 100644

--- a/docs/solutions/box_tracking.md

+++ b/docs/solutions/box_tracking.md

@@ -80,7 +80,7 @@ frame (e.g., [MediaPipe Object Detection](./object_detection.md)):

* Object localization is temporally consistent with the help of tracking,

meaning less jitter is observable across frames.

- |

+ |

:----------------------------------------------------------------------------------: |

*Fig 1. Box tracking paired with ML-based object detection.* |

diff --git a/docs/solutions/face_detection.md b/docs/solutions/face_detection.md

index 4eccf17f5..9a56024ce 100644

--- a/docs/solutions/face_detection.md

+++ b/docs/solutions/face_detection.md

@@ -37,7 +37,7 @@ improved tie resolution strategy alternative to non-maximum suppression. For

more information about BlazeFace, please see the [Resources](#resources)

section.

-

+

## Solution APIs

diff --git a/docs/solutions/face_mesh.md b/docs/solutions/face_mesh.md

index ec43fb4ef..24ee760fc 100644

--- a/docs/solutions/face_mesh.md

+++ b/docs/solutions/face_mesh.md

@@ -38,7 +38,7 @@ lightweight statistical analysis method called

employed to drive a robust, performant and portable logic. The analysis runs on

CPU and has a minimal speed/memory footprint on top of the ML model inference.

- |

+ |

:-------------------------------------------------------------: |

*Fig 1. AR effects utilizing the 3D facial surface.* |

@@ -107,7 +107,7 @@ angle and occlusions.

You can find more information about the face landmark model in this

[paper](https://arxiv.org/abs/1907.06724).

- |

+ |

:------------------------------------------------------------------------: |

*Fig 2. Face landmarks: the red box indicates the cropped area as input to the landmark model, the red dots represent the 468 landmarks in 3D, and the green lines connecting landmarks illustrate the contours around the eyes, eyebrows, lips and the entire face.* |

@@ -124,7 +124,7 @@ The attention mesh model can be selected in the Solution APIs via the

[refine_landmarks](#refine_landmarks) option. You can also find more information

about the model in this [paper](https://arxiv.org/abs/2006.10962).

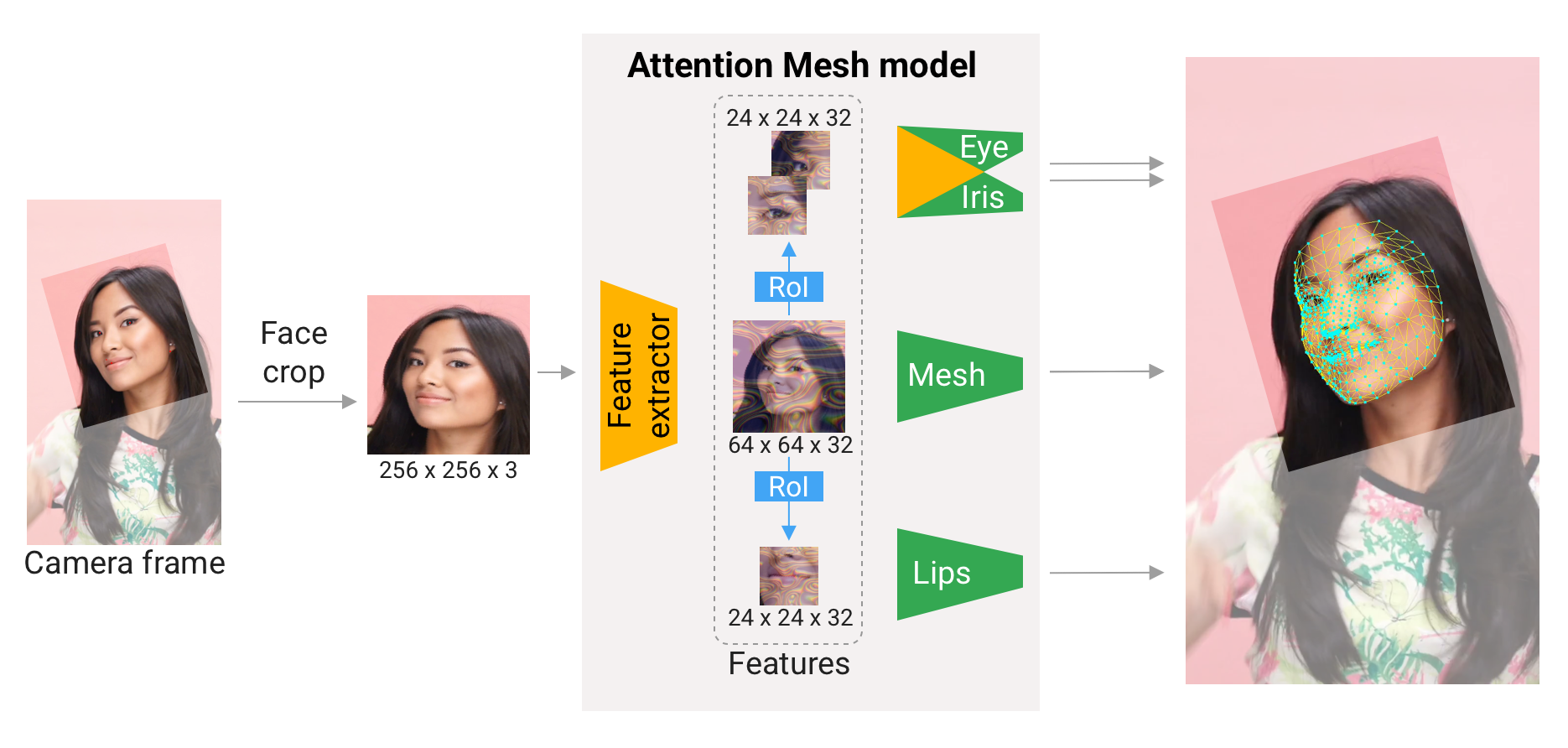

- |

+ |

:---------------------------------------------------------------------------: |

*Fig 3. Attention Mesh: Overview of model architecture.* |

@@ -161,7 +161,7 @@ coordinates back into the Metric 3D space. The *virtual camera parameters* can

be set freely, however for better results it is advised to set them as close to

the *real physical camera parameters* as possible.

- |

+ |

:-------------------------------------------------------------------------------: |

*Fig 4. A visualization of multiple key elements in the Metric 3D space.* |

@@ -225,7 +225,7 @@ hiding invisible elements behind the face surface.

The effect renderer is implemented as a MediaPipe

[calculator](https://github.com/google/mediapipe/tree/master/mediapipe/modules/face_geometry/effect_renderer_calculator.cc).

-|  |

+|  |

| :---------------------------------------------------------------------: |

| *Fig 5. An example of face effects rendered by the Face Transform Effect Renderer.* |

diff --git a/docs/solutions/hair_segmentation.md b/docs/solutions/hair_segmentation.md

index 9dd997b95..e94a4c79d 100644

--- a/docs/solutions/hair_segmentation.md

+++ b/docs/solutions/hair_segmentation.md

@@ -18,7 +18,7 @@ nav_order: 8

---

-

+

## Example Apps

@@ -58,7 +58,7 @@ processed all locally in real-time and never leaves your device. Please see

[MediaPipe on the Web](https://developers.googleblog.com/2020/01/mediapipe-on-web.html)

in Google Developers Blog for details.

-

+

## Resources

diff --git a/docs/solutions/hands.md b/docs/solutions/hands.md

index d73e32598..d3c245b76 100644

--- a/docs/solutions/hands.md

+++ b/docs/solutions/hands.md

@@ -38,7 +38,7 @@ hand perception functionality to the wider research and development community

will result in an emergence of creative use cases, stimulating new applications

and new research avenues.

- |

+ |

:------------------------------------------------------------------------------------: |

*Fig 1. Tracked 3D hand landmarks are represented by dots in different shades, with the brighter ones denoting landmarks closer to the camera.* |

@@ -91,9 +91,9 @@ To detect initial hand locations, we designed a

mobile real-time uses in a manner similar to the face detection model in

[MediaPipe Face Mesh](./face_mesh.md). Detecting hands is a decidedly complex

task: our

-[lite model](https://github.com/google/mediapipe/tree/master/mediapipe/modules/palm_detection/palm_detection_lite.tflite)

+[lite model](https://storage.googleapis.com/mediapipe-assets/palm_detection_lite.tflite)

and

-[full model](https://github.com/google/mediapipe/tree/master/mediapipe/modules/palm_detection/palm_detection_full.tflite)

+[full model](https://storage.googleapis.com/mediapipe-assets/palm_detection_full.tflite)

have to work across a variety of hand sizes with a large scale span (~20x)

relative to the image frame and be able to detect occluded and self-occluded

hands. Whereas faces have high contrast patterns, e.g., in the eye and mouth

@@ -122,7 +122,7 @@ just 86.22%.

### Hand Landmark Model

After the palm detection over the whole image our subsequent hand landmark

-[model](https://github.com/google/mediapipe/tree/master/mediapipe/modules/hand_landmark/hand_landmark_full.tflite)

+[model](https://storage.googleapis.com/mediapipe-assets/hand_landmark_full.tflite)

performs precise keypoint localization of 21 3D hand-knuckle coordinates inside

the detected hand regions via regression, that is direct coordinate prediction.

The model learns a consistent internal hand pose representation and is robust

@@ -135,11 +135,11 @@ and provide additional supervision on the nature of hand geometry, we also

render a high-quality synthetic hand model over various backgrounds and map it

to the corresponding 3D coordinates.

- |

+ |

:--------------------------------------------------------: |

*Fig 2. 21 hand landmarks.* |

- |

+ |

:-------------------------------------------------------------------------: |

*Fig 3. Top: Aligned hand crops passed to the tracking network with ground truth annotation. Bottom: Rendered synthetic hand images with ground truth annotation.* |

diff --git a/docs/solutions/holistic.md b/docs/solutions/holistic.md

index d0ab0b801..8c552834e 100644

--- a/docs/solutions/holistic.md

+++ b/docs/solutions/holistic.md

@@ -29,7 +29,7 @@ solutions for these tasks. Combining them all in real-time into a semantically

consistent end-to-end solution is a uniquely difficult problem requiring

simultaneous inference of multiple, dependent neural networks.

- |

+ |

:----------------------------------------------------------------------------------------------------: |

*Fig 1. Example of MediaPipe Holistic.* |

@@ -54,7 +54,7 @@ full-resolution input frame to these ROIs and apply task-specific face and hand

models to estimate their corresponding landmarks. Finally, we merge all

landmarks with those of the pose model to yield the full 540+ landmarks.

- |

+ |

:------------------------------------------------------------------------------: |

*Fig 2. MediaPipe Holistic Pipeline Overview.* |

diff --git a/docs/solutions/instant_motion_tracking.md b/docs/solutions/instant_motion_tracking.md

index 9fea7ec1c..6bdbe5e02 100644

--- a/docs/solutions/instant_motion_tracking.md

+++ b/docs/solutions/instant_motion_tracking.md

@@ -30,7 +30,7 @@ platforms without initialization or calibration. It is built upon the

Tracking, you can easily place virtual 2D and 3D content on static or moving

surfaces, allowing them to seamlessly interact with the real-world environment.

- |

+ |

:-----------------------------------------------------------------------: |

*Fig 1. Instant Motion Tracking is used to augment the world with a 3D sticker.* |

diff --git a/docs/solutions/iris.md b/docs/solutions/iris.md

index af71c895f..1d36f74ca 100644

--- a/docs/solutions/iris.md

+++ b/docs/solutions/iris.md

@@ -43,7 +43,7 @@ of the MediaPipe framework, MediaPipe Iris can run on most modern

[mobile phones](#mobile), [desktops/laptops](#desktop) and even on the

[web](#web).

- |

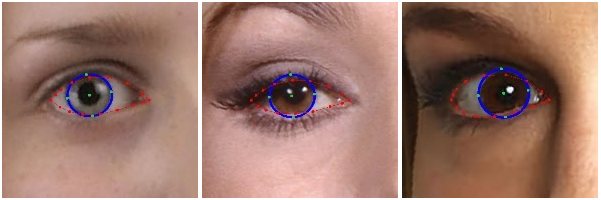

+ |

:------------------------------------------------------------------------: |

*Fig 1. Example of MediaPipe Iris: eyelid (red) and iris (blue) contours.* |

@@ -102,7 +102,7 @@ The iris model takes an image patch of the eye region and estimates both the eye

landmarks (along the eyelid) and iris landmarks (along the iris contour). You

can find more details in this [paper](https://arxiv.org/abs/2006.11341).

- |

+ |

:----------------------------------------------------------------------------------------------------: |

*Fig 2. Eye landmarks (red) and iris landmarks (green).* |

@@ -115,7 +115,7 @@ human eye remains roughly constant at 11.7±0.5 mm across a wide population,

along with some simple geometric arguments. For more details please refer to our

[Google AI Blog post](https://ai.googleblog.com/2020/08/mediapipe-iris-real-time-iris-tracking.html).

- |

+ |

:--------------------------------------------------------------------------------------------: |

*Fig 3. (Left) MediaPipe Iris predicting metric distance in cm on a Pixel 2 from iris tracking without use of a depth sensor. (Right) Ground-truth depth.* |

@@ -200,7 +200,7 @@ never leaves your device. Please see

[MediaPipe on the Web](https://developers.googleblog.com/2020/01/mediapipe-on-web.html)

in Google Developers Blog for details.

-

+

* [MediaPipe Iris](https://viz.mediapipe.dev/demo/iris_tracking)

* [MediaPipe Iris: Depth-from-Iris](https://viz.mediapipe.dev/demo/iris_depth)

diff --git a/docs/solutions/knift.md b/docs/solutions/knift.md

index b008f1496..f2ec398ff 100644

--- a/docs/solutions/knift.md

+++ b/docs/solutions/knift.md

@@ -23,7 +23,7 @@ nav_order: 13

MediaPipe KNIFT is a template-based feature matching solution using KNIFT

(Keypoint Neural Invariant Feature Transform).

- |

+ |

:-----------------------------------------------------------------------: |

*Fig 1. Matching a real Stop Sign with a Stop Sign template using KNIFT.* |

@@ -56,7 +56,7 @@ For more information, please see

[MediaPipe KNIFT: Template-based feature matching](https://developers.googleblog.com/2020/04/mediapipe-knift-template-based-feature-matching.html)

in Google Developers Blog.

- |

+ |

:-------------------------------------------------------------------------------------: |

*Fig 2. Matching US dollar bills using KNIFT.* |

@@ -70,7 +70,7 @@ pre-computed from the 3 template images (of US dollar bills) shown below. If

you'd like to use your own template images, see

[Matching Your Own Template Images](#matching-your-own-template-images).

-

+

Please first see general instructions for

[Android](../getting_started/android.md) on how to build MediaPipe examples.

diff --git a/docs/solutions/models.md b/docs/solutions/models.md

index b2f59a9c8..18bcf0c8b 100644

--- a/docs/solutions/models.md

+++ b/docs/solutions/models.md

@@ -15,14 +15,14 @@ nav_order: 30

### [Face Detection](https://google.github.io/mediapipe/solutions/face_detection)

* Short-range model (best for faces within 2 meters from the camera):

- [TFLite model](https://github.com/google/mediapipe/tree/master/mediapipe/modules/face_detection/face_detection_short_range.tflite),

+ [TFLite model](https://storage.googleapis.com/mediapipe-assets/face_detection_short_range.tflite),

[TFLite model quantized for EdgeTPU/Coral](https://github.com/google/mediapipe/tree/master/mediapipe/examples/coral/models/face-detector-quantized_edgetpu.tflite),

[Model card](https://mediapipe.page.link/blazeface-mc)

* Full-range model (dense, best for faces within 5 meters from the camera):

- [TFLite model](https://github.com/google/mediapipe/tree/master/mediapipe/modules/face_detection/face_detection_full_range.tflite),

+ [TFLite model](https://storage.googleapis.com/mediapipe-assets/face_detection_full_range.tflite),

[Model card](https://mediapipe.page.link/blazeface-back-mc)

* Full-range model (sparse, best for faces within 5 meters from the camera):

- [TFLite model](https://github.com/google/mediapipe/tree/master/mediapipe/modules/face_detection/face_detection_full_range_sparse.tflite),

+ [TFLite model](https://storage.googleapis.com/mediapipe-assets/face_detection_full_range_sparse.tflite),

[Model card](https://mediapipe.page.link/blazeface-back-sparse-mc)

Full-range dense and sparse models have the same quality in terms of

@@ -39,77 +39,77 @@ one over the other.

### [Face Mesh](https://google.github.io/mediapipe/solutions/face_mesh)

* Face landmark model:

- [TFLite model](https://github.com/google/mediapipe/tree/master/mediapipe/modules/face_landmark/face_landmark.tflite),

+ [TFLite model](https://storage.googleapis.com/mediapipe-assets/face_landmark.tflite),

[TF.js model](https://tfhub.dev/mediapipe/facemesh/1)

* Face landmark model w/ attention (aka Attention Mesh):

- [TFLite model](https://github.com/google/mediapipe/tree/master/mediapipe/modules/face_landmark/face_landmark_with_attention.tflite)

+ [TFLite model](https://storage.googleapis.com/mediapipe-assets/face_landmark_with_attention.tflite)

* [Model card](https://mediapipe.page.link/facemesh-mc),

[Model card (w/ attention)](https://mediapipe.page.link/attentionmesh-mc)

### [Iris](https://google.github.io/mediapipe/solutions/iris)

* Iris landmark model:

- [TFLite model](https://github.com/google/mediapipe/tree/master/mediapipe/modules/iris_landmark/iris_landmark.tflite)

+ [TFLite model](https://storage.googleapis.com/mediapipe-assets/iris_landmark.tflite)

* [Model card](https://mediapipe.page.link/iris-mc)

### [Hands](https://google.github.io/mediapipe/solutions/hands)

* Palm detection model:

- [TFLite model (lite)](https://github.com/google/mediapipe/tree/master/mediapipe/modules/palm_detection/palm_detection_lite.tflite),

- [TFLite model (full)](https://github.com/google/mediapipe/tree/master/mediapipe/modules/palm_detection/palm_detection_full.tflite),

+ [TFLite model (lite)](https://storage.googleapis.com/mediapipe-assets/palm_detection_lite.tflite),

+ [TFLite model (full)](https://storage.googleapis.com/mediapipe-assets/palm_detection_full.tflite),

[TF.js model](https://tfhub.dev/mediapipe/handdetector/1)

* Hand landmark model:

- [TFLite model (lite)](https://github.com/google/mediapipe/tree/master/mediapipe/modules/hand_landmark/hand_landmark_lite.tflite),

- [TFLite model (full)](https://github.com/google/mediapipe/tree/master/mediapipe/modules/hand_landmark/hand_landmark_full.tflite),

+ [TFLite model (lite)](https://storage.googleapis.com/mediapipe-assets/hand_landmark_lite.tflite),

+ [TFLite model (full)](https://storage.googleapis.com/mediapipe-assets/hand_landmark_full.tflite),

[TF.js model](https://tfhub.dev/mediapipe/handskeleton/1)

* [Model card](https://mediapipe.page.link/handmc)

### [Pose](https://google.github.io/mediapipe/solutions/pose)

* Pose detection model: