Compare commits

1 commit

master ... revert-428

| Author | SHA1 | Date |
|---|---|---|
| | 7d05709f62 | |

6 .bazelrc

@@ -87,9 +87,6 @@ build:ios_fat --config=ios
build:ios_fat --ios_multi_cpus=armv7,arm64
build:ios_fat --watchos_cpus=armv7k

build:ios_sim_fat --config=ios
build:ios_sim_fat --ios_multi_cpus=x86_64,sim_arm64

build:darwin_x86_64 --apple_platform_type=macos
build:darwin_x86_64 --macos_minimum_os=10.12
build:darwin_x86_64 --cpu=darwin_x86_64

@@ -98,9 +95,6 @@ build:darwin_arm64 --apple_platform_type=macos
build:darwin_arm64 --macos_minimum_os=10.16
build:darwin_arm64 --cpu=darwin_arm64

# Turn off maximum stdout size
build --experimental_ui_max_stdouterr_bytes=-1

# This bazelrc file is meant to be written by a setup script.
try-import %workspace%/.configure.bazelrc
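In the `.bazelrc` hunks above, a `build:<name>` line only takes effect when Bazel is run with `--config=<name>`. One config can also pull in another, as `build:ios_fat --config=ios` does. The Python sketch below illustrates that expansion under some simplifying assumptions: one command per line, flags separated by whitespace, and no handling of `try-import`, `import`, or other commands. `parse_bazelrc` and `expand_config` are hypothetical helpers, not Bazel APIs. The `build:ios` line in the sample is also made up, because the `ios` config itself is not part of this diff.

```python
from __future__ import annotations

from collections import defaultdict


def parse_bazelrc(text: str) -> dict[str, list[str]]:
    """Group flags by config name: 'build:ios_fat ...' -> 'ios_fat', plain 'build' -> ''."""
    configs: dict[str, list[str]] = defaultdict(list)
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        command, *flags = line.split()
        if command == "build" or command.startswith("build:"):
            name = command.partition(":")[2]  # "" for the unnamed 'build' section
            configs[name].extend(flags)
        # Other commands (try-import, test:..., etc.) are ignored in this sketch.
    return dict(configs)


def expand_config(configs: dict[str, list[str]], name: str,
                  _stack: tuple[str, ...] = ()) -> list[str]:
    """Inline every --config=<x> flag of config `name`, depth first, keeping order."""
    if name in _stack:
        raise ValueError(f"--config cycle: {' -> '.join(_stack + (name,))}")
    if name not in configs:
        raise KeyError(f"config '{name}' is not defined")
    expanded: list[str] = []
    for flag in configs[name]:
        if flag.startswith("--config="):
            child = flag.split("=", 1)[1]
            expanded.extend(expand_config(configs, child, _stack + (name,)))
        else:
            expanded.append(flag)
    return expanded


# Hypothetical sample: 'build:ios' is invented; the ios_fat lines come from the diff.
RC = """
build:ios --apple_platform_type=ios
build:ios_fat --config=ios
build:ios_fat --ios_multi_cpus=armv7,arm64
build:ios_fat --watchos_cpus=armv7k
"""

print(expand_config(parse_bazelrc(RC), "ios_fat"))
```

Running it prints `['--apple_platform_type=ios', '--ios_multi_cpus=armv7,arm64', '--watchos_cpus=armv7k']`. Plain `build` lines apply to every build. The sketch collects them under the empty name `""` and deliberately does not merge them into the expanded list.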

@@ -48,16 +48,18 @@ body:
placeholder: e.g. C++, Python, Java
validations:
required: false
- type: input
- type: textarea
id: current_model
attributes:
label: Describe the actual behavior
render: shell
validations:
required: false
- type: input
- type: textarea
id: expected_model
attributes:
label: Describe the expected behaviour
render: shell
validations:
required: false
- type: textarea

@@ -80,16 +80,18 @@ body:
label: Xcode & Tulsi version (if issue is related to building for iOS)
validations:
required: false
- type: input
- type: textarea
id: current_model
attributes:
label: Describe the actual behavior
render: shell
validations:
required: true
- type: input
- type: textarea
id: expected_model
attributes:
label: Describe the expected behaviour
render: shell
validations:
required: true
- type: textarea

@@ -87,13 +87,14 @@ body:
placeholder:
validations:
required: false
- type: input
- type: textarea
id: what-happened
attributes:
label: Describe the problem
description: Provide the exact sequence of commands / steps that you executed before running into the [problem](https://google.github.io/mediapipe/getting_started/getting_started.html)
placeholder: Tell us what you see!
value: "A bug happened!"
render: shell
validations:
required: true
- type: textarea

@@ -28,33 +28,37 @@ body:
- 'No'
validations:
required: false
- type: input
- type: textarea
id: behaviour
attributes:
label: Describe the feature and the current behaviour/state
render: shell
validations:
required: true
- type: input
- type: textarea
id: api_change
attributes:
label: Will this change the current API? How?
render: shell
validations:
required: false
- type: input
- type: textarea
id: benifit
attributes:
label: Who will benefit with this feature?
validations:
required: false
- type: input
- type: textarea
id: use_case
attributes:
label: Please specify the use cases for this feature
render: shell
validations:
required: true
- type: input
- type: textarea
id: info_other
attributes:
label: Any Other info
render: shell
validations:
required: false

@@ -41,16 +41,18 @@ body:
label: Task name (e.g. Image classification, Gesture recognition etc.)
validations:
required: true
- type: input
- type: textarea
id: current_model
attributes:
label: Describe the actual behavior
render: shell
validations:
required: true
- type: input
- type: textarea
id: expected_model
attributes:
label: Describe the expected behaviour
render: shell
validations:
required: true
- type: textarea

@@ -31,16 +31,18 @@ body:
label: URL that shows the problem
validations:
required: false
- type: input
- type: textarea
id: current_model
attributes:
label: Describe the actual behavior
render: shell
validations:
required: false
- type: input
- type: textarea
id: expected_model
attributes:
label: Describe the expected behaviour
render: shell
validations:
required: false
- type: textarea

@@ -40,16 +40,18 @@ body:
label: Programming Language and version (e.g. C++, Python, Java)
validations:
required: true
- type: input
- type: textarea
id: current_model
attributes:
label: Describe the actual behavior
render: shell
validations:
required: true
- type: input
- type: textarea
id: expected_model
attributes:
label: Describe the expected behaviour
render: shell
validations:
required: true
- type: textarea

34 .github/stale.yml (vendored, Normal file)

@@ -0,0 +1,34 @@
# Copyright 2021 The MediaPipe Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
# ============================================================================
#
# This file was assembled from multiple pieces, whose use is documented
# throughout. Please refer to the TensorFlow dockerfiles documentation
# for more information.

# Number of days of inactivity before an Issue or Pull Request becomes stale
daysUntilStale: 7
# Number of days of inactivity before a stale Issue or Pull Request is closed
daysUntilClose: 7
# Only issues or pull requests with all of these labels are checked if stale. Defaults to `[]` (disabled)
onlyLabels:
- stat:awaiting response
# Comment to post when marking as stale. Set to `false` to disable
markComment: >
This issue has been automatically marked as stale because it has not had
recent activity. It will be closed if no further activity occurs. Thank you.
# Comment to post when removing the stale label. Set to `false` to disable
unmarkComment: false
closeComment: >
Closing as stale. Please reopen if you'd like to work on this further.

68 .github/workflows/stale.yaml (vendored)

@@ -1,68 +0,0 @@
# Copyright 2023 The TensorFlow Authors. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
# ==============================================================================

# This workflow alerts and then closes the stale issues/PRs after specific time
# You can adjust the behavior by modifying this file.
# For more information, see:
# https://github.com/actions/stale

name: 'Close stale issues and PRs'
"on":
schedule:
- cron: "30 1 * * *"
permissions:
contents: read
issues: write
pull-requests: write
jobs:
stale:
runs-on: ubuntu-latest
steps:
- uses: 'actions/stale@v7'
with:
# Comma separated list of labels that can be assigned to issues to exclude them from being marked as stale.
exempt-issue-labels: 'override-stale'
# Comma separated list of labels that can be assigned to PRs to exclude them from being marked as stale.
exempt-pr-labels: "override-stale"
# Limit the No. of API calls in one run default value is 30.
operations-per-run: 500
# Prevent to remove stale label when PRs or issues are updated.
remove-stale-when-updated: true
# List of labels to remove when issues/PRs unstale.
labels-to-remove-when-unstale: 'stat:awaiting response'
# comment on issue if not active for more then 7 days.
stale-issue-message: 'This issue has been marked stale because it has no recent activity since 7 days. It will be closed if no further activity occurs. Thank you.'
# comment on PR if not active for more then 14 days.
stale-pr-message: 'This PR has been marked stale because it has no recent activity since 14 days. It will be closed if no further activity occurs. Thank you.'
# comment on issue if stale for more then 7 days.
close-issue-message: This issue was closed due to lack of activity after being marked stale for past 7 days.
# comment on PR if stale for more then 14 days.
close-pr-message: This PR was closed due to lack of activity after being marked stale for past 14 days.
# Number of days of inactivity before an Issue Request becomes stale
days-before-issue-stale: 7
# Number of days of inactivity before a stale Issue is closed
days-before-issue-close: 7
# reason for closed the issue default value is not_planned
close-issue-reason: completed
# Number of days of inactivity before a stale PR is closed
days-before-pr-close: 14
# Number of days of inactivity before an PR Request becomes stale
days-before-pr-stale: 14
# Check for label to stale or close the issue/PR
any-of-labels: 'stat:awaiting response'
# override stale to stalled for PR
stale-pr-label: 'stale'
# override stale to stalled for Issue
stale-issue-label: "stale"
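The `actions/stale` inputs in the `stale.yaml` hunk above only apply to items that carry `stat:awaiting response` (`any-of-labels`). Such an issue is labeled `stale` after 7 days without activity and closed 7 days after that. A pull request takes 14 days plus 14 days, because `days-before-*-close` counts from the moment the item is marked stale. Any update in between removes the `stale` label (`remove-stale-when-updated: true`), and the countdown starts over. The Python sketch below works out those dates. `stale_timeline` is a hypothetical helper. It ignores two details: the job only runs once a day at 01:30 UTC (`cron: "30 1 * * *"`), and a run can stop early because of the `operations-per-run` limit.

```python
from __future__ import annotations

from datetime import date, timedelta

# Day counts copied from the workflow inputs above.
POLICY = {
    "issue": {"days_before_stale": 7, "days_before_close": 7},  # days-before-issue-*
    "pr": {"days_before_stale": 14, "days_before_close": 14},   # days-before-pr-*
}


def stale_timeline(kind: str, last_activity: date) -> tuple[date, date]:
    """Return (marked_stale_on, closed_on) for an item with no further activity.

    Assumes the item has the 'stat:awaiting response' label and no exempt
    label, and that the close countdown starts when it is marked stale.
    """
    policy = POLICY[kind]
    stale_on = last_activity + timedelta(days=policy["days_before_stale"])
    close_on = stale_on + timedelta(days=policy["days_before_close"])
    return stale_on, close_on


for kind in ("issue", "pr"):
    stale_on, close_on = stale_timeline(kind, date(2023, 6, 1))
    print(kind, stale_on.isoformat(), close_on.isoformat())
```

For a last activity on 2023-06-01, this prints `issue 2023-06-08 2023-06-15` and `pr 2023-06-15 2023-06-29`.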

200 README.md

@ -1,121 +1,99 @@

|

|||

---

|

||||

layout: forward

|

||||

target: https://developers.google.com/mediapipe

|

||||

layout: default

|

||||

title: Home

|

||||

nav_order: 1

|

||||

---

|

||||

|

||||

|

||||

|

||||

----

|

||||

|

||||

**Attention:** *We have moved to

|

||||

**Attention:** *Thanks for your interest in MediaPipe! We have moved to

|

||||

[https://developers.google.com/mediapipe](https://developers.google.com/mediapipe)

|

||||

as the primary developer documentation site for MediaPipe as of April 3, 2023.*

|

||||

|

||||

|

||||

*This notice and web page will be removed on June 1, 2023.*

|

||||

|

||||

**Attention**: MediaPipe Solutions Preview is an early release. [Learn

|

||||

more](https://developers.google.com/mediapipe/solutions/about#notice).

|

||||

----

|

||||

|

||||

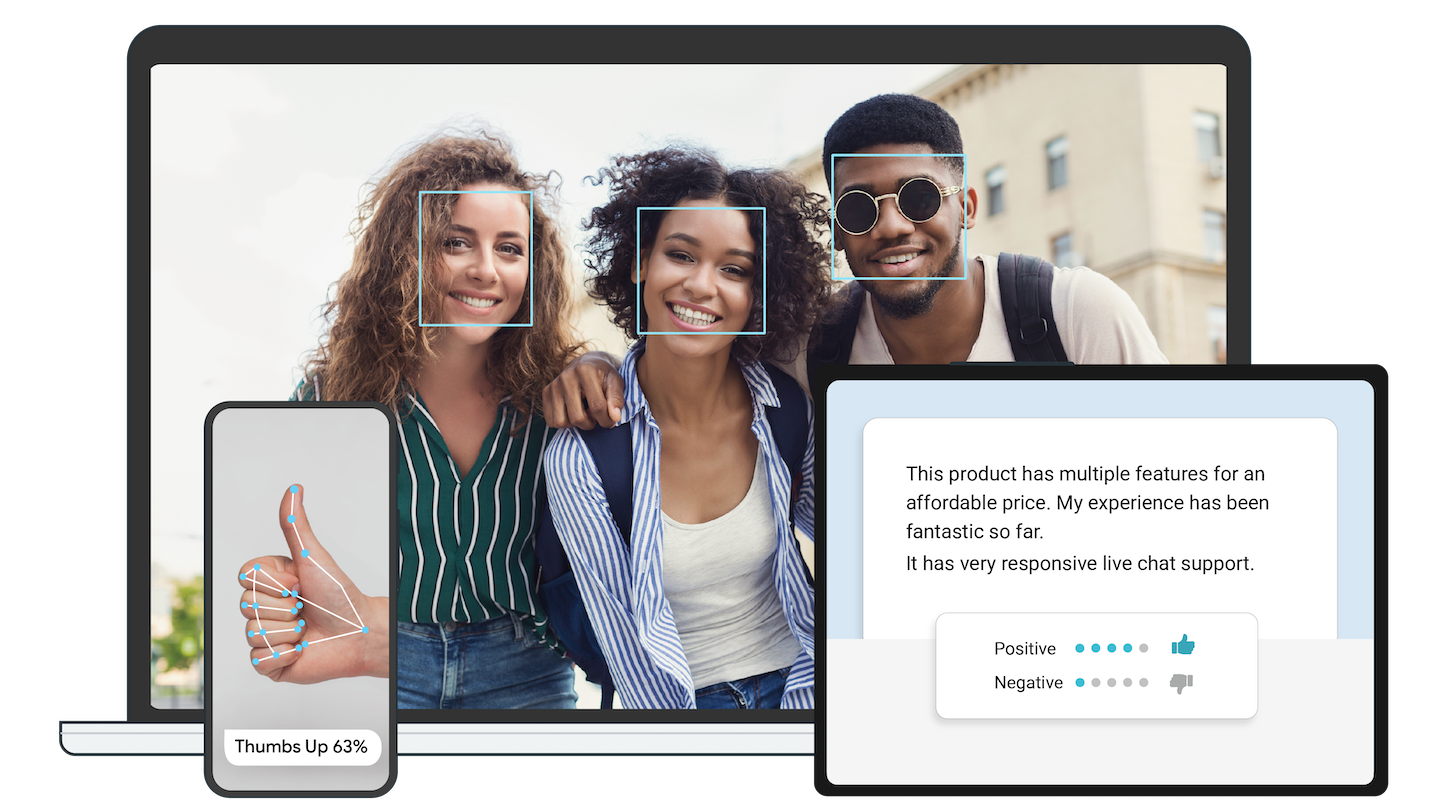

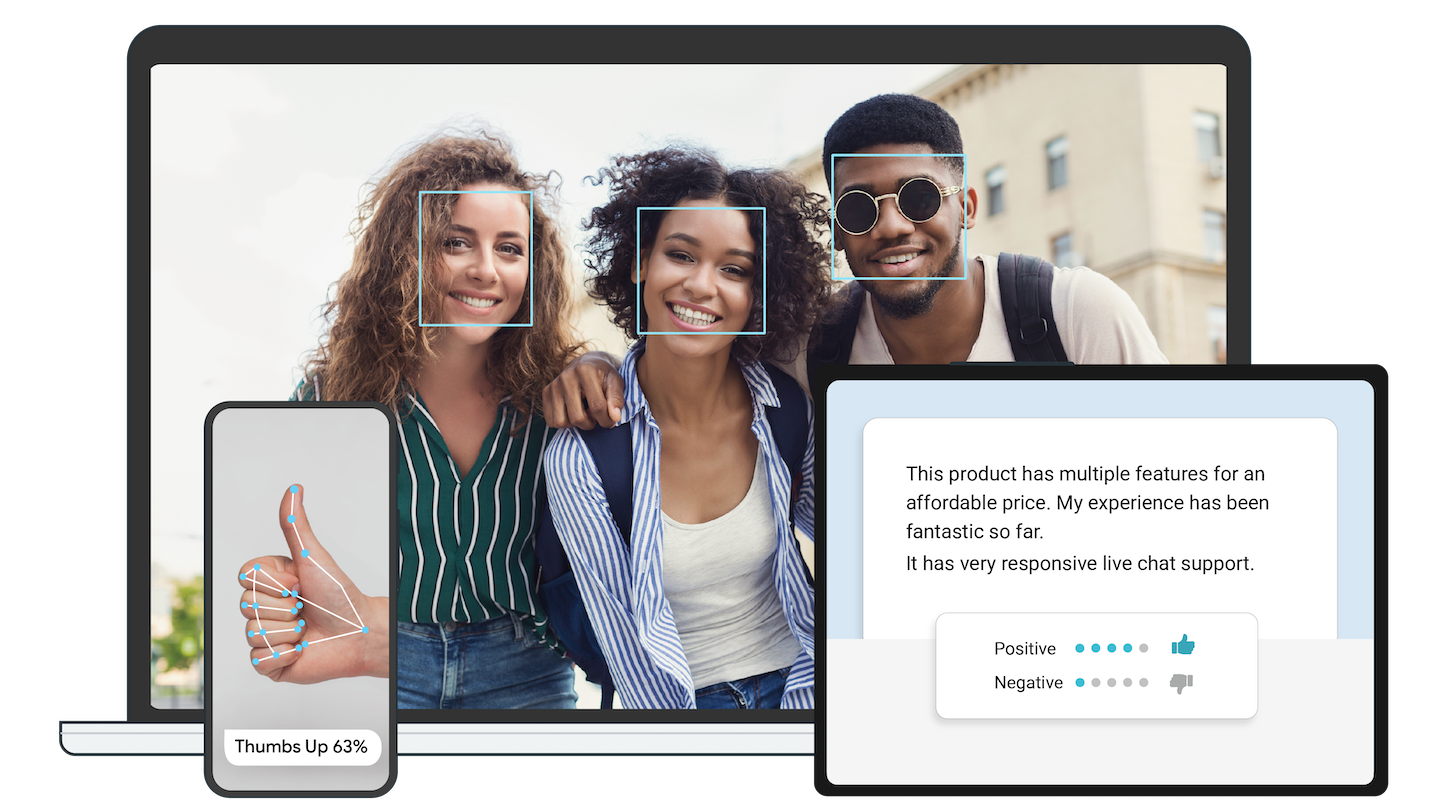

**On-device machine learning for everyone**

|

||||

<br><br><br><br><br><br><br><br><br><br>

|

||||

<br><br><br><br><br><br><br><br><br><br>

|

||||

<br><br><br><br><br><br><br><br><br><br>

|

||||

|

||||

Delight your customers with innovative machine learning features. MediaPipe

|

||||

contains everything that you need to customize and deploy to mobile (Android,

|

||||

iOS), web, desktop, edge devices, and IoT, effortlessly.

|

||||

--------------------------------------------------------------------------------

* [See demos](https://goo.gle/mediapipe-studio)
* [Learn more](https://developers.google.com/mediapipe/solutions)
## Live ML anywhere

## Get started
[MediaPipe](https://google.github.io/mediapipe/) offers cross-platform, customizable
ML solutions for live and streaming media.

You can get started with MediaPipe Solutions by by checking out any of the
developer guides for
[vision](https://developers.google.com/mediapipe/solutions/vision/object_detector),
[text](https://developers.google.com/mediapipe/solutions/text/text_classifier),
and
[audio](https://developers.google.com/mediapipe/solutions/audio/audio_classifier)
tasks. If you need help setting up a development environment for use with
MediaPipe Tasks, check out the setup guides for
[Android](https://developers.google.com/mediapipe/solutions/setup_android), [web
apps](https://developers.google.com/mediapipe/solutions/setup_web), and
[Python](https://developers.google.com/mediapipe/solutions/setup_python).
|
:------------------------------------------------------------------------------------------------------------: | :----------------------------------------------------:
***End-to-End acceleration***: *Built-in fast ML inference and processing accelerated even on common hardware* | ***Build once, deploy anywhere***: *Unified solution works across Android, iOS, desktop/cloud, web and IoT*
|
***Ready-to-use solutions***: *Cutting-edge ML solutions demonstrating full power of the framework* | ***Free and open source***: *Framework and solutions both under Apache 2.0, fully extensible and customizable*

## Solutions
----

MediaPipe Solutions provides a suite of libraries and tools for you to quickly
apply artificial intelligence (AI) and machine learning (ML) techniques in your
applications. You can plug these solutions into your applications immediately,
customize them to your needs, and use them across multiple development
platforms. MediaPipe Solutions is part of the MediaPipe [open source
project](https://github.com/google/mediapipe), so you can further customize the
solutions code to meet your application needs.
## ML solutions in MediaPipe

These libraries and resources provide the core functionality for each MediaPipe
Solution:
Face Detection | Face Mesh | Iris | Hands | Pose | Holistic
:----------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------: | :--------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------: | :------:
[](https://google.github.io/mediapipe/solutions/face_detection) | [](https://google.github.io/mediapipe/solutions/face_mesh) | [](https://google.github.io/mediapipe/solutions/iris) | [](https://google.github.io/mediapipe/solutions/hands) | [](https://google.github.io/mediapipe/solutions/pose) | [](https://google.github.io/mediapipe/solutions/holistic)

* **MediaPipe Tasks**: Cross-platform APIs and libraries for deploying
solutions. [Learn
more](https://developers.google.com/mediapipe/solutions/tasks).
* **MediaPipe models**: Pre-trained, ready-to-run models for use with each
solution.
Hair Segmentation | Object Detection | Box Tracking | Instant Motion Tracking | Objectron | KNIFT
:-------------------------------------------------------------------------------------------------------------------------------------: | :----------------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------------------: | :---------------------------------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------------: | :---:
[](https://google.github.io/mediapipe/solutions/hair_segmentation) | [](https://google.github.io/mediapipe/solutions/object_detection) | [](https://google.github.io/mediapipe/solutions/box_tracking) | [](https://google.github.io/mediapipe/solutions/instant_motion_tracking) | [](https://google.github.io/mediapipe/solutions/objectron) | [](https://google.github.io/mediapipe/solutions/knift)

These tools let you customize and evaluate solutions:
<!-- []() in the first cell is needed to preserve table formatting in GitHub Pages. -->
<!-- Whenever this table is updated, paste a copy to solutions/solutions.md. -->

* **MediaPipe Model Maker**: Customize models for solutions with your data.
[Learn more](https://developers.google.com/mediapipe/solutions/model_maker).
* **MediaPipe Studio**: Visualize, evaluate, and benchmark solutions in your
browser. [Learn
more](https://developers.google.com/mediapipe/solutions/studio).
[]() | [Android](https://google.github.io/mediapipe/getting_started/android) | [iOS](https://google.github.io/mediapipe/getting_started/ios) | [C++](https://google.github.io/mediapipe/getting_started/cpp) | [Python](https://google.github.io/mediapipe/getting_started/python) | [JS](https://google.github.io/mediapipe/getting_started/javascript) | [Coral](https://github.com/google/mediapipe/tree/master/mediapipe/examples/coral/README.md)
:---------------------------------------------------------------------------------------- | :-------------------------------------------------------------: | :-----------------------------------------------------: | :-----------------------------------------------------: | :-----------------------------------------------------------: | :-----------------------------------------------------------: | :--------------------------------------------------------------------:
[Face Detection](https://google.github.io/mediapipe/solutions/face_detection) | ✅ | ✅ | ✅ | ✅ | ✅ | ✅
[Face Mesh](https://google.github.io/mediapipe/solutions/face_mesh) | ✅ | ✅ | ✅ | ✅ | ✅ |
[Iris](https://google.github.io/mediapipe/solutions/iris) | ✅ | ✅ | ✅ | | |
[Hands](https://google.github.io/mediapipe/solutions/hands) | ✅ | ✅ | ✅ | ✅ | ✅ |
[Pose](https://google.github.io/mediapipe/solutions/pose) | ✅ | ✅ | ✅ | ✅ | ✅ |
[Holistic](https://google.github.io/mediapipe/solutions/holistic) | ✅ | ✅ | ✅ | ✅ | ✅ |
[Selfie Segmentation](https://google.github.io/mediapipe/solutions/selfie_segmentation) | ✅ | ✅ | ✅ | ✅ | ✅ |
[Hair Segmentation](https://google.github.io/mediapipe/solutions/hair_segmentation) | ✅ | | ✅ | | |
[Object Detection](https://google.github.io/mediapipe/solutions/object_detection) | ✅ | ✅ | ✅ | | | ✅
[Box Tracking](https://google.github.io/mediapipe/solutions/box_tracking) | ✅ | ✅ | ✅ | | |
[Instant Motion Tracking](https://google.github.io/mediapipe/solutions/instant_motion_tracking) | ✅ | | | | |
[Objectron](https://google.github.io/mediapipe/solutions/objectron) | ✅ | | ✅ | ✅ | ✅ |
[KNIFT](https://google.github.io/mediapipe/solutions/knift) | ✅ | | | | |
[AutoFlip](https://google.github.io/mediapipe/solutions/autoflip) | | | ✅ | | |
[MediaSequence](https://google.github.io/mediapipe/solutions/media_sequence) | | | ✅ | | |
[YouTube 8M](https://google.github.io/mediapipe/solutions/youtube_8m) | | | ✅ | | |

### Legacy solutions
See also
[MediaPipe Models and Model Cards](https://google.github.io/mediapipe/solutions/models)
for ML models released in MediaPipe.

We have ended support for [these MediaPipe Legacy Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)
as of March 1, 2023. All other MediaPipe Legacy Solutions will be upgraded to
a new MediaPipe Solution. See the [Solutions guide](https://developers.google.com/mediapipe/solutions/guide#legacy)
for details. The [code repository](https://github.com/google/mediapipe/tree/master/mediapipe)
and prebuilt binaries for all MediaPipe Legacy Solutions will continue to be
provided on an as-is basis.
## Getting started

For more on the legacy solutions, see the [documentation](https://github.com/google/mediapipe/tree/master/docs/solutions).
To start using MediaPipe
[solutions](https://google.github.io/mediapipe/solutions/solutions) with only a few
lines code, see example code and demos in
[MediaPipe in Python](https://google.github.io/mediapipe/getting_started/python) and
[MediaPipe in JavaScript](https://google.github.io/mediapipe/getting_started/javascript).

## Framework
To use MediaPipe in C++, Android and iOS, which allow further customization of
the [solutions](https://google.github.io/mediapipe/solutions/solutions) as well as
building your own, learn how to
[install](https://google.github.io/mediapipe/getting_started/install) MediaPipe and
start building example applications in
[C++](https://google.github.io/mediapipe/getting_started/cpp),
[Android](https://google.github.io/mediapipe/getting_started/android) and
[iOS](https://google.github.io/mediapipe/getting_started/ios).

To start using MediaPipe Framework, [install MediaPipe
Framework](https://developers.google.com/mediapipe/framework/getting_started/install)
and start building example applications in C++, Android, and iOS.
The source code is hosted in the
[MediaPipe Github repository](https://github.com/google/mediapipe), and you can
run code search using
[Google Open Source Code Search](https://cs.opensource.google/mediapipe/mediapipe).

[MediaPipe Framework](https://developers.google.com/mediapipe/framework) is the
low-level component used to build efficient on-device machine learning
pipelines, similar to the premade MediaPipe Solutions.

Before using MediaPipe Framework, familiarize yourself with the following key
[Framework
concepts](https://developers.google.com/mediapipe/framework/framework_concepts/overview.md):

* [Packets](https://developers.google.com/mediapipe/framework/framework_concepts/packets.md)
* [Graphs](https://developers.google.com/mediapipe/framework/framework_concepts/graphs.md)
* [Calculators](https://developers.google.com/mediapipe/framework/framework_concepts/calculators.md)

## Community

* [Slack community](https://mediapipe.page.link/joinslack) for MediaPipe
users.
* [Discuss](https://groups.google.com/forum/#!forum/mediapipe) - General
community discussion around MediaPipe.
* [Awesome MediaPipe](https://mediapipe.page.link/awesome-mediapipe) - A
curated list of awesome MediaPipe related frameworks, libraries and
software.

## Contributing

We welcome contributions. Please follow these
[guidelines](https://github.com/google/mediapipe/blob/master/CONTRIBUTING.md).

We use GitHub issues for tracking requests and bugs. Please post questions to
the MediaPipe Stack Overflow with a `mediapipe` tag.

## Resources

### Publications
## Publications

* [Bringing artworks to life with AR](https://developers.googleblog.com/2021/07/bringing-artworks-to-life-with-ar.html)
in Google Developers Blog

@@ -124,8 +102,7 @@ the MediaPipe Stack Overflow with a `mediapipe` tag.
* [SignAll SDK: Sign language interface using MediaPipe is now available for
developers](https://developers.googleblog.com/2021/04/signall-sdk-sign-language-interface-using-mediapipe-now-available.html)
in Google Developers Blog
* [MediaPipe Holistic - Simultaneous Face, Hand and Pose Prediction, on
Device](https://ai.googleblog.com/2020/12/mediapipe-holistic-simultaneous-face.html)
* [MediaPipe Holistic - Simultaneous Face, Hand and Pose Prediction, on Device](https://ai.googleblog.com/2020/12/mediapipe-holistic-simultaneous-face.html)
in Google AI Blog
* [Background Features in Google Meet, Powered by Web ML](https://ai.googleblog.com/2020/10/background-features-in-google-meet.html)
in Google AI Blog

@@ -153,6 +130,43 @@ the MediaPipe Stack Overflow with a `mediapipe` tag.
in Google AI Blog
* [MediaPipe: A Framework for Building Perception Pipelines](https://arxiv.org/abs/1906.08172)

### Videos
## Videos

* [YouTube Channel](https://www.youtube.com/c/MediaPipe)

## Events

* [MediaPipe Seattle Meetup, Google Building Waterside, 13 Feb 2020](https://mediapipe.page.link/seattle2020)
* [AI Nextcon 2020, 12-16 Feb 2020, Seattle](http://aisea20.xnextcon.com/)
* [MediaPipe Madrid Meetup, 16 Dec 2019](https://www.meetup.com/Madrid-AI-Developers-Group/events/266329088/)
* [MediaPipe London Meetup, Google 123 Building, 12 Dec 2019](https://www.meetup.com/London-AI-Tech-Talk/events/266329038)
* [ML Conference, Berlin, 11 Dec 2019](https://mlconference.ai/machine-learning-advanced-development/mediapipe-building-real-time-cross-platform-mobile-web-edge-desktop-video-audio-ml-pipelines/)
* [MediaPipe Berlin Meetup, Google Berlin, 11 Dec 2019](https://www.meetup.com/Berlin-AI-Tech-Talk/events/266328794/)
* [The 3rd Workshop on YouTube-8M Large Scale Video Understanding Workshop,
Seoul, Korea ICCV
2019](https://research.google.com/youtube8m/workshop2019/index.html)
* [AI DevWorld 2019, 10 Oct 2019, San Jose, CA](https://aidevworld.com)
* [Google Industry Workshop at ICIP 2019, 24 Sept 2019, Taipei, Taiwan](http://2019.ieeeicip.org/?action=page4&id=14#Google)
([presentation](https://docs.google.com/presentation/d/e/2PACX-1vRIBBbO_LO9v2YmvbHHEt1cwyqH6EjDxiILjuT0foXy1E7g6uyh4CesB2DkkEwlRDO9_lWfuKMZx98T/pub?start=false&loop=false&delayms=3000&slide=id.g556cc1a659_0_5))
* [Open sourced at CVPR 2019, 17~20 June, Long Beach, CA](https://sites.google.com/corp/view/perception-cv4arvr/mediapipe)

## Community

* [Awesome MediaPipe](https://mediapipe.page.link/awesome-mediapipe) - A
curated list of awesome MediaPipe related frameworks, libraries and software
* [Slack community](https://mediapipe.page.link/joinslack) for MediaPipe users
* [Discuss](https://groups.google.com/forum/#!forum/mediapipe) - General
community discussion around MediaPipe

## Alpha disclaimer

MediaPipe is currently in alpha at v0.7. We may be still making breaking API
changes and expect to get to stable APIs by v1.0.

## Contributing

We welcome contributions. Please follow these
[guidelines](https://github.com/google/mediapipe/blob/master/CONTRIBUTING.md).

We use GitHub issues for tracking requests and bugs. Please post questions to
the MediaPipe Stack Overflow with a `mediapipe` tag.

106 WORKSPACE

@@ -45,13 +45,12 @@ http_archive(
)

http_archive(
name = "rules_foreign_cc",
sha256 = "2a4d07cd64b0719b39a7c12218a3e507672b82a97b98c6a89d38565894cf7c51",
strip_prefix = "rules_foreign_cc-0.9.0",
url = "https://github.com/bazelbuild/rules_foreign_cc/archive/refs/tags/0.9.0.tar.gz",
name = "rules_foreign_cc",
strip_prefix = "rules_foreign_cc-0.1.0",
url = "https://github.com/bazelbuild/rules_foreign_cc/archive/0.1.0.zip",
)

load("@rules_foreign_cc//foreign_cc:repositories.bzl", "rules_foreign_cc_dependencies")
load("@rules_foreign_cc//:workspace_definitions.bzl", "rules_foreign_cc_dependencies")

rules_foreign_cc_dependencies()

@@ -73,9 +72,12 @@ http_archive(
http_archive(
name = "zlib",
build_file = "@//third_party:zlib.BUILD",
sha256 = "b3a24de97a8fdbc835b9833169501030b8977031bcb54b3b3ac13740f846ab30",
strip_prefix = "zlib-1.2.13",
url = "http://zlib.net/fossils/zlib-1.2.13.tar.gz",
sha256 = "c3e5e9fdd5004dcb542feda5ee4f0ff0744628baf8ed2dd5d66f8ca1197cb1a1",
strip_prefix = "zlib-1.2.11",
urls = [
"http://mirror.bazel.build/zlib.net/fossils/zlib-1.2.11.tar.gz",
"http://zlib.net/fossils/zlib-1.2.11.tar.gz", # 2017-01-15
],
patches = [
"@//third_party:zlib.diff",
],
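Each `http_archive` in this `WORKSPACE` pins its download with a `sha256` attribute, so the zlib entry above carries one digest per `strip_prefix` version (`zlib-1.2.13` and `zlib-1.2.11`). Bazel stops the fetch if the downloaded archive's SHA-256 does not match the pinned value. The Python sketch below reproduces only that comparison, using the standard `hashlib` module. `sha256_of` and `verify_archive` are hypothetical helpers, not Bazel APIs, and the error text does not reproduce Bazel's exact message.

```python
import hashlib
import os
import tempfile


def sha256_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Hex SHA-256 of a file, read in chunks so large archives need little memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_archive(path: str, expected_sha256: str) -> None:
    """Raise ValueError when the file does not match the pinned digest."""
    actual = sha256_of(path)
    if actual != expected_sha256.lower():
        raise ValueError(f"checksum was {actual} but wanted {expected_sha256}")


# Demo with a throwaway file whose SHA-256 is a well-known test vector (b"abc").
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"abc")
try:
    verify_archive(tmp.name, "ba7816bf8f01cfea414140de5dae2223b00361a396177a9cb410ff61f20015ad")
    print("digest matches")
finally:
    os.remove(tmp.name)
```

Streaming the file in 1 MiB chunks keeps memory use flat even for large source archives. Bumping a dependency's version without updating its `sha256` would fail this comparison in the same way.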

@@ -154,41 +156,22 @@ http_archive(
# 2020-08-21
http_archive(
name = "com_github_glog_glog",
strip_prefix = "glog-0.6.0",
sha256 = "8a83bf982f37bb70825df71a9709fa90ea9f4447fb3c099e1d720a439d88bad6",
strip_prefix = "glog-0a2e5931bd5ff22fd3bf8999eb8ce776f159cda6",
sha256 = "58c9b3b6aaa4dd8b836c0fd8f65d0f941441fb95e27212c5eeb9979cfd3592ab",
urls = [
"https://github.com/google/glog/archive/v0.6.0.tar.gz",
"https://github.com/google/glog/archive/0a2e5931bd5ff22fd3bf8999eb8ce776f159cda6.zip",
],
)
http_archive(
name = "com_github_glog_glog_no_gflags",
strip_prefix = "glog-0.6.0",
sha256 = "8a83bf982f37bb70825df71a9709fa90ea9f4447fb3c099e1d720a439d88bad6",
strip_prefix = "glog-0a2e5931bd5ff22fd3bf8999eb8ce776f159cda6",
sha256 = "58c9b3b6aaa4dd8b836c0fd8f65d0f941441fb95e27212c5eeb9979cfd3592ab",
build_file = "@//third_party:glog_no_gflags.BUILD",
urls = [
"https://github.com/google/glog/archive/v0.6.0.tar.gz",
"https://github.com/google/glog/archive/0a2e5931bd5ff22fd3bf8999eb8ce776f159cda6.zip",
],
patches = [
"@//third_party:com_github_glog_glog.diff",
],
patch_args = [
"-p1",
],
)

# 2023-06-05
# This version of Glog is required for Windows support, but currently causes
# crashes on some Android devices.
http_archive(
name = "com_github_glog_glog_windows",
strip_prefix = "glog-3a0d4d22c5ae0b9a2216988411cfa6bf860cc372",
sha256 = "170d08f80210b82d95563f4723a15095eff1aad1863000e8eeb569c96a98fefb",
urls = [
"https://github.com/google/glog/archive/3a0d4d22c5ae0b9a2216988411cfa6bf860cc372.zip",
],
patches = [
"@//third_party:com_github_glog_glog.diff",
"@//third_party:com_github_glog_glog_windows_patch.diff",
"@//third_party:com_github_glog_glog_9779e5ea6ef59562b030248947f787d1256132ae.diff",
],
patch_args = [
"-p1",

@@ -244,24 +227,16 @@ http_archive(
# sentencepiece
http_archive(
name = "com_google_sentencepiece",
strip_prefix = "sentencepiece-0.1.96",
sha256 = "8409b0126ebd62b256c685d5757150cf7fcb2b92a2f2b98efb3f38fc36719754",
strip_prefix = "sentencepiece-1.0.0",
sha256 = "c05901f30a1d0ed64cbcf40eba08e48894e1b0e985777217b7c9036cac631346",
urls = [
"https://github.com/google/sentencepiece/archive/refs/tags/v0.1.96.zip"
"https://github.com/google/sentencepiece/archive/1.0.0.zip",
],
patches = [
"@//third_party:com_google_sentencepiece_no_gflag_no_gtest.diff",
],
build_file = "@//third_party:sentencepiece.BUILD",
patches = ["@//third_party:com_google_sentencepiece.diff"],
patch_args = ["-p1"],
)

http_archive(
name = "darts_clone",
build_file = "@//third_party:darts_clone.BUILD",
sha256 = "c97f55d05c98da6fcaf7f9ecc6a6dc6bc5b18b8564465f77abff8879d446491c",
strip_prefix = "darts-clone-e40ce4627526985a7767444b6ed6893ab6ff8983",
urls = [
"https://github.com/s-yata/darts-clone/archive/e40ce4627526985a7767444b6ed6893ab6ff8983.zip",
],
repo_mapping = {"@com_google_glog" : "@com_github_glog_glog_no_gflags"},
)

http_archive(

@@ -281,10 +256,10 @@ http_archive(

http_archive(
name = "com_googlesource_code_re2",
sha256 = "ef516fb84824a597c4d5d0d6d330daedb18363b5a99eda87d027e6bdd9cba299",
strip_prefix = "re2-03da4fc0857c285e3a26782f6bc8931c4c950df4",
sha256 = "e06b718c129f4019d6e7aa8b7631bee38d3d450dd980246bfaf493eb7db67868",
strip_prefix = "re2-fe4a310131c37f9a7e7f7816fa6ce2a8b27d65a8",
urls = [
"https://github.com/google/re2/archive/03da4fc0857c285e3a26782f6bc8931c4c950df4.tar.gz",
"https://github.com/google/re2/archive/fe4a310131c37f9a7e7f7816fa6ce2a8b27d65a8.tar.gz",
],
)

@@ -390,22 +365,6 @@ http_archive(
url = "https://github.com/opencv/opencv/releases/download/3.2.0/opencv-3.2.0-ios-framework.zip",
)

# Building an opencv.xcframework from the OpenCV 4.5.3 sources is necessary for
# MediaPipe iOS Task Libraries to be supported on arm64(M1) Macs. An
# `opencv.xcframework` archive has not been released and it is recommended to
# build the same from source using a script provided in OpenCV 4.5.0 upwards.
# OpenCV is fixed to version to 4.5.3 since swift support can only be disabled
# from 4.5.3 upwards. This is needed to avoid errors when the library is linked
# in Xcode. Swift support will be added in when the final binary MediaPipe iOS
# Task libraries are built.
http_archive(
name = "ios_opencv_source",
sha256 = "a61e7a4618d353140c857f25843f39b2abe5f451b018aab1604ef0bc34cd23d5",
build_file = "@//third_party:opencv_ios_source.BUILD",
type = "zip",
url = "https://github.com/opencv/opencv/archive/refs/tags/4.5.3.zip",
)

http_archive(
name = "stblib",
strip_prefix = "stb-b42009b3b9d4ca35bc703f5310eedc74f584be58",

@@ -499,10 +458,9 @@ http_archive(
)

# TensorFlow repo should always go after the other external dependencies.
# TF on 2023-07-26.
_TENSORFLOW_GIT_COMMIT = "e92261fd4cec0b726692081c4d2966b75abf31dd"
# curl -L https://github.com/tensorflow/tensorflow/archive/<TENSORFLOW_GIT_COMMIT>.tar.gz | shasum -a 256
_TENSORFLOW_SHA256 = "478a229bd4ec70a5b568ac23b5ea013d9fca46a47d6c43e30365a0412b9febf4"
# TF on 2023-03-08.
_TENSORFLOW_GIT_COMMIT = "24f7ee636d62e1f8d8330357f8bbd65956dfb84d"
_TENSORFLOW_SHA256 = "7f8a96dd99215c0cdc77230d3dbce43e60102b64a89203ad04aa09b0a187a4bd"
http_archive(
name = "org_tensorflow",
urls = [

@@ -510,12 +468,8 @@ http_archive(
],
patches = [
"@//third_party:org_tensorflow_compatibility_fixes.diff",
"@//third_party:org_tensorflow_system_python.diff",
# Diff is generated with a script, don't update it manually.
"@//third_party:org_tensorflow_custom_ops.diff",
# Works around Bazel issue with objc_library.
# See https://github.com/bazelbuild/bazel/issues/19912
"@//third_party:org_tensorflow_objc_build_fixes.diff",
],
patch_args = [
"-p1",
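The comment above `_TENSORFLOW_SHA256` records how the pinned digest is produced: the archive is streamed through `shasum -a 256`. As a minimal sketch, assuming the archive has already been downloaded to a local file, the same digest can be computed with Python's standard `hashlib` module and compared against a pinned value. `sha256_of_file` and `verify_archive` are hypothetical helper names, not part of MediaPipe or Bazel; Bazel's `http_archive` performs its own comparable check against the `sha256` attribute when it fetches an archive.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Returns the lowercase hex SHA-256 digest of the file at `path`.

    Produces the same hex string that `shasum -a 256 <file>` prints before
    the file name.
    """
    digest = hashlib.sha256()
    with path.open("rb") as f:
        # Read fixed-size chunks so large archives never need to fit in memory.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_archive(path: Path, expected_sha256: str) -> bool:
    """Hypothetical helper: True if the file matches the pinned checksum."""
    # Normalize case and whitespace so a pasted checksum still compares equal.
    return sha256_of_file(path) == expected_sha256.strip().lower()
```

For instance, calling `verify_archive` on a locally downloaded TensorFlow tarball (a hypothetical path) with the `_TENSORFLOW_SHA256` string would return `True` only when the file matches the digest pinned in the WORKSPACE. Streaming in chunks keeps memory use flat regardless of archive size.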

@@ -1,342 +0,0 @@
// !$*UTF8*$!
{
archiveVersion = 1;
classes = {
};
objectVersion = 56;
objects = {

/* Begin PBXBuildFile section */
8566B55D2ABABF9A00AAB22A /* MediaPipeTasksDocGen.h in Headers */ = {isa = PBXBuildFile; fileRef = 8566B55C2ABABF9A00AAB22A /* MediaPipeTasksDocGen.h */; settings = {ATTRIBUTES = (Public, ); }; };
/* End PBXBuildFile section */

/* Begin PBXFileReference section */
8566B5592ABABF9A00AAB22A /* MediaPipeTasksDocGen.framework */ = {isa = PBXFileReference; explicitFileType = wrapper.framework; includeInIndex = 0; path = MediaPipeTasksDocGen.framework; sourceTree = BUILT_PRODUCTS_DIR; };
8566B55C2ABABF9A00AAB22A /* MediaPipeTasksDocGen.h */ = {isa = PBXFileReference; lastKnownFileType = sourcecode.c.h; path = MediaPipeTasksDocGen.h; sourceTree = "<group>"; };
/* End PBXFileReference section */

/* Begin PBXFrameworksBuildPhase section */
8566B5562ABABF9A00AAB22A /* Frameworks */ = {
isa = PBXFrameworksBuildPhase;
buildActionMask = 2147483647;
files = (
);
runOnlyForDeploymentPostprocessing = 0;
};
/* End PBXFrameworksBuildPhase section */

/* Begin PBXGroup section */
8566B54F2ABABF9A00AAB22A = {
isa = PBXGroup;
children = (
8566B55B2ABABF9A00AAB22A /* MediaPipeTasksDocGen */,
8566B55A2ABABF9A00AAB22A /* Products */,
);
sourceTree = "<group>";
};
8566B55A2ABABF9A00AAB22A /* Products */ = {
isa = PBXGroup;
children = (
8566B5592ABABF9A00AAB22A /* MediaPipeTasksDocGen.framework */,
);
name = Products;
sourceTree = "<group>";
};
8566B55B2ABABF9A00AAB22A /* MediaPipeTasksDocGen */ = {
isa = PBXGroup;
children = (
8566B55C2ABABF9A00AAB22A /* MediaPipeTasksDocGen.h */,
);
path = MediaPipeTasksDocGen;
sourceTree = "<group>";
};
/* End PBXGroup section */

/* Begin PBXHeadersBuildPhase section */
8566B5542ABABF9A00AAB22A /* Headers */ = {
isa = PBXHeadersBuildPhase;
buildActionMask = 2147483647;
files = (
8566B55D2ABABF9A00AAB22A /* MediaPipeTasksDocGen.h in Headers */,
);
runOnlyForDeploymentPostprocessing = 0;
};
/* End PBXHeadersBuildPhase section */

/* Begin PBXNativeTarget section */
8566B5582ABABF9A00AAB22A /* MediaPipeTasksDocGen */ = {
isa = PBXNativeTarget;
buildConfigurationList = 8566B5602ABABF9A00AAB22A /* Build configuration list for PBXNativeTarget "MediaPipeTasksDocGen" */;
buildPhases = (
8566B5542ABABF9A00AAB22A /* Headers */,
8566B5552ABABF9A00AAB22A /* Sources */,
8566B5562ABABF9A00AAB22A /* Frameworks */,
8566B5572ABABF9A00AAB22A /* Resources */,
);
buildRules = (
);
dependencies = (
);
name = MediaPipeTasksDocGen;
productName = MediaPipeTasksDocGen;
productReference = 8566B5592ABABF9A00AAB22A /* MediaPipeTasksDocGen.framework */;
productType = "com.apple.product-type.framework";
};
/* End PBXNativeTarget section */

/* Begin PBXProject section */
8566B5502ABABF9A00AAB22A /* Project object */ = {
isa = PBXProject;
attributes = {
BuildIndependentTargetsInParallel = 1;
LastUpgradeCheck = 1430;
TargetAttributes = {
8566B5582ABABF9A00AAB22A = {
CreatedOnToolsVersion = 14.3.1;
};
};
};
buildConfigurationList = 8566B5532ABABF9A00AAB22A /* Build configuration list for PBXProject "MediaPipeTasksDocGen" */;
compatibilityVersion = "Xcode 14.0";
developmentRegion = en;
hasScannedForEncodings = 0;
knownRegions = (
en,
Base,
);
mainGroup = 8566B54F2ABABF9A00AAB22A;
productRefGroup = 8566B55A2ABABF9A00AAB22A /* Products */;
projectDirPath = "";
projectRoot = "";
targets = (
8566B5582ABABF9A00AAB22A /* MediaPipeTasksDocGen */,
);
};
/* End PBXProject section */

/* Begin PBXResourcesBuildPhase section */
8566B5572ABABF9A00AAB22A /* Resources */ = {
isa = PBXResourcesBuildPhase;
buildActionMask = 2147483647;
files = (
);
runOnlyForDeploymentPostprocessing = 0;
};
/* End PBXResourcesBuildPhase section */

/* Begin PBXSourcesBuildPhase section */
8566B5552ABABF9A00AAB22A /* Sources */ = {
isa = PBXSourcesBuildPhase;
buildActionMask = 2147483647;
files = (
);
runOnlyForDeploymentPostprocessing = 0;
};
/* End PBXSourcesBuildPhase section */

/* Begin XCBuildConfiguration section */
8566B55E2ABABF9A00AAB22A /* Debug */ = {
isa = XCBuildConfiguration;
buildSettings = {
ALWAYS_SEARCH_USER_PATHS = NO;
CLANG_ANALYZER_NONNULL = YES;
CLANG_ANALYZER_NUMBER_OBJECT_CONVERSION = YES_AGGRESSIVE;
CLANG_CXX_LANGUAGE_STANDARD = "gnu++20";
CLANG_ENABLE_MODULES = YES;
CLANG_ENABLE_OBJC_ARC = YES;
CLANG_ENABLE_OBJC_WEAK = YES;
CLANG_WARN_BLOCK_CAPTURE_AUTORELEASING = YES;
CLANG_WARN_BOOL_CONVERSION = YES;
CLANG_WARN_COMMA = YES;
CLANG_WARN_CONSTANT_CONVERSION = YES;
CLANG_WARN_DEPRECATED_OBJC_IMPLEMENTATIONS = YES;
CLANG_WARN_DIRECT_OBJC_ISA_USAGE = YES_ERROR;
CLANG_WARN_DOCUMENTATION_COMMENTS = YES;
CLANG_WARN_EMPTY_BODY = YES;
CLANG_WARN_ENUM_CONVERSION = YES;
CLANG_WARN_INFINITE_RECURSION = YES;
CLANG_WARN_INT_CONVERSION = YES;
CLANG_WARN_NON_LITERAL_NULL_CONVERSION = YES;
CLANG_WARN_OBJC_IMPLICIT_RETAIN_SELF = YES;
CLANG_WARN_OBJC_LITERAL_CONVERSION = YES;
CLANG_WARN_OBJC_ROOT_CLASS = YES_ERROR;
CLANG_WARN_QUOTED_INCLUDE_IN_FRAMEWORK_HEADER = YES;
CLANG_WARN_RANGE_LOOP_ANALYSIS = YES;
CLANG_WARN_STRICT_PROTOTYPES = YES;
CLANG_WARN_SUSPICIOUS_MOVE = YES;
CLANG_WARN_UNGUARDED_AVAILABILITY = YES_AGGRESSIVE;
CLANG_WARN_UNREACHABLE_CODE = YES;
CLANG_WARN__DUPLICATE_METHOD_MATCH = YES;
COPY_PHASE_STRIP = NO;
CURRENT_PROJECT_VERSION = 1;
DEBUG_INFORMATION_FORMAT = dwarf;
ENABLE_STRICT_OBJC_MSGSEND = YES;
ENABLE_TESTABILITY = YES;
GCC_C_LANGUAGE_STANDARD = gnu11;
GCC_DYNAMIC_NO_PIC = NO;
GCC_NO_COMMON_BLOCKS = YES;
GCC_OPTIMIZATION_LEVEL = 0;
GCC_PREPROCESSOR_DEFINITIONS = (
"DEBUG=1",
"$(inherited)",
);
GCC_WARN_64_TO_32_BIT_CONVERSION = YES;
GCC_WARN_ABOUT_RETURN_TYPE = YES_ERROR;
GCC_WARN_UNDECLARED_SELECTOR = YES;
GCC_WARN_UNINITIALIZED_AUTOS = YES_AGGRESSIVE;
GCC_WARN_UNUSED_FUNCTION = YES;
GCC_WARN_UNUSED_VARIABLE = YES;
IPHONEOS_DEPLOYMENT_TARGET = 16.4;
MTL_ENABLE_DEBUG_INFO = INCLUDE_SOURCE;
MTL_FAST_MATH = YES;
ONLY_ACTIVE_ARCH = YES;
SDKROOT = iphoneos;
SWIFT_ACTIVE_COMPILATION_CONDITIONS = DEBUG;
SWIFT_OPTIMIZATION_LEVEL = "-Onone";
VERSIONING_SYSTEM = "apple-generic";
VERSION_INFO_PREFIX = "";
};
name = Debug;
};
8566B55F2ABABF9A00AAB22A /* Release */ = {
isa = XCBuildConfiguration;
buildSettings = {
ALWAYS_SEARCH_USER_PATHS = NO;
CLANG_ANALYZER_NONNULL = YES;
CLANG_ANALYZER_NUMBER_OBJECT_CONVERSION = YES_AGGRESSIVE;
CLANG_CXX_LANGUAGE_STANDARD = "gnu++20";
CLANG_ENABLE_MODULES = YES;
CLANG_ENABLE_OBJC_ARC = YES;
CLANG_ENABLE_OBJC_WEAK = YES;
CLANG_WARN_BLOCK_CAPTURE_AUTORELEASING = YES;
CLANG_WARN_BOOL_CONVERSION = YES;
CLANG_WARN_COMMA = YES;
CLANG_WARN_CONSTANT_CONVERSION = YES;
CLANG_WARN_DEPRECATED_OBJC_IMPLEMENTATIONS = YES;
CLANG_WARN_DIRECT_OBJC_ISA_USAGE = YES_ERROR;
CLANG_WARN_DOCUMENTATION_COMMENTS = YES;
CLANG_WARN_EMPTY_BODY = YES;
CLANG_WARN_ENUM_CONVERSION = YES;
CLANG_WARN_INFINITE_RECURSION = YES;
CLANG_WARN_INT_CONVERSION = YES;
CLANG_WARN_NON_LITERAL_NULL_CONVERSION = YES;
CLANG_WARN_OBJC_IMPLICIT_RETAIN_SELF = YES;
CLANG_WARN_OBJC_LITERAL_CONVERSION = YES;
CLANG_WARN_OBJC_ROOT_CLASS = YES_ERROR;
CLANG_WARN_QUOTED_INCLUDE_IN_FRAMEWORK_HEADER = YES;
CLANG_WARN_RANGE_LOOP_ANALYSIS = YES;
CLANG_WARN_STRICT_PROTOTYPES = YES;
CLANG_WARN_SUSPICIOUS_MOVE = YES;
CLANG_WARN_UNGUARDED_AVAILABILITY = YES_AGGRESSIVE;
CLANG_WARN_UNREACHABLE_CODE = YES;
CLANG_WARN__DUPLICATE_METHOD_MATCH = YES;
COPY_PHASE_STRIP = NO;
CURRENT_PROJECT_VERSION = 1;
DEBUG_INFORMATION_FORMAT = "dwarf-with-dsym";
ENABLE_NS_ASSERTIONS = NO;
ENABLE_STRICT_OBJC_MSGSEND = YES;
GCC_C_LANGUAGE_STANDARD = gnu11;
GCC_NO_COMMON_BLOCKS = YES;
GCC_WARN_64_TO_32_BIT_CONVERSION = YES;
GCC_WARN_ABOUT_RETURN_TYPE = YES_ERROR;
GCC_WARN_UNDECLARED_SELECTOR = YES;
GCC_WARN_UNINITIALIZED_AUTOS = YES_AGGRESSIVE;
GCC_WARN_UNUSED_FUNCTION = YES;
GCC_WARN_UNUSED_VARIABLE = YES;
IPHONEOS_DEPLOYMENT_TARGET = 16.4;
MTL_ENABLE_DEBUG_INFO = NO;
MTL_FAST_MATH = YES;
SDKROOT = iphoneos;
SWIFT_COMPILATION_MODE = wholemodule;
SWIFT_OPTIMIZATION_LEVEL = "-O";
VALIDATE_PRODUCT = YES;
VERSIONING_SYSTEM = "apple-generic";
VERSION_INFO_PREFIX = "";
};
name = Release;
};
8566B5612ABABF9A00AAB22A /* Debug */ = {
isa = XCBuildConfiguration;
buildSettings = {
CODE_SIGN_STYLE = Automatic;
CURRENT_PROJECT_VERSION = 1;
DEFINES_MODULE = YES;
DYLIB_COMPATIBILITY_VERSION = 1;
DYLIB_CURRENT_VERSION = 1;
DYLIB_INSTALL_NAME_BASE = "@rpath";
ENABLE_MODULE_VERIFIER = YES;
GENERATE_INFOPLIST_FILE = YES;
INFOPLIST_KEY_NSHumanReadableCopyright = "";
INSTALL_PATH = "$(LOCAL_LIBRARY_DIR)/Frameworks";
LD_RUNPATH_SEARCH_PATHS = (
"$(inherited)",
"@executable_path/Frameworks",
"@loader_path/Frameworks",
);
MARKETING_VERSION = 1.0;
MODULE_VERIFIER_SUPPORTED_LANGUAGES = "objective-c objective-c++";
MODULE_VERIFIER_SUPPORTED_LANGUAGE_STANDARDS = "gnu11 gnu++20";
PRODUCT_BUNDLE_IDENTIFIER = com.google.mediapipe.MediaPipeTasksDocGen;
PRODUCT_NAME = "$(TARGET_NAME:c99extidentifier)";
SKIP_INSTALL = YES;
SWIFT_EMIT_LOC_STRINGS = YES;
SWIFT_VERSION = 5.0;
TARGETED_DEVICE_FAMILY = "1,2";
};
name = Debug;
};
8566B5622ABABF9A00AAB22A /* Release */ = {
isa = XCBuildConfiguration;
buildSettings = {
CODE_SIGN_STYLE = Automatic;
CURRENT_PROJECT_VERSION = 1;
DEFINES_MODULE = YES;
DYLIB_COMPATIBILITY_VERSION = 1;
DYLIB_CURRENT_VERSION = 1;
DYLIB_INSTALL_NAME_BASE = "@rpath";
ENABLE_MODULE_VERIFIER = YES;
GENERATE_INFOPLIST_FILE = YES;
INFOPLIST_KEY_NSHumanReadableCopyright = "";
INSTALL_PATH = "$(LOCAL_LIBRARY_DIR)/Frameworks";
LD_RUNPATH_SEARCH_PATHS = (
"$(inherited)",
"@executable_path/Frameworks",
"@loader_path/Frameworks",
);
MARKETING_VERSION = 1.0;
MODULE_VERIFIER_SUPPORTED_LANGUAGES = "objective-c objective-c++";
MODULE_VERIFIER_SUPPORTED_LANGUAGE_STANDARDS = "gnu11 gnu++20";
PRODUCT_BUNDLE_IDENTIFIER = com.google.mediapipe.MediaPipeTasksDocGen;
PRODUCT_NAME = "$(TARGET_NAME:c99extidentifier)";
SKIP_INSTALL = YES;
SWIFT_EMIT_LOC_STRINGS = YES;
SWIFT_VERSION = 5.0;
TARGETED_DEVICE_FAMILY = "1,2";
};
name = Release;
};
/* End XCBuildConfiguration section */

/* Begin XCConfigurationList section */
8566B5532ABABF9A00AAB22A /* Build configuration list for PBXProject "MediaPipeTasksDocGen" */ = {
isa = XCConfigurationList;
buildConfigurations = (
8566B55E2ABABF9A00AAB22A /* Debug */,
8566B55F2ABABF9A00AAB22A /* Release */,
);
defaultConfigurationIsVisible = 0;
defaultConfigurationName = Release;
};
8566B5602ABABF9A00AAB22A /* Build configuration list for PBXNativeTarget "MediaPipeTasksDocGen" */ = {
isa = XCConfigurationList;
buildConfigurations = (
8566B5612ABABF9A00AAB22A /* Debug */,
8566B5622ABABF9A00AAB22A /* Release */,
);
defaultConfigurationIsVisible = 0;
defaultConfigurationName = Release;
};
/* End XCConfigurationList section */
};
rootObject = 8566B5502ABABF9A00AAB22A /* Project object */;
}

@@ -1,7 +0,0 @@
<?xml version="1.0" encoding="UTF-8"?>
<Workspace
version = "1.0">
<FileRef
location = "self:">
</FileRef>
</Workspace>

@@ -1,8 +0,0 @@
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">
<plist version="1.0">
<dict>
<key>IDEDidComputeMac32BitWarning</key>
<true/>
</dict>
</plist>

Binary file not shown.

@@ -1,14 +0,0 @@
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">
<plist version="1.0">
<dict>
<key>SchemeUserState</key>
<dict>
<key>MediaPipeTasksDocGen.xcscheme_^#shared#^_</key>
<dict>
<key>orderHint</key>
<integer>0</integer>
</dict>
</dict>
</dict>
</plist>

@@ -1,17 +0,0 @@
//
// MediaPipeTasksDocGen.h
// MediaPipeTasksDocGen
//
// Created by Mark McDonald on 20/9/2023.
//

#import <Foundation/Foundation.h>

//! Project version number for MediaPipeTasksDocGen.
FOUNDATION_EXPORT double MediaPipeTasksDocGenVersionNumber;

//! Project version string for MediaPipeTasksDocGen.
FOUNDATION_EXPORT const unsigned char MediaPipeTasksDocGenVersionString[];

// In this header, you should import all the public headers of your framework using statements like
// #import <MediaPipeTasksDocGen/PublicHeader.h>

@@ -1,11 +0,0 @@
# Uncomment the next line to define a global platform for your project
platform :ios, '15.0'

target 'MediaPipeTasksDocGen' do
# Comment the next line if you don't want to use dynamic frameworks
use_frameworks!

# Pods for MediaPipeTasksDocGen
pod 'MediaPipeTasksText'
pod 'MediaPipeTasksVision'
end

@@ -1,9 +0,0 @@
# MediaPipeTasksDocGen

This empty project is used to generate reference documentation for the
ObjectiveC and Swift libraries.

Docs are generated using [Jazzy](https://github.com/realm/jazzy) and published
to [the developer site](https://developers.google.com/mediapipe/solutions/).

To bump the API version used, edit [`Podfile`](./Podfile).

@@ -1,4 +1,4 @@
# Copyright 2022 The MediaPipe Authors.
# Copyright 2022 The MediaPipe Authors. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.

@@ -14,7 +14,6 @@
# ==============================================================================
"""Generate Java reference docs for MediaPipe."""
import pathlib
import shutil

from absl import app
from absl import flags

@@ -42,9 +41,7 @@ def main(_) -> None:
mp_root = pathlib.Path(__file__)
while (mp_root := mp_root.parent).name != 'mediapipe':
# Find the nearest `mediapipe` dir.
if not mp_root.name:
# We've hit the filesystem root - abort.
raise FileNotFoundError('"mediapipe" root not found')
pass

# Find the root from which all packages are relative.
root = mp_root.parent

@@ -54,14 +51,6 @@ def main(_) -> None:
if (mp_root / 'mediapipe').exists():
mp_root = mp_root / 'mediapipe'

# We need to copy this into the tasks dir to ensure we don't leave broken
# links in the generated docs.
old_api_dir = 'java/com/google/mediapipe/framework/image'
shutil.copytree(
mp_root / old_api_dir,
mp_root / 'tasks' / old_api_dir,
dirs_exist_ok=True)

gen_java.gen_java_docs(
package='com.google.mediapipe',
source_path=mp_root / 'tasks/java',
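The `main` function in the docs script locates the MediaPipe source tree by walking up from `__file__` with an assignment expression until it reaches a directory named `mediapipe`, raising `FileNotFoundError` once it reaches the filesystem root. A minimal standalone sketch of that search follows, assuming only the standard `pathlib` module; `find_mediapipe_root` is a hypothetical name, and the sketch omits the script's later check for a nested `mediapipe/mediapipe` directory. The loop terminates because the root path's `.name` is the empty string.

```python
import pathlib


def find_mediapipe_root(start: pathlib.Path) -> pathlib.Path:
    """Returns the nearest proper ancestor of `start` named 'mediapipe'.

    Hypothetical helper modeled on the search loop in the docs script.
    """
    mp_root = start
    # Step to the parent first, then test its name (assignment expression).
    while (mp_root := mp_root.parent).name != 'mediapipe':
        # The filesystem root (and '.') have an empty name: stop searching.
        if not mp_root.name:
            raise FileNotFoundError('"mediapipe" root not found')
    return mp_root
```

Called with `pathlib.Path(__file__)`, as the script does, this returns the closest enclosing `mediapipe` directory; the search never inspects the disk, so it only depends on the path's components.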

@@ -1,4 +1,4 @@
# Copyright 2022 The MediaPipe Authors.
# Copyright 2022 The MediaPipe Authors. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.

@@ -1,4 +1,4 @@
# Copyright 2022 The MediaPipe Authors.
# Copyright 2022 The MediaPipe Authors. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.

@@ -50,7 +50,7 @@ as the primary developer documentation site for MediaPipe as of April 3, 2023.*
3. The [`hello world`] example uses a simple MediaPipe graph in the
`PrintHelloWorld()` function, defined in a [`CalculatorGraphConfig`] proto.

```c++
```C++
absl::Status PrintHelloWorld() {
// Configures a simple graph, which concatenates 2 PassThroughCalculators.
CalculatorGraphConfig config = ParseTextProtoOrDie<CalculatorGraphConfig>(R"(

@@ -126,7 +126,7 @@ as the primary developer documentation site for MediaPipe as of April 3, 2023.*
```c++
mediapipe::Packet packet;
while (poller.Next(&packet)) {
ABSL_LOG(INFO) << packet.Get<string>();
LOG(INFO) << packet.Get<string>();
}
```

@@ -138,7 +138,7 @@ Create a `BUILD` file in the `$APPLICATION_PATH` and add the following build
rules:

```
MIN_IOS_VERSION = "12.0"
MIN_IOS_VERSION = "11.0"

load(
"@build_bazel_rules_apple//apple:ios.bzl",

200
docs/index.md

@@ -1,121 +1,99 @@
---
layout: forward
target: https://developers.google.com/mediapipe
layout: default
title: Home
nav_order: 1