Merged with master

commit

f86188f8e1

195

README.md

|

|

@@ -4,8 +4,6 @@ title: Home

|

|||

nav_order: 1

|

||||

---

|

||||

|

||||

|

||||

|

||||

----

|

||||

|

||||

**Attention:** *Thanks for your interest in MediaPipe! We have moved to

|

||||

|

|

@@ -14,86 +12,111 @@ as the primary developer documentation site for MediaPipe as of April 3, 2023.*

|

|||

|

||||

*This notice and web page will be removed on June 1, 2023.*

|

||||

|

||||

----

|

||||

|

||||

|

||||

<br><br><br><br><br><br><br><br><br><br>

|

||||

<br><br><br><br><br><br><br><br><br><br>

|

||||

<br><br><br><br><br><br><br><br><br><br>

|

||||

**Attention**: MediaPipe Solutions Preview is an early release. [Learn

|

||||

more](https://developers.google.com/mediapipe/solutions/about#notice).

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

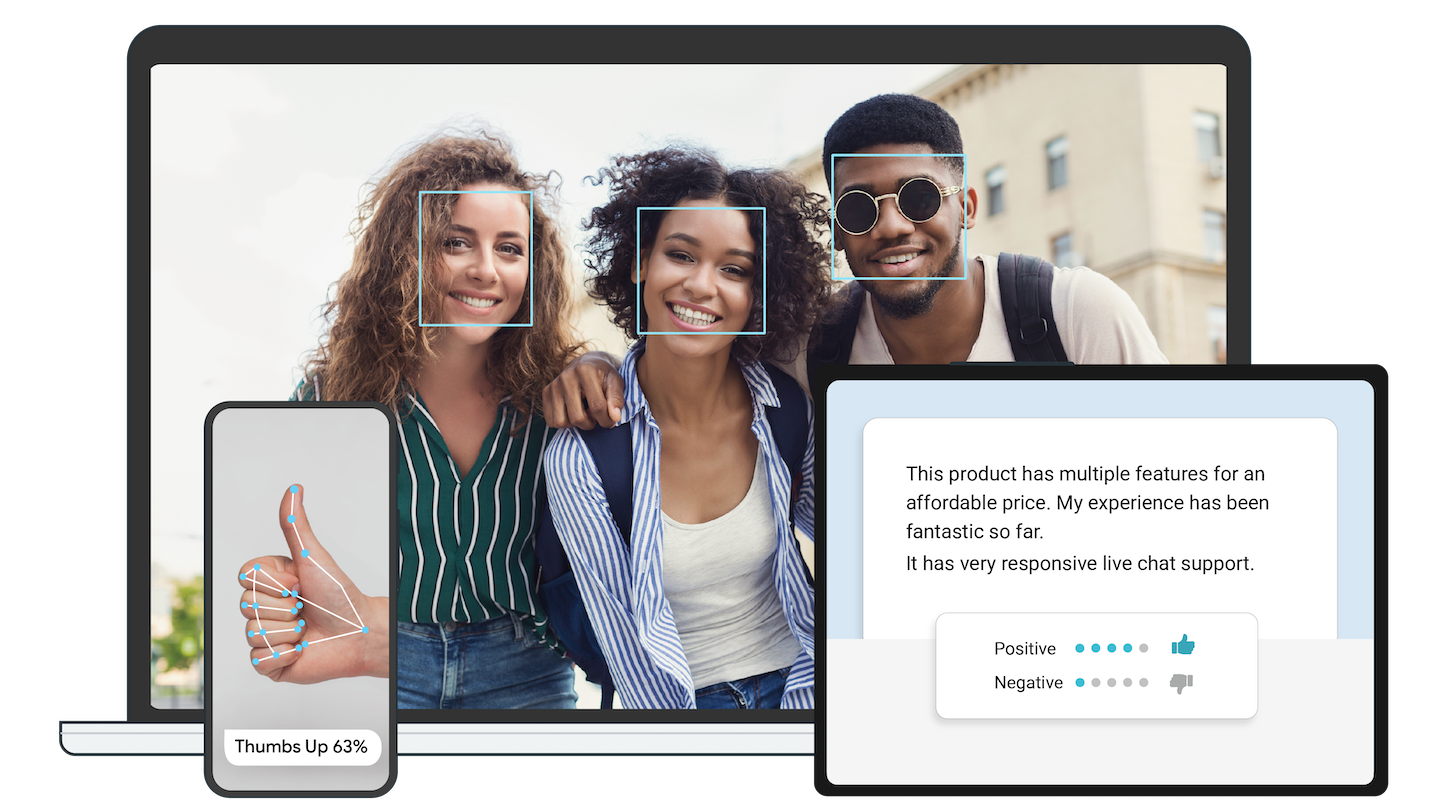

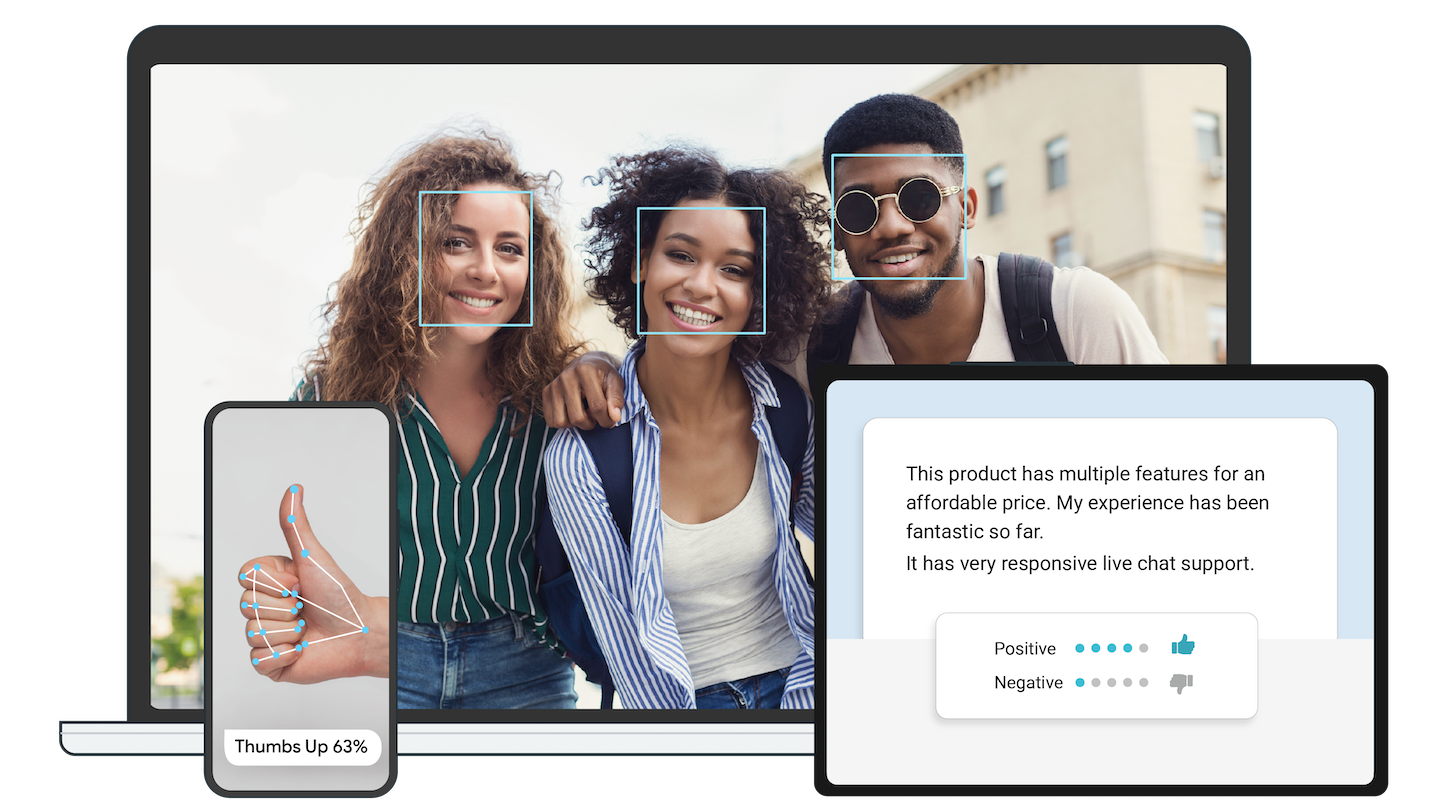

**On-device machine learning for everyone**

|

||||

|

||||

## Live ML anywhere

|

||||

Delight your customers with innovative machine learning features. MediaPipe

|

||||

contains everything that you need to customize and deploy to mobile (Android,

|

||||

iOS), web, desktop, edge devices, and IoT, effortlessly.

|

||||

|

||||

[MediaPipe](https://google.github.io/mediapipe/) offers cross-platform, customizable

|

||||

ML solutions for live and streaming media.

|

||||

* [See demos](https://goo.gle/mediapipe-studio)

|

||||

* [Learn more](https://developers.google.com/mediapipe/solutions)

|

||||

|

||||

|

|

||||

:------------------------------------------------------------------------------------------------------------: | :----------------------------------------------------:

|

||||

***End-to-End acceleration***: *Built-in fast ML inference and processing accelerated even on common hardware* | ***Build once, deploy anywhere***: *Unified solution works across Android, iOS, desktop/cloud, web and IoT*

|

||||

|

|

||||

***Ready-to-use solutions***: *Cutting-edge ML solutions demonstrating full power of the framework* | ***Free and open source***: *Framework and solutions both under Apache 2.0, fully extensible and customizable*

|

||||

## Get started

|

||||

|

||||

----

|

||||

You can get started with MediaPipe Solutions by checking out any of the

|

||||

developer guides for

|

||||

[vision](https://developers.google.com/mediapipe/solutions/vision/object_detector),

|

||||

[text](https://developers.google.com/mediapipe/solutions/text/text_classifier),

|

||||

and

|

||||

[audio](https://developers.google.com/mediapipe/solutions/audio/audio_classifier)

|

||||

tasks. If you need help setting up a development environment for use with

|

||||

MediaPipe Tasks, check out the setup guides for

|

||||

[Android](https://developers.google.com/mediapipe/solutions/setup_android), [web

|

||||

apps](https://developers.google.com/mediapipe/solutions/setup_web), and

|

||||

[Python](https://developers.google.com/mediapipe/solutions/setup_python).

|

||||

|

||||

## ML solutions in MediaPipe

|

||||

## Solutions

|

||||

|

||||

Face Detection | Face Mesh | Iris | Hands | Pose | Holistic

|

||||

:----------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------: | :--------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------: | :------:

|

||||

[](https://google.github.io/mediapipe/solutions/face_detection) | [](https://google.github.io/mediapipe/solutions/face_mesh) | [](https://google.github.io/mediapipe/solutions/iris) | [](https://google.github.io/mediapipe/solutions/hands) | [](https://google.github.io/mediapipe/solutions/pose) | [](https://google.github.io/mediapipe/solutions/holistic)

|

||||

MediaPipe Solutions provides a suite of libraries and tools for you to quickly

|

||||

apply artificial intelligence (AI) and machine learning (ML) techniques in your

|

||||

applications. You can plug these solutions into your applications immediately,

|

||||

customize them to your needs, and use them across multiple development

|

||||

platforms. MediaPipe Solutions is part of the MediaPipe [open source

|

||||

project](https://github.com/google/mediapipe), so you can further customize the

|

||||

solutions code to meet your application needs.

|

||||

|

||||

Hair Segmentation | Object Detection | Box Tracking | Instant Motion Tracking | Objectron | KNIFT

|

||||

:-------------------------------------------------------------------------------------------------------------------------------------: | :----------------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------------------: | :---------------------------------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------------: | :---:

|

||||

[](https://google.github.io/mediapipe/solutions/hair_segmentation) | [](https://google.github.io/mediapipe/solutions/object_detection) | [](https://google.github.io/mediapipe/solutions/box_tracking) | [](https://google.github.io/mediapipe/solutions/instant_motion_tracking) | [](https://google.github.io/mediapipe/solutions/objectron) | [](https://google.github.io/mediapipe/solutions/knift)

|

||||

These libraries and resources provide the core functionality for each MediaPipe

|

||||

Solution:

|

||||

|

||||

<!-- []() in the first cell is needed to preserve table formatting in GitHub Pages. -->

|

||||

<!-- Whenever this table is updated, paste a copy to solutions/solutions.md. -->

|

||||

* **MediaPipe Tasks**: Cross-platform APIs and libraries for deploying

|

||||

solutions. [Learn

|

||||

more](https://developers.google.com/mediapipe/solutions/tasks).

|

||||

* **MediaPipe models**: Pre-trained, ready-to-run models for use with each

|

||||

solution.

|

||||

|

||||

[]() | [Android](https://google.github.io/mediapipe/getting_started/android) | [iOS](https://google.github.io/mediapipe/getting_started/ios) | [C++](https://google.github.io/mediapipe/getting_started/cpp) | [Python](https://google.github.io/mediapipe/getting_started/python) | [JS](https://google.github.io/mediapipe/getting_started/javascript) | [Coral](https://github.com/google/mediapipe/tree/master/mediapipe/examples/coral/README.md)

|

||||

:---------------------------------------------------------------------------------------- | :-------------------------------------------------------------: | :-----------------------------------------------------: | :-----------------------------------------------------: | :-----------------------------------------------------------: | :-----------------------------------------------------------: | :--------------------------------------------------------------------:

|

||||

[Face Detection](https://google.github.io/mediapipe/solutions/face_detection) | ✅ | ✅ | ✅ | ✅ | ✅ | ✅

|

||||

[Face Mesh](https://google.github.io/mediapipe/solutions/face_mesh) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

[Iris](https://google.github.io/mediapipe/solutions/iris) | ✅ | ✅ | ✅ | | |

|

||||

[Hands](https://google.github.io/mediapipe/solutions/hands) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

[Pose](https://google.github.io/mediapipe/solutions/pose) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

[Holistic](https://google.github.io/mediapipe/solutions/holistic) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

[Selfie Segmentation](https://google.github.io/mediapipe/solutions/selfie_segmentation) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

[Hair Segmentation](https://google.github.io/mediapipe/solutions/hair_segmentation) | ✅ | | ✅ | | |

|

||||

[Object Detection](https://google.github.io/mediapipe/solutions/object_detection) | ✅ | ✅ | ✅ | | | ✅

|

||||

[Box Tracking](https://google.github.io/mediapipe/solutions/box_tracking) | ✅ | ✅ | ✅ | | |

|

||||

[Instant Motion Tracking](https://google.github.io/mediapipe/solutions/instant_motion_tracking) | ✅ | | | | |

|

||||

[Objectron](https://google.github.io/mediapipe/solutions/objectron) | ✅ | | ✅ | ✅ | ✅ |

|

||||

[KNIFT](https://google.github.io/mediapipe/solutions/knift) | ✅ | | | | |

|

||||

[AutoFlip](https://google.github.io/mediapipe/solutions/autoflip) | | | ✅ | | |

|

||||

[MediaSequence](https://google.github.io/mediapipe/solutions/media_sequence) | | | ✅ | | |

|

||||

[YouTube 8M](https://google.github.io/mediapipe/solutions/youtube_8m) | | | ✅ | | |

|

||||

These tools let you customize and evaluate solutions:

|

||||

|

||||

See also

|

||||

[MediaPipe Models and Model Cards](https://google.github.io/mediapipe/solutions/models)

|

||||

for ML models released in MediaPipe.

|

||||

* **MediaPipe Model Maker**: Customize models for solutions with your data.

|

||||

[Learn more](https://developers.google.com/mediapipe/solutions/model_maker).

|

||||

* **MediaPipe Studio**: Visualize, evaluate, and benchmark solutions in your

|

||||

browser. [Learn

|

||||

more](https://developers.google.com/mediapipe/solutions/studio).

|

||||

|

||||

## Getting started

|

||||

### Legacy solutions

|

||||

|

||||

To start using MediaPipe

|

||||

[solutions](https://google.github.io/mediapipe/solutions/solutions) with only a few

|

||||

lines of code, see example code and demos in

|

||||

[MediaPipe in Python](https://google.github.io/mediapipe/getting_started/python) and

|

||||

[MediaPipe in JavaScript](https://google.github.io/mediapipe/getting_started/javascript).

|

||||

We have ended support for [these MediaPipe Legacy Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||

as of March 1, 2023. All other MediaPipe Legacy Solutions will be upgraded to

|

||||

a new MediaPipe Solution. See the [Solutions guide](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||

for details. The [code repository](https://github.com/google/mediapipe/tree/master/mediapipe)

|

||||

and prebuilt binaries for all MediaPipe Legacy Solutions will continue to be

|

||||

provided on an as-is basis.

|

||||

|

||||

To use MediaPipe in C++, Android and iOS, which allow further customization of

|

||||

the [solutions](https://google.github.io/mediapipe/solutions/solutions) as well as

|

||||

building your own, learn how to

|

||||

[install](https://google.github.io/mediapipe/getting_started/install) MediaPipe and

|

||||

start building example applications in

|

||||

[C++](https://google.github.io/mediapipe/getting_started/cpp),

|

||||

[Android](https://google.github.io/mediapipe/getting_started/android) and

|

||||

[iOS](https://google.github.io/mediapipe/getting_started/ios).

|

||||

For more on the legacy solutions, see the [documentation](https://github.com/google/mediapipe/tree/master/docs/solutions).

|

||||

|

||||

The source code is hosted in the

|

||||

[MediaPipe GitHub repository](https://github.com/google/mediapipe), and you can

|

||||

run code search using

|

||||

[Google Open Source Code Search](https://cs.opensource.google/mediapipe/mediapipe).

|

||||

## Framework

|

||||

|

||||

## Publications

|

||||

To start using MediaPipe Framework, [install MediaPipe

|

||||

Framework](https://developers.google.com/mediapipe/framework/getting_started/install)

|

||||

and start building example applications in C++, Android, and iOS.

|

||||

|

||||

[MediaPipe Framework](https://developers.google.com/mediapipe/framework) is the

|

||||

low-level component used to build efficient on-device machine learning

|

||||

pipelines, similar to the premade MediaPipe Solutions.

|

||||

|

||||

Before using MediaPipe Framework, familiarize yourself with the following key

|

||||

[Framework

|

||||

concepts](https://developers.google.com/mediapipe/framework/framework_concepts/overview.md):

|

||||

|

||||

* [Packets](https://developers.google.com/mediapipe/framework/framework_concepts/packets.md)

|

||||

* [Graphs](https://developers.google.com/mediapipe/framework/framework_concepts/graphs.md)

|

||||

* [Calculators](https://developers.google.com/mediapipe/framework/framework_concepts/calculators.md)

|

||||

|

||||

## Community

|

||||

|

||||

* [Slack community](https://mediapipe.page.link/joinslack) for MediaPipe

|

||||

users.

|

||||

* [Discuss](https://groups.google.com/forum/#!forum/mediapipe) - General

|

||||

community discussion around MediaPipe.

|

||||

* [Awesome MediaPipe](https://mediapipe.page.link/awesome-mediapipe) - A

|

||||

curated list of awesome MediaPipe related frameworks, libraries and

|

||||

software.

|

||||

|

||||

## Contributing

|

||||

|

||||

We welcome contributions. Please follow these

|

||||

[guidelines](https://github.com/google/mediapipe/blob/master/CONTRIBUTING.md).

|

||||

|

||||

We use GitHub issues for tracking requests and bugs. Please post questions to

|

||||

the MediaPipe Stack Overflow with a `mediapipe` tag.

|

||||

|

||||

## Resources

|

||||

|

||||

### Publications

|

||||

|

||||

* [Bringing artworks to life with AR](https://developers.googleblog.com/2021/07/bringing-artworks-to-life-with-ar.html)

|

||||

in Google Developers Blog

|

||||

|

|

@@ -102,7 +125,8 @@ run code search using

|

|||

* [SignAll SDK: Sign language interface using MediaPipe is now available for

|

||||

developers](https://developers.googleblog.com/2021/04/signall-sdk-sign-language-interface-using-mediapipe-now-available.html)

|

||||

in Google Developers Blog

|

||||

* [MediaPipe Holistic - Simultaneous Face, Hand and Pose Prediction, on Device](https://ai.googleblog.com/2020/12/mediapipe-holistic-simultaneous-face.html)

|

||||

* [MediaPipe Holistic - Simultaneous Face, Hand and Pose Prediction, on

|

||||

Device](https://ai.googleblog.com/2020/12/mediapipe-holistic-simultaneous-face.html)

|

||||

in Google AI Blog

|

||||

* [Background Features in Google Meet, Powered by Web ML](https://ai.googleblog.com/2020/10/background-features-in-google-meet.html)

|

||||

in Google AI Blog

|

||||

|

|

@@ -130,43 +154,6 @@ run code search using

|

|||

in Google AI Blog

|

||||

* [MediaPipe: A Framework for Building Perception Pipelines](https://arxiv.org/abs/1906.08172)

|

||||

|

||||

## Videos

|

||||

### Videos

|

||||

|

||||

* [YouTube Channel](https://www.youtube.com/c/MediaPipe)

|

||||

|

||||

## Events

|

||||

|

||||

* [MediaPipe Seattle Meetup, Google Building Waterside, 13 Feb 2020](https://mediapipe.page.link/seattle2020)

|

||||

* [AI Nextcon 2020, 12-16 Feb 2020, Seattle](http://aisea20.xnextcon.com/)

|

||||

* [MediaPipe Madrid Meetup, 16 Dec 2019](https://www.meetup.com/Madrid-AI-Developers-Group/events/266329088/)

|

||||

* [MediaPipe London Meetup, Google 123 Building, 12 Dec 2019](https://www.meetup.com/London-AI-Tech-Talk/events/266329038)

|

||||

* [ML Conference, Berlin, 11 Dec 2019](https://mlconference.ai/machine-learning-advanced-development/mediapipe-building-real-time-cross-platform-mobile-web-edge-desktop-video-audio-ml-pipelines/)

|

||||

* [MediaPipe Berlin Meetup, Google Berlin, 11 Dec 2019](https://www.meetup.com/Berlin-AI-Tech-Talk/events/266328794/)

|

||||

* [The 3rd Workshop on YouTube-8M Large Scale Video Understanding Workshop,

|

||||

Seoul, Korea ICCV

|

||||

2019](https://research.google.com/youtube8m/workshop2019/index.html)

|

||||

* [AI DevWorld 2019, 10 Oct 2019, San Jose, CA](https://aidevworld.com)

|

||||

* [Google Industry Workshop at ICIP 2019, 24 Sept 2019, Taipei, Taiwan](http://2019.ieeeicip.org/?action=page4&id=14#Google)

|

||||

([presentation](https://docs.google.com/presentation/d/e/2PACX-1vRIBBbO_LO9v2YmvbHHEt1cwyqH6EjDxiILjuT0foXy1E7g6uyh4CesB2DkkEwlRDO9_lWfuKMZx98T/pub?start=false&loop=false&delayms=3000&slide=id.g556cc1a659_0_5))

|

||||

* [Open sourced at CVPR 2019, 17~20 June, Long Beach, CA](https://sites.google.com/corp/view/perception-cv4arvr/mediapipe)

|

||||

|

||||

## Community

|

||||

|

||||

* [Awesome MediaPipe](https://mediapipe.page.link/awesome-mediapipe) - A

|

||||

curated list of awesome MediaPipe related frameworks, libraries and software

|

||||

* [Slack community](https://mediapipe.page.link/joinslack) for MediaPipe users

|

||||

* [Discuss](https://groups.google.com/forum/#!forum/mediapipe) - General

|

||||

community discussion around MediaPipe

|

||||

|

||||

## Alpha disclaimer

|

||||

|

||||

MediaPipe is currently in alpha at v0.7. We may be still making breaking API

|

||||

changes and expect to get to stable APIs by v1.0.

|

||||

|

||||

## Contributing

|

||||

|

||||

We welcome contributions. Please follow these

|

||||

[guidelines](https://github.com/google/mediapipe/blob/master/CONTRIBUTING.md).

|

||||

|

||||

We use GitHub issues for tracking requests and bugs. Please post questions to

|

||||

the MediaPipe Stack Overflow with a `mediapipe` tag.

|

||||

|

|

|

|||

12

WORKSPACE

|

|

@@ -375,6 +375,18 @@ http_archive(

|

|||

url = "https://github.com/opencv/opencv/releases/download/3.2.0/opencv-3.2.0-ios-framework.zip",

|

||||

)

|

||||

|

||||

# Building an opencv.xcframework from the OpenCV 4.5.1 sources is necessary for

|

||||

# MediaPipe iOS Task Libraries to be supported on arm64(M1) Macs. An

|

||||

# `opencv.xcframework` archive has not been released and it is recommended to

|

||||

# build the same from source using a script provided in OpenCV 4.5.0 upwards.

|

||||

http_archive(

|

||||

name = "ios_opencv_source",

|

||||

sha256 = "5fbc26ee09e148a4d494b225d04217f7c913ca1a4d46115b70cca3565d7bbe05",

|

||||

build_file = "@//third_party:opencv_ios_source.BUILD",

|

||||

type = "zip",

|

||||

url = "https://github.com/opencv/opencv/archive/refs/tags/4.5.1.zip",

|

||||

)

|

||||

|

||||

http_archive(

|

||||

name = "stblib",

|

||||

strip_prefix = "stb-b42009b3b9d4ca35bc703f5310eedc74f584be58",

|

||||

|

|

|

|||
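The `sha256` attribute in the new `ios_opencv_source` rule pins the OpenCV 4.5.1 source archive to a specific digest. As a rough illustration, and not part of this commit, the Python sketch below shows how such a pin could be checked by hand against a downloaded archive. The helper names `sha256_of_stream` and `matches_pin` are hypothetical, and the snippet uses only the standard library.

```python
import hashlib


def sha256_of_stream(stream, chunk_size=1 << 16):
    """Return the hex SHA-256 digest of a binary file-like object.

    Reads in fixed-size chunks so large archives are not loaded
    into memory all at once.
    """
    digest = hashlib.sha256()
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        digest.update(chunk)
    return digest.hexdigest()


def matches_pin(stream, expected_hex):
    """Compare a stream's digest with a pinned hex digest (case-insensitive)."""
    return sha256_of_stream(stream) == expected_hex.strip().lower()
```

To check a local download, open the file in binary mode and pass the file object together with the digest copied from the `http_archive` rule. A mismatch would suggest that the file differs from the one the rule expects.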

195

docs/index.md

|

|

@@ -4,8 +4,6 @@ title: Home

|

|||

nav_order: 1

|

||||

---

|

||||

|

||||

|

||||

|

||||

----

|

||||

|

||||

**Attention:** *Thanks for your interest in MediaPipe! We have moved to

|

||||

|

|

@@ -14,86 +12,111 @@ as the primary developer documentation site for MediaPipe as of April 3, 2023.*

|

|||

|

||||

*This notice and web page will be removed on June 1, 2023.*

|

||||

|

||||

----

|

||||

|

||||

|

||||

<br><br><br><br><br><br><br><br><br><br>

|

||||

<br><br><br><br><br><br><br><br><br><br>

|

||||

<br><br><br><br><br><br><br><br><br><br>

|

||||

**Attention**: MediaPipe Solutions Preview is an early release. [Learn

|

||||

more](https://developers.google.com/mediapipe/solutions/about#notice).

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

**On-device machine learning for everyone**

|

||||

|

||||

## Live ML anywhere

|

||||

Delight your customers with innovative machine learning features. MediaPipe

|

||||

contains everything that you need to customize and deploy to mobile (Android,

|

||||

iOS), web, desktop, edge devices, and IoT, effortlessly.

|

||||

|

||||

[MediaPipe](https://google.github.io/mediapipe/) offers cross-platform, customizable

|

||||

ML solutions for live and streaming media.

|

||||

* [See demos](https://goo.gle/mediapipe-studio)

|

||||

* [Learn more](https://developers.google.com/mediapipe/solutions)

|

||||

|

||||

|

|

||||

:------------------------------------------------------------------------------------------------------------: | :----------------------------------------------------:

|

||||

***End-to-End acceleration***: *Built-in fast ML inference and processing accelerated even on common hardware* | ***Build once, deploy anywhere***: *Unified solution works across Android, iOS, desktop/cloud, web and IoT*

|

||||

|

|

||||

***Ready-to-use solutions***: *Cutting-edge ML solutions demonstrating full power of the framework* | ***Free and open source***: *Framework and solutions both under Apache 2.0, fully extensible and customizable*

|

||||

## Get started

|

||||

|

||||

----

|

||||

You can get started with MediaPipe Solutions by checking out any of the

|

||||

developer guides for

|

||||

[vision](https://developers.google.com/mediapipe/solutions/vision/object_detector),

|

||||

[text](https://developers.google.com/mediapipe/solutions/text/text_classifier),

|

||||

and

|

||||

[audio](https://developers.google.com/mediapipe/solutions/audio/audio_classifier)

|

||||

tasks. If you need help setting up a development environment for use with

|

||||

MediaPipe Tasks, check out the setup guides for

|

||||

[Android](https://developers.google.com/mediapipe/solutions/setup_android), [web

|

||||

apps](https://developers.google.com/mediapipe/solutions/setup_web), and

|

||||

[Python](https://developers.google.com/mediapipe/solutions/setup_python).

|

||||

|

||||

## ML solutions in MediaPipe

|

||||

## Solutions

|

||||

|

||||

Face Detection | Face Mesh | Iris | Hands | Pose | Holistic

|

||||

:----------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------: | :--------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------: | :------:

|

||||

[](https://google.github.io/mediapipe/solutions/face_detection) | [](https://google.github.io/mediapipe/solutions/face_mesh) | [](https://google.github.io/mediapipe/solutions/iris) | [](https://google.github.io/mediapipe/solutions/hands) | [](https://google.github.io/mediapipe/solutions/pose) | [](https://google.github.io/mediapipe/solutions/holistic)

|

||||

MediaPipe Solutions provides a suite of libraries and tools for you to quickly

|

||||

apply artificial intelligence (AI) and machine learning (ML) techniques in your

|

||||

applications. You can plug these solutions into your applications immediately,

|

||||

customize them to your needs, and use them across multiple development

|

||||

platforms. MediaPipe Solutions is part of the MediaPipe [open source

|

||||

project](https://github.com/google/mediapipe), so you can further customize the

|

||||

solutions code to meet your application needs.

|

||||

|

||||

Hair Segmentation | Object Detection | Box Tracking | Instant Motion Tracking | Objectron | KNIFT

|

||||

:-------------------------------------------------------------------------------------------------------------------------------------: | :----------------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------------------: | :---------------------------------------------------------------------------------------------------------------------------------------------------: | :-------------------------------------------------------------------------------------------------------------------: | :---:

|

||||

[](https://google.github.io/mediapipe/solutions/hair_segmentation) | [](https://google.github.io/mediapipe/solutions/object_detection) | [](https://google.github.io/mediapipe/solutions/box_tracking) | [](https://google.github.io/mediapipe/solutions/instant_motion_tracking) | [](https://google.github.io/mediapipe/solutions/objectron) | [](https://google.github.io/mediapipe/solutions/knift)

|

||||

These libraries and resources provide the core functionality for each MediaPipe

|

||||

Solution:

|

||||

|

||||

<!-- []() in the first cell is needed to preserve table formatting in GitHub Pages. -->

|

||||

<!-- Whenever this table is updated, paste a copy to solutions/solutions.md. -->

|

||||

* **MediaPipe Tasks**: Cross-platform APIs and libraries for deploying

|

||||

solutions. [Learn

|

||||

more](https://developers.google.com/mediapipe/solutions/tasks).

|

||||

* **MediaPipe models**: Pre-trained, ready-to-run models for use with each

|

||||

solution.

|

||||

|

||||

[]() | [Android](https://google.github.io/mediapipe/getting_started/android) | [iOS](https://google.github.io/mediapipe/getting_started/ios) | [C++](https://google.github.io/mediapipe/getting_started/cpp) | [Python](https://google.github.io/mediapipe/getting_started/python) | [JS](https://google.github.io/mediapipe/getting_started/javascript) | [Coral](https://github.com/google/mediapipe/tree/master/mediapipe/examples/coral/README.md)

|

||||

:---------------------------------------------------------------------------------------- | :-------------------------------------------------------------: | :-----------------------------------------------------: | :-----------------------------------------------------: | :-----------------------------------------------------------: | :-----------------------------------------------------------: | :--------------------------------------------------------------------:

|

||||

[Face Detection](https://google.github.io/mediapipe/solutions/face_detection) | ✅ | ✅ | ✅ | ✅ | ✅ | ✅

|

||||

[Face Mesh](https://google.github.io/mediapipe/solutions/face_mesh) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

[Iris](https://google.github.io/mediapipe/solutions/iris) | ✅ | ✅ | ✅ | | |

|

||||

[Hands](https://google.github.io/mediapipe/solutions/hands) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

[Pose](https://google.github.io/mediapipe/solutions/pose) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

[Holistic](https://google.github.io/mediapipe/solutions/holistic) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

[Selfie Segmentation](https://google.github.io/mediapipe/solutions/selfie_segmentation) | ✅ | ✅ | ✅ | ✅ | ✅ |

|

||||

[Hair Segmentation](https://google.github.io/mediapipe/solutions/hair_segmentation) | ✅ | | ✅ | | |

|

||||

[Object Detection](https://google.github.io/mediapipe/solutions/object_detection) | ✅ | ✅ | ✅ | | | ✅

|

||||

[Box Tracking](https://google.github.io/mediapipe/solutions/box_tracking) | ✅ | ✅ | ✅ | | |

|

||||

[Instant Motion Tracking](https://google.github.io/mediapipe/solutions/instant_motion_tracking) | ✅ | | | | |

|

||||

[Objectron](https://google.github.io/mediapipe/solutions/objectron) | ✅ | | ✅ | ✅ | ✅ |

|

||||

[KNIFT](https://google.github.io/mediapipe/solutions/knift) | ✅ | | | | |

|

||||

[AutoFlip](https://google.github.io/mediapipe/solutions/autoflip) | | | ✅ | | |

|

||||

[MediaSequence](https://google.github.io/mediapipe/solutions/media_sequence) | | | ✅ | | |

|

||||

[YouTube 8M](https://google.github.io/mediapipe/solutions/youtube_8m) | | | ✅ | | |

|

||||

These tools let you customize and evaluate solutions:

|

||||

|

||||

See also

|

||||

[MediaPipe Models and Model Cards](https://google.github.io/mediapipe/solutions/models)

|

||||

for ML models released in MediaPipe.

|

||||

* **MediaPipe Model Maker**: Customize models for solutions with your data.

|

||||

[Learn more](https://developers.google.com/mediapipe/solutions/model_maker).

|

||||

* **MediaPipe Studio**: Visualize, evaluate, and benchmark solutions in your

|

||||

browser. [Learn

|

||||

more](https://developers.google.com/mediapipe/solutions/studio).

|

||||

|

||||

## Getting started

|

||||

### Legacy solutions

|

||||

|

||||

To start using MediaPipe

|

||||

[solutions](https://google.github.io/mediapipe/solutions/solutions) with only a few

|

||||

lines of code, see example code and demos in

|

||||

[MediaPipe in Python](https://google.github.io/mediapipe/getting_started/python) and

|

||||

[MediaPipe in JavaScript](https://google.github.io/mediapipe/getting_started/javascript).

|

||||

We have ended support for [these MediaPipe Legacy Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||

as of March 1, 2023. All other MediaPipe Legacy Solutions will be upgraded to

|

||||

a new MediaPipe Solution. See the [Solutions guide](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||

for details. The [code repository](https://github.com/google/mediapipe/tree/master/mediapipe)

|

||||

and prebuilt binaries for all MediaPipe Legacy Solutions will continue to be

|

||||

provided on an as-is basis.

|

||||

|

||||

To use MediaPipe in C++, Android and iOS, which allow further customization of

|

||||

the [solutions](https://google.github.io/mediapipe/solutions/solutions) as well as

|

||||

building your own, learn how to

|

||||

[install](https://google.github.io/mediapipe/getting_started/install) MediaPipe and

|

||||

start building example applications in

|

||||

[C++](https://google.github.io/mediapipe/getting_started/cpp),

|

||||

[Android](https://google.github.io/mediapipe/getting_started/android) and

|

||||

[iOS](https://google.github.io/mediapipe/getting_started/ios).

|

||||

For more on the legacy solutions, see the [documentation](https://github.com/google/mediapipe/tree/master/docs/solutions).

|

||||

|

||||

The source code is hosted in the

|

||||

[MediaPipe GitHub repository](https://github.com/google/mediapipe), and you can

|

||||

run code search using

|

||||

[Google Open Source Code Search](https://cs.opensource.google/mediapipe/mediapipe).

|

||||

## Framework

|

||||

|

||||

## Publications

|

||||

To start using MediaPipe Framework, [install MediaPipe

|

||||

Framework](https://developers.google.com/mediapipe/framework/getting_started/install)

|

||||

and start building example applications in C++, Android, and iOS.

|

||||

|

||||

[MediaPipe Framework](https://developers.google.com/mediapipe/framework) is the

|

||||

low-level component used to build efficient on-device machine learning

|

||||

pipelines, similar to the premade MediaPipe Solutions.

|

||||

|

||||

Before using MediaPipe Framework, familiarize yourself with the following key

|

||||

[Framework

|

||||

concepts](https://developers.google.com/mediapipe/framework/framework_concepts/overview.md):

|

||||

|

||||

* [Packets](https://developers.google.com/mediapipe/framework/framework_concepts/packets.md)

|

||||

* [Graphs](https://developers.google.com/mediapipe/framework/framework_concepts/graphs.md)

|

||||

* [Calculators](https://developers.google.com/mediapipe/framework/framework_concepts/calculators.md)

|

||||

|

||||

## Community

|

||||

|

||||

* [Slack community](https://mediapipe.page.link/joinslack) for MediaPipe

|

||||

users.

|

||||

* [Discuss](https://groups.google.com/forum/#!forum/mediapipe) - General

|

||||

community discussion around MediaPipe.

|

||||

* [Awesome MediaPipe](https://mediapipe.page.link/awesome-mediapipe) - A

|

||||

curated list of awesome MediaPipe related frameworks, libraries and

|

||||

software.

|

||||

|

||||

## Contributing

|

||||

|

||||

We welcome contributions. Please follow these
[guidelines](https://github.com/google/mediapipe/blob/master/CONTRIBUTING.md).

We use GitHub issues for tracking requests and bugs. Please post questions to
the MediaPipe Stack Overflow with a `mediapipe` tag.

|

||||

|

||||

## Resources

|

||||

|

||||

### Publications

|

||||

|

||||

* [Bringing artworks to life with AR](https://developers.googleblog.com/2021/07/bringing-artworks-to-life-with-ar.html)
    in Google Developers Blog

|

||||

|

|

@@ -102,7 +125,8 @@ run code search using

|

|||

* [SignAll SDK: Sign language interface using MediaPipe is now available for
    developers](https://developers.googleblog.com/2021/04/signall-sdk-sign-language-interface-using-mediapipe-now-available.html)
    in Google Developers Blog

|

||||

* [MediaPipe Holistic - Simultaneous Face, Hand and Pose Prediction, on Device](https://ai.googleblog.com/2020/12/mediapipe-holistic-simultaneous-face.html)

|

||||

* [MediaPipe Holistic - Simultaneous Face, Hand and Pose Prediction, on
    Device](https://ai.googleblog.com/2020/12/mediapipe-holistic-simultaneous-face.html)
    in Google AI Blog

|

||||

* [Background Features in Google Meet, Powered by Web ML](https://ai.googleblog.com/2020/10/background-features-in-google-meet.html)

|

||||

in Google AI Blog

|

||||

|

|

@@ -130,43 +154,6 @@ run code search using

|

|||

in Google AI Blog

|

||||

* [MediaPipe: A Framework for Building Perception Pipelines](https://arxiv.org/abs/1906.08172)

|

||||

|

||||

## Videos

|

||||

### Videos

|

||||

|

||||

* [YouTube Channel](https://www.youtube.com/c/MediaPipe)

|

||||

|

||||

## Events

|

||||

|

||||

* [MediaPipe Seattle Meetup, Google Building Waterside, 13 Feb 2020](https://mediapipe.page.link/seattle2020)
* [AI Nextcon 2020, 12-16 Feb 2020, Seattle](http://aisea20.xnextcon.com/)
* [MediaPipe Madrid Meetup, 16 Dec 2019](https://www.meetup.com/Madrid-AI-Developers-Group/events/266329088/)
* [MediaPipe London Meetup, Google 123 Building, 12 Dec 2019](https://www.meetup.com/London-AI-Tech-Talk/events/266329038)
* [ML Conference, Berlin, 11 Dec 2019](https://mlconference.ai/machine-learning-advanced-development/mediapipe-building-real-time-cross-platform-mobile-web-edge-desktop-video-audio-ml-pipelines/)
* [MediaPipe Berlin Meetup, Google Berlin, 11 Dec 2019](https://www.meetup.com/Berlin-AI-Tech-Talk/events/266328794/)
* [The 3rd Workshop on YouTube-8M Large Scale Video Understanding Workshop,
    Seoul, Korea ICCV
    2019](https://research.google.com/youtube8m/workshop2019/index.html)
* [AI DevWorld 2019, 10 Oct 2019, San Jose, CA](https://aidevworld.com)
* [Google Industry Workshop at ICIP 2019, 24 Sept 2019, Taipei, Taiwan](http://2019.ieeeicip.org/?action=page4&id=14#Google)
    ([presentation](https://docs.google.com/presentation/d/e/2PACX-1vRIBBbO_LO9v2YmvbHHEt1cwyqH6EjDxiILjuT0foXy1E7g6uyh4CesB2DkkEwlRDO9_lWfuKMZx98T/pub?start=false&loop=false&delayms=3000&slide=id.g556cc1a659_0_5))
* [Open sourced at CVPR 2019, 17~20 June, Long Beach, CA](https://sites.google.com/corp/view/perception-cv4arvr/mediapipe)

|

||||

|

||||

## Community

|

||||

|

||||

* [Awesome MediaPipe](https://mediapipe.page.link/awesome-mediapipe) - A
    curated list of awesome MediaPipe related frameworks, libraries and software
* [Slack community](https://mediapipe.page.link/joinslack) for MediaPipe users
* [Discuss](https://groups.google.com/forum/#!forum/mediapipe) - General
    community discussion around MediaPipe

|

||||

|

||||

## Alpha disclaimer

|

||||

|

||||

MediaPipe is currently in alpha at v0.7. We may be still making breaking API
changes and expect to get to stable APIs by v1.0.

|

||||

|

||||

## Contributing

|

||||

|

||||

We welcome contributions. Please follow these

|

||||

[guidelines](https://github.com/google/mediapipe/blob/master/CONTRIBUTING.md).

|

||||

|

||||

We use GitHub issues for tracking requests and bugs. Please post questions to

|

||||

the MediaPipe Stack Overflow with a `mediapipe` tag.

|

||||

|

|

|

|||

|

|

@@ -141,6 +141,7 @@ config_setting(

|

|||

"ios_armv7",

|

||||

"ios_arm64",

|

||||

"ios_arm64e",

|

||||

"ios_sim_arm64",

|

||||

]

|

||||

]

|

||||

|

||||

|

|

|

|||

|

|

@@ -33,7 +33,9 @@ bzl_library(

|

|||

srcs = [

|

||||

"transitive_protos.bzl",

|

||||

],

|

||||

visibility = ["//mediapipe/framework:__subpackages__"],

|

||||

visibility = [

|

||||

"//mediapipe/framework:__subpackages__",

|

||||

],

|

||||

)

|

||||

|

||||

bzl_library(

|

||||

|

|

|

|||

|

|

@@ -23,15 +23,13 @@ package mediapipe;

|

|||

option java_package = "com.google.mediapipe.proto";

|

||||

option java_outer_classname = "CalculatorOptionsProto";

|

||||

|

||||

-// Options for Calculators. Each Calculator implementation should
-// have its own options proto, which should look like this:
+// Options for Calculators, DEPRECATED. New calculators are encouraged to use
+// proto3 syntax options:
 //
 //   message MyCalculatorOptions {
-//     extend CalculatorOptions {
-//       optional MyCalculatorOptions ext = <unique id, e.g. the CL#>;
-//     }
-//     optional string field_needed_by_my_calculator = 1;
-//     optional int32 another_field = 2;
+//     // proto3 does not expect "optional"
+//     string field_needed_by_my_calculator = 1;
+//     int32 another_field = 2;
 //     // etc
 //   }

|

||||

message CalculatorOptions {

|

||||

|

|

|

|||

|

|

@@ -15,9 +15,7 @@

|

|||

|

||||

licenses(["notice"])

|

||||

|

||||

package(

|

||||

default_visibility = ["//mediapipe/framework:__subpackages__"],

|

||||

)

|

||||

package(default_visibility = ["//mediapipe/framework:__subpackages__"])

|

||||

|

||||

cc_library(

|

||||

name = "simple_calculator",

|

||||

|

|

|

|||

|

|

@@ -974,7 +974,7 @@ class TemplateParser::Parser::ParserImpl {

|

|||

}

|

||||

|

||||

// Consumes an identifier and saves its value in the identifier parameter.

|

||||

// Returns false if the token is not of type IDENTFIER.

|

||||

// Returns false if the token is not of type IDENTIFIER.

|

||||

bool ConsumeIdentifier(std::string* identifier) {

|

||||

if (LookingAtType(io::Tokenizer::TYPE_IDENTIFIER)) {

|

||||

*identifier = tokenizer_.current().text;

|

||||

|

|

@@ -1672,7 +1672,9 @@ class TemplateParser::Parser::MediaPipeParserImpl

|

|||

if (field_type == ProtoUtilLite::FieldType::TYPE_MESSAGE) {

|

||||

*args = {""};

|

||||

} else {

|

||||

MEDIAPIPE_CHECK_OK(ProtoUtilLite::Serialize({"1"}, field_type, args));

|

||||

constexpr char kPlaceholderValue[] = "1";

|

||||

MEDIAPIPE_CHECK_OK(

|

||||

ProtoUtilLite::Serialize({kPlaceholderValue}, field_type, args));

|

||||

}

|

||||

}

|

||||

|

||||

|

|

|

|||

|

|

@@ -19,9 +19,7 @@ load(

|

|||

|

||||

licenses(["notice"])

|

||||

|

||||

package(

|

||||

default_visibility = ["//mediapipe/model_maker/python/vision/gesture_recognizer:__subpackages__"],

|

||||

)

|

||||

package(default_visibility = ["//mediapipe/model_maker/python/vision/gesture_recognizer:__subpackages__"])

|

||||

|

||||

mediapipe_files(

|

||||

srcs = [

|

||||

|

|

|

|||

|

|

@@ -19,9 +19,7 @@ load(

|

|||

|

||||

licenses(["notice"])

|

||||

|

||||

package(

|

||||

default_visibility = ["//mediapipe/model_maker/python/text/text_classifier:__subpackages__"],

|

||||

)

|

||||

package(default_visibility = ["//mediapipe/model_maker/python/text/text_classifier:__subpackages__"])

|

||||

|

||||

mediapipe_files(

|

||||

srcs = [

|

||||

|

|

|

|||

|

|

@@ -14,9 +14,7 @@

|

|||

|

||||

# Placeholder for internal Python strict library and test compatibility macro.

|

||||

|

||||

package(

|

||||

default_visibility = ["//mediapipe:__subpackages__"],

|

||||

)

|

||||

package(default_visibility = ["//mediapipe:__subpackages__"])

|

||||

|

||||

licenses(["notice"])

|

||||

|

||||

|

|

|

|||

|

|

@@ -17,9 +17,7 @@

|

|||

|

||||

licenses(["notice"])

|

||||

|

||||

package(

|

||||

default_visibility = ["//mediapipe:__subpackages__"],

|

||||

)

|

||||

package(default_visibility = ["//mediapipe:__subpackages__"])

|

||||

|

||||

py_library(

|

||||

name = "data_util",

|

||||

|

|

|

|||

|

|

@@ -15,9 +15,7 @@

|

|||

# Placeholder for internal Python strict library and test compatibility macro.

|

||||

# Placeholder for internal Python strict test compatibility macro.

|

||||

|

||||

package(

|

||||

default_visibility = ["//mediapipe:__subpackages__"],

|

||||

)

|

||||

package(default_visibility = ["//mediapipe:__subpackages__"])

|

||||

|

||||

licenses(["notice"])

|

||||

|

||||

|

|

|

|||

|

|

@@ -17,9 +17,7 @@

|

|||

|

||||

licenses(["notice"])

|

||||

|

||||

package(

|

||||

default_visibility = ["//mediapipe:__subpackages__"],

|

||||

)

|

||||

package(default_visibility = ["//mediapipe:__subpackages__"])

|

||||

|

||||

py_library(

|

||||

name = "test_util",

|

||||

|

|

|

|||

|

|

@@ -14,9 +14,7 @@

|

|||

|

||||

# Placeholder for internal Python strict library and test compatibility macro.

|

||||

|

||||

package(

|

||||

default_visibility = ["//mediapipe:__subpackages__"],

|

||||

)

|

||||

package(default_visibility = ["//mediapipe:__subpackages__"])

|

||||

|

||||

licenses(["notice"])

|

||||

|

||||

|

|

|

|||

|

|

@@ -15,9 +15,7 @@

|

|||

# Placeholder for internal Python strict library and test compatibility macro.

|

||||

# Placeholder for internal Python strict test compatibility macro.

|

||||

|

||||

package(

|

||||

default_visibility = ["//mediapipe:__subpackages__"],

|

||||

)

|

||||

package(default_visibility = ["//mediapipe:__subpackages__"])

|

||||

|

||||

licenses(["notice"])

|

||||

|

||||

|

|

|

|||

|

|

@@ -12,8 +12,6 @@

|

|||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

|

||||

package(

|

||||

default_visibility = ["//mediapipe:__subpackages__"],

|

||||

)

|

||||

package(default_visibility = ["//mediapipe:__subpackages__"])

|

||||

|

||||

licenses(["notice"])

|

||||

|

|

|

|||

|

|

@@ -17,9 +17,7 @@

|

|||

|

||||

licenses(["notice"])

|

||||

|

||||

package(

|

||||

default_visibility = ["//mediapipe:__subpackages__"],

|

||||

)

|

||||

package(default_visibility = ["//mediapipe:__subpackages__"])

|

||||

|

||||

# TODO: Remove the unnecessary test data once the demo data are moved to an open-sourced

|

||||

# directory.

|

||||

|

|

|

|||

|

|

@@ -17,9 +17,7 @@

|

|||

|

||||

licenses(["notice"])

|

||||

|

||||

package(

|

||||

default_visibility = ["//mediapipe:__subpackages__"],

|

||||

)

|

||||

package(default_visibility = ["//mediapipe:__subpackages__"])

|

||||

|

||||

######################################################################

|

||||

# Public target of the MediaPipe Model Maker ImageClassifier APIs.

|

||||

|

|

|

|||

|

|

@@ -17,9 +17,7 @@

|

|||

|

||||

licenses(["notice"])

|

||||

|

||||

package(

|

||||

default_visibility = ["//mediapipe:__subpackages__"],

|

||||

)

|

||||

package(default_visibility = ["//mediapipe:__subpackages__"])

|

||||

|

||||

py_library(

|

||||

name = "object_detector_import",

|

||||

|

|

@@ -88,6 +86,17 @@ py_test(

|

|||

],

|

||||

)

|

||||

|

||||

py_library(

|

||||

name = "detection",

|

||||

srcs = ["detection.py"],

|

||||

)

|

||||

|

||||

py_test(

|

||||

name = "detection_test",

|

||||

srcs = ["detection_test.py"],

|

||||

deps = [":detection"],

|

||||

)

|

||||

|

||||

py_library(

|

||||

name = "hyperparameters",

|

||||

srcs = ["hyperparameters.py"],

|

||||

|

|

@@ -116,6 +125,7 @@ py_library(

|

|||

name = "model",

|

||||

srcs = ["model.py"],

|

||||

deps = [

|

||||

":detection",

|

||||

":model_options",

|

||||

":model_spec",

|

||||

],

|

||||

|

|

@@ -163,6 +173,7 @@ py_library(

|

|||

"//mediapipe/model_maker/python/core/tasks:classifier",

|

||||

"//mediapipe/model_maker/python/core/utils:model_util",

|

||||

"//mediapipe/model_maker/python/core/utils:quantization",

|

||||

"//mediapipe/tasks/python/metadata/metadata_writers:metadata_info",

|

||||

"//mediapipe/tasks/python/metadata/metadata_writers:metadata_writer",

|

||||

"//mediapipe/tasks/python/metadata/metadata_writers:object_detector",

|

||||

],

|

||||

|

|

|

|||

|

|

@@ -32,6 +32,7 @@ ObjectDetectorOptions = object_detector_options.ObjectDetectorOptions

|

|||

# Remove duplicated and non-public API

|

||||

del dataset

|

||||

del dataset_util # pylint: disable=undefined-variable

|

||||

del detection # pylint: disable=undefined-variable

|

||||

del hyperparameters

|

||||

del model # pylint: disable=undefined-variable

|

||||

del model_options

|

||||

|

|

|

|||

|

|

@@ -106,7 +106,7 @@ class Dataset(classification_dataset.ClassificationDataset):

|

|||

...

|

||||

Each <file0>.xml annotation file should have the following format:

|

||||

<annotation>

|

||||

<filename>file0.jpg<filename>

|

||||

<filename>file0.jpg</filename>

|

||||

<object>

|

||||

<name>kangaroo</name>

|

||||

<bndbox>

|

||||

|

|

@@ -114,6 +114,7 @@ class Dataset(classification_dataset.ClassificationDataset):

|

|||

<ymin>89</ymin>

|

||||

<xmax>386</xmax>

|

||||

<ymax>262</ymax>

|

||||

</bndbox>

|

||||

</object>

|

||||

<object>...</object>

|

||||

</annotation>
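To make the annotation layout above concrete, here is a minimal sketch that reads one such file with Python's standard `xml.etree.ElementTree`. It is not the Model Maker loader: the helper name `parse_annotation` is hypothetical, every coordinate value in the sample is made up, and the `<xmin>` field is assumed from the usual PASCAL VOC layout because the hunk boundary hides that line.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample following the layout in the docstring above.
# All coordinate values are invented for illustration.
SAMPLE = """
<annotation>
  <filename>file0.jpg</filename>
  <object>
    <name>kangaroo</name>
    <bndbox>
      <xmin>10</xmin>
      <ymin>20</ymin>
      <xmax>110</xmax>
      <ymax>220</ymax>
    </bndbox>
  </object>
</annotation>
"""


def parse_annotation(xml_text):
  """Returns (filename, [(label, xmin, ymin, xmax, ymax), ...])."""
  root = ET.fromstring(xml_text.strip())
  filename = root.findtext('filename')
  boxes = []
  for obj in root.iter('object'):
    bndbox = obj.find('bndbox')
    coords = [
        int(bndbox.findtext(tag)) for tag in ('xmin', 'ymin', 'xmax', 'ymax')
    ]
    boxes.append((obj.findtext('name'), *coords))
  return filename, boxes


filename, boxes = parse_annotation(SAMPLE)
print(filename, boxes)
```

A parser like this only finds `<filename>` when the element is properly closed, which is why the `</filename>` fix in the docstring matters to anyone copying the example.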

|

||||

|

|

|

|||

|

|

@@ -0,0 +1,34 @@

|

|||

# Copyright 2023 The MediaPipe Authors.

|

||||

#

|

||||

# Licensed under the Apache License, Version 2.0 (the "License");

|

||||

# you may not use this file except in compliance with the License.

|

||||

# You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

"""Custom Detection export module for Object Detection."""

|

||||

|

||||

from typing import Any, Mapping

|

||||

|

||||

from official.vision.serving import detection

|

||||

|

||||

|

||||

class DetectionModule(detection.DetectionModule):

|

||||

"""A serving detection module for exporting the model.

|

||||

|

||||

This module overrides the tensorflow_models DetectionModule by only outputting

|

||||

the pre-nms detection_boxes and detection_scores.

|

||||

"""

|

||||

|

||||

def serve(self, images) -> Mapping[str, Any]:

|

||||

result = super().serve(images)

|

||||

final_outputs = {

|

||||

'detection_boxes': result['detection_boxes'],

|

||||

'detection_scores': result['detection_scores'],

|

||||

}

|

||||

return final_outputs

|

||||

|

|

@@ -0,0 +1,73 @@

|

|||

# Copyright 2023 The MediaPipe Authors.

|

||||

#

|

||||

# Licensed under the Apache License, Version 2.0 (the 'License');

|

||||

# you may not use this file except in compliance with the License.

|

||||

# You may obtain a copy of the License at

|

||||

#

|

||||

# http://www.apache.org/licenses/LICENSE-2.0

|

||||

#

|

||||

# Unless required by applicable law or agreed to in writing, software

|

||||

# distributed under the License is distributed on an 'AS IS' BASIS,

|

||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||

# See the License for the specific language governing permissions and

|

||||

# limitations under the License.

|

||||

|

||||

from unittest import mock

|

||||

import tensorflow as tf

|

||||

|

||||

from mediapipe.model_maker.python.vision.object_detector import detection

|

||||

from official.core import config_definitions as cfg

|

||||

from official.vision import configs

|

||||

from official.vision.serving import detection as detection_module

|

||||

|

||||

|

||||

class ObjectDetectorTest(tf.test.TestCase):

|

||||

|

||||

@mock.patch.object(detection_module.DetectionModule, 'serve', autospec=True)

|

||||

def test_detection_module(self, mock_serve):

|

||||

mock_serve.return_value = {

|

||||

'detection_boxes': 1,

|

||||

'detection_scores': 2,

|

||||

'detection_classes': 3,

|

||||

'num_detections': 4,

|

||||

}

|

||||

model_config = configs.retinanet.RetinaNet(

|

||||

min_level=3,

|

||||

max_level=7,

|

||||

num_classes=10,

|

||||

input_size=[256, 256, 3],

|

||||

anchor=configs.retinanet.Anchor(

|

||||

num_scales=3, aspect_ratios=[0.5, 1.0, 2.0], anchor_size=3

|

||||

),

|

||||

backbone=configs.backbones.Backbone(

|

||||

type='mobilenet', mobilenet=configs.backbones.MobileNet()

|

||||

),

|

||||

decoder=configs.decoders.Decoder(

|

||||

type='fpn',

|

||||

fpn=configs.decoders.FPN(

|

||||

num_filters=128, use_separable_conv=True, use_keras_layer=True

|

||||

),

|

||||

),

|

||||

head=configs.retinanet.RetinaNetHead(

|

||||

num_filters=128, use_separable_conv=True

|

||||

),

|

||||

detection_generator=configs.retinanet.DetectionGenerator(),

|

||||

norm_activation=configs.common.NormActivation(activation='relu6'),

|

||||

)

|

||||

task_config = configs.retinanet.RetinaNetTask(model=model_config)

|

||||

params = cfg.ExperimentConfig(

|

||||

task=task_config,

|

||||

)

|

||||

detection_instance = detection.DetectionModule(

|

||||

params=params, batch_size=1, input_image_size=[256, 256]

|

||||

)

|

||||

outputs = detection_instance.serve(0)

|

||||

expected_outputs = {

|

||||

'detection_boxes': 1,

|

||||

'detection_scores': 2,

|

||||

}

|

||||

self.assertAllEqual(outputs, expected_outputs)

|

||||

|

||||

|

||||

if __name__ == '__main__':

|

||||

tf.test.main()

|

||||

|

|

@@ -27,8 +27,6 @@ class HParams(hp.BaseHParams):

|

|||

learning_rate: Learning rate to use for gradient descent training.

|

||||

batch_size: Batch size for training.

|

||||

epochs: Number of training iterations over the dataset.

|

||||

do_fine_tuning: If true, the base module is trained together with the

|

||||

classification layer on top.

|

||||

cosine_decay_epochs: The number of epochs for cosine decay learning rate.

|

||||

See

|

||||

https://www.tensorflow.org/api_docs/python/tf/keras/optimizers/schedules/CosineDecay

|

||||

|

|

@@ -39,13 +37,13 @@ class HParams(hp.BaseHParams):

|

|||

"""

|

||||

|

||||

# Parameters from BaseHParams class.

|

||||

learning_rate: float = 0.003

|

||||

batch_size: int = 32

|

||||

epochs: int = 10

|

||||

learning_rate: float = 0.3

|

||||

batch_size: int = 8

|

||||

epochs: int = 30

|

||||

|

||||

# Parameters for cosine learning rate decay

|

||||

cosine_decay_epochs: Optional[int] = None

|

||||

cosine_decay_alpha: float = 0.0

|

||||

cosine_decay_alpha: float = 1.0
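The effect of `cosine_decay_alpha` is easier to see numerically. The sketch below re-implements, in plain Python, the schedule formula from the `tf.keras.optimizers.schedules.CosineDecay` documentation referenced in the docstring; the function name `cosine_decay_lr` and the step counts are illustrative assumptions, not part of this change. In that formula `alpha` is the floor of the schedule, expressed as a fraction of the initial learning rate.

```python
import math


def cosine_decay_lr(initial_lr, step, decay_steps, alpha):
  """Learning rate at `step` under cosine decay with floor alpha * initial_lr."""
  step = min(step, decay_steps)
  cosine = 0.5 * (1.0 + math.cos(math.pi * step / decay_steps))
  return initial_lr * ((1.0 - alpha) * cosine + alpha)


# alpha = 0.0: the rate decays all the way to zero by the final step.
lr_end_alpha0 = cosine_decay_lr(0.3, step=100, decay_steps=100, alpha=0.0)

# alpha = 1.0: the cosine term is multiplied by zero, so the rate stays flat.
lr_mid_alpha1 = cosine_decay_lr(0.3, step=50, decay_steps=100, alpha=1.0)
lr_end_alpha1 = cosine_decay_lr(0.3, step=100, decay_steps=100, alpha=1.0)
```

Reading the second value of each pair above as the new default, an alpha of `1.0` would leave the learning rate constant whatever `cosine_decay_epochs` is set to, assuming the fields are passed to the schedule unchanged.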

|

||||

|

||||

|

||||

@dataclasses.dataclass

|

||||

|

|

@@ -67,8 +65,8 @@ class QATHParams:

|

|||

for more information.

|

||||

"""

|

||||

|

||||

learning_rate: float = 0.03

|

||||

batch_size: int = 32

|

||||

epochs: int = 10

|

||||

decay_steps: int = 231

|

||||

learning_rate: float = 0.3

|

||||

batch_size: int = 8

|

||||

epochs: int = 15

|

||||

decay_steps: int = 8

|

||||

decay_rate: float = 0.96

|

||||

|

|

|

|||

|

|

@@ -18,6 +18,7 @@ from typing import Mapping, Optional, Sequence, Union

|

|||

|

||||

import tensorflow as tf

|

||||

|

||||

from mediapipe.model_maker.python.vision.object_detector import detection

|

||||

from mediapipe.model_maker.python.vision.object_detector import model_options as model_opt

|

||||

from mediapipe.model_maker.python.vision.object_detector import model_spec as ms

|

||||

from official.core import config_definitions as cfg

|

||||

|

|

@@ -29,7 +30,6 @@ from official.vision.losses import loss_utils

|

|||

from official.vision.modeling import factory

|

||||

from official.vision.modeling import retinanet_model

|

||||

from official.vision.modeling.layers import detection_generator

|

||||

from official.vision.serving import detection

|

||||

|

||||

|

||||

class ObjectDetectorModel(tf.keras.Model):

|

||||

|

|

@@ -199,6 +199,7 @@ class ObjectDetectorModel(tf.keras.Model):

|

|||

max_detections=10,

|

||||

max_classes_per_detection=1,

|

||||

normalize_anchor_coordinates=True,

|

||||

omit_nms=True,

|

||||

),

|

||||

)

|

||||

tflite_post_processing_config = (

|

||||

|

|

|

|||

|

|

@@ -28,6 +28,7 @@ from mediapipe.model_maker.python.vision.object_detector import model_options as

|

|||

from mediapipe.model_maker.python.vision.object_detector import model_spec as ms

|

||||

from mediapipe.model_maker.python.vision.object_detector import object_detector_options

|

||||

from mediapipe.model_maker.python.vision.object_detector import preprocessor

|

||||

from mediapipe.tasks.python.metadata.metadata_writers import metadata_info

|

||||

from mediapipe.tasks.python.metadata.metadata_writers import metadata_writer

|

||||

from mediapipe.tasks.python.metadata.metadata_writers import object_detector as object_detector_writer

|

||||

from official.vision.evaluation import coco_evaluator

|

||||

|

|

@@ -264,6 +265,27 @@ class ObjectDetector(classifier.Classifier):

|

|||

coco_metrics = coco_eval.result()

|

||||

return losses, coco_metrics

|

||||

|

||||

def _create_fixed_anchor(

|

||||

self, anchor_box: List[float]

|

||||

) -> object_detector_writer.FixedAnchor:

|

||||

"""Helper function to create FixedAnchor objects from an anchor box array.

|

||||

|

||||

Args:

|

||||

anchor_box: List of anchor box coordinates in the format of [y_min, x_min,
y_max, x_max].

|

||||

|

||||

Returns:

|

||||

A FixedAnchor object representing the anchor_box.

|

||||

"""

|

||||

image_shape = self._model_spec.input_image_shape[:2]

|

||||

y_center_norm = (anchor_box[0] + anchor_box[2]) / (2 * image_shape[0])

|

||||

x_center_norm = (anchor_box[1] + anchor_box[3]) / (2 * image_shape[1])

|

||||

height_norm = (anchor_box[2] - anchor_box[0]) / image_shape[0]

|

||||

width_norm = (anchor_box[3] - anchor_box[1]) / image_shape[1]

|

||||

return object_detector_writer.FixedAnchor(

|

||||

x_center_norm, y_center_norm, width_norm, height_norm

|

||||

)
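The arithmetic in `_create_fixed_anchor` can be checked on a single box. The sketch below mirrors the index usage of the method above (indices 0 and 2 are divided by the image height, indices 1 and 3 by the width) and returns a plain tuple instead of a `FixedAnchor`. The function name `to_normalized_center_box` and the sample numbers are assumptions for illustration.

```python
def to_normalized_center_box(anchor_box, image_height, image_width):
  """Converts a corner-format box to normalized (x_center, y_center, w, h)."""
  # Indices 0/2 are treated as the vertical extent and 1/3 as the horizontal
  # extent, matching the arithmetic in the method above.
  y_center = (anchor_box[0] + anchor_box[2]) / (2 * image_height)
  x_center = (anchor_box[1] + anchor_box[3]) / (2 * image_width)
  height = (anchor_box[2] - anchor_box[0]) / image_height
  width = (anchor_box[3] - anchor_box[1]) / image_width
  return x_center, y_center, width, height


# A box 64 pixels tall and 128 pixels wide inside a 256x256 image.
box = to_normalized_center_box([32, 64, 96, 192], 256, 256)
print(box)
```

Returning the center and size as fractions of the image keeps the anchors independent of the input resolution, which is the form the metadata writer call above is given.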

|

||||

|

||||

def export_model(

|

||||

self,

|

||||

model_name: str = 'model.tflite',

|

||||

|

|

@@ -328,11 +350,40 @@ class ObjectDetector(classifier.Classifier):

|

|||

converter.target_spec.supported_ops = (tf.lite.OpsSet.TFLITE_BUILTINS,)

|

||||

tflite_model = converter.convert()

|

||||

|

||||

writer = object_detector_writer.MetadataWriter.create_for_models_with_nms(

|

||||

# Build anchors

|

||||

raw_anchor_boxes = self._preprocessor.anchor_boxes

|

||||

anchors = []

|

||||

for _, anchor_boxes in raw_anchor_boxes.items():

|

||||

anchor_boxes_reshaped = anchor_boxes.numpy().reshape((-1, 4))

|

||||

for ab in anchor_boxes_reshaped:

|

||||

anchors.append(self._create_fixed_anchor(ab))

|

||||

|

||||

ssd_anchors_options = object_detector_writer.SsdAnchorsOptions(

|

||||

object_detector_writer.FixedAnchorsSchema(anchors)

|

||||

)

|

||||

|

||||

tensor_decoding_options = object_detector_writer.TensorsDecodingOptions(

|

||||

num_classes=self._num_classes,

|

||||

num_boxes=len(anchors),

|

||||

num_coords=4,

|

||||

keypoint_coord_offset=0,

|

||||

num_keypoints=0,

|

||||

num_values_per_keypoint=2,

|

||||

x_scale=1,

|

||||

y_scale=1,

|

||||

w_scale=1,

|

||||

h_scale=1,

|

||||

apply_exponential_on_box_size=True,

|

||||

sigmoid_score=False,

|

||||

)

|

||||

writer = object_detector_writer.MetadataWriter.create_for_models_without_nms(

|

||||

tflite_model,

|

||||

self._model_spec.mean_rgb,

|

||||

self._model_spec.stddev_rgb,

|

||||

labels=metadata_writer.Labels().add(list(self._label_names)),

|

||||

ssd_anchors_options=ssd_anchors_options,

|

||||

tensors_decoding_options=tensor_decoding_options,

|

||||

output_tensors_order=metadata_info.RawDetectionOutputTensorsOrder.LOCATION_SCORE,

|

||||

)

|

||||

tflite_model_with_metadata, metadata_json = writer.populate()

|

||||

model_util.save_tflite(tflite_model_with_metadata, tflite_file)

|

||||

|

|

|

|||

|

|

@@ -44,6 +44,26 @@ class Preprocessor(object):

|

|||

self._aug_scale_max = 2.0

|

||||

self._max_num_instances = 100

|

||||

|

||||

self._padded_size = preprocess_ops.compute_padded_size(

|

||||

self._output_size, 2**self._max_level

|

||||

)

|

||||

|

||||

input_anchor = anchor.build_anchor_generator(

|

||||

min_level=self._min_level,

|

||||

max_level=self._max_level,

|

||||

num_scales=self._num_scales,

|

||||

aspect_ratios=self._aspect_ratios,

|

||||

anchor_size=self._anchor_size,

|

||||

)

|

||||

self._anchor_boxes = input_anchor(image_size=self._output_size)

|

||||

self._anchor_labeler = anchor.AnchorLabeler(

|

||||

self._match_threshold, self._unmatched_threshold

|

||||

)

|

||||

|

||||

@property

|

||||

def anchor_boxes(self):

|

||||

return self._anchor_boxes
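The refactoring in this hunk follows a common pattern: values that depend only on constructor arguments are computed once in `__init__` and exposed through a read-only property, rather than being rebuilt on every `__call__`. A minimal sketch of that pattern follows, using a hypothetical `GridPreprocessor` class and a toy `_build_grid` helper that stand in for the anchor generator; neither name comes from MediaPipe.

```python
class GridPreprocessor:
  """Toy preprocessor that precomputes a grid once per instance."""

  build_count = 0  # Counts how often the expensive step runs.

  def __init__(self, output_size):
    self._output_size = output_size
    self._grid = self._build_grid(output_size)

  @classmethod
  def _build_grid(cls, size):
    cls.build_count += 1
    height, width = size
    return [(y, x) for y in range(height) for x in range(width)]

  @property
  def grid(self):
    return self._grid

  def __call__(self, value):
    # Reuses the cached grid instead of rebuilding it for each example.
    return value, len(self.grid)


pre = GridPreprocessor((2, 3))
results = [pre(i) for i in range(5)]
```

One consequence visible in the diff is that the cached anchors are generated from `self._output_size` rather than from each resized image's shape, so the change appears to rely on every preprocessed image ending up with that size.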

|

||||

|

||||

def __call__(

|

||||

self, data: Mapping[str, Any], is_training: bool = True

|

||||

) -> Tuple[tf.Tensor, Mapping[str, Any]]:

|

||||

|

|

@@ -90,13 +110,10 @@ class Preprocessor(object):

|

|||

image, image_info = preprocess_ops.resize_and_crop_image(

|

||||

image,

|

||||

self._output_size,

|

||||

padded_size=preprocess_ops.compute_padded_size(

|

||||

self._output_size, 2**self._max_level

|

||||

),

|

||||

padded_size=self._padded_size,

|

||||

aug_scale_min=(self._aug_scale_min if is_training else 1.0),

|

||||

aug_scale_max=(self._aug_scale_max if is_training else 1.0),

|

||||

)

|

||||

image_height, image_width, _ = image.get_shape().as_list()

|

||||

|

||||

# Resize and crop boxes.

|

||||

image_scale = image_info[2, :]

|

||||

|

|

@@ -110,20 +127,9 @@ class Preprocessor(object):

|

|||

classes = tf.gather(classes, indices)

|

||||

|

||||

# Assign anchors.

|

||||

input_anchor = anchor.build_anchor_generator(

|

||||

min_level=self._min_level,

|

||||

max_level=self._max_level,

|

||||

num_scales=self._num_scales,

|

||||

aspect_ratios=self._aspect_ratios,

|

||||

anchor_size=self._anchor_size,

|

||||

)

|

||||

anchor_boxes = input_anchor(image_size=(image_height, image_width))

|

||||

anchor_labeler = anchor.AnchorLabeler(

|

||||

self._match_threshold, self._unmatched_threshold

|

||||

)

|

||||

(cls_targets, box_targets, _, cls_weights, box_weights) = (

|

||||

anchor_labeler.label_anchors(

|

||||

anchor_boxes, boxes, tf.expand_dims(classes, axis=1)

|

||||

self._anchor_labeler.label_anchors(

|

||||

self.anchor_boxes, boxes, tf.expand_dims(classes, axis=1)

|

||||

)

|

||||

)

|

||||

|

||||

|

|

@@ -134,7 +140,7 @@ class Preprocessor(object):

|

|||

labels = {

|

||||

'cls_targets': cls_targets,

|

||||

'box_targets': box_targets,

|

||||

'anchor_boxes': anchor_boxes,

|

||||

'anchor_boxes': self.anchor_boxes,

|

||||

'cls_weights': cls_weights,

|

||||

'box_weights': box_weights,

|

||||

'image_info': image_info,

|

||||

|

|

|

|||

|

|

@@ -361,9 +361,10 @@ class FaceStylizerGraph : public core::ModelTaskGraph {

|

|||

|

||||

auto& tensors_to_image =

|

||||

graph.AddNode("mediapipe.tasks.TensorsToImageCalculator");

|

||||

ConfigureTensorsToImageCalculator(

|

||||

image_to_tensor_options,

|

||||

&tensors_to_image.GetOptions<TensorsToImageCalculatorOptions>());

|

||||

auto& tensors_to_image_options =

|

||||

tensors_to_image.GetOptions<TensorsToImageCalculatorOptions>();

|

||||

tensors_to_image_options.mutable_input_tensor_float_range()->set_min(-1);

|

||||

tensors_to_image_options.mutable_input_tensor_float_range()->set_max(1);

|

||||

face_alignment_image >> tensors_to_image.In(kTensorsTag);

|

||||

face_alignment = tensors_to_image.Out(kImageTag).Cast<Image>();

|

||||

|

||||

|

|

|

|||

|

|

@@ -63,6 +63,8 @@ cc_library(

|

|||

"//mediapipe/calculators/image:image_properties_calculator",

|

||||

"//mediapipe/calculators/image:image_transformation_calculator",

|

||||