Merge branch 'master' into ios-gesture-recognizer-tests

commit e1d8854388

|

|

@@ -1,17 +1,16 @@

|

||||||

---

|

---

|

||||||

layout: default

|

layout: forward

|

||||||

|

target: https://developers.google.com/mediapipe

|

||||||

title: Home

|

title: Home

|

||||||

nav_order: 1

|

nav_order: 1

|

||||||

---

|

---

|

||||||

|

|

||||||

----

|

----

|

||||||

|

|

||||||

**Attention:** *Thanks for your interest in MediaPipe! We have moved to

|

**Attention:** *We have moved to

|

||||||

[https://developers.google.com/mediapipe](https://developers.google.com/mediapipe)

|

[https://developers.google.com/mediapipe](https://developers.google.com/mediapipe)

|

||||||

as the primary developer documentation site for MediaPipe as of April 3, 2023.*

|

as the primary developer documentation site for MediaPipe as of April 3, 2023.*

|

||||||

|

|

||||||

*This notice and web page will be removed on June 1, 2023.*

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

**Attention**: MediaPipe Solutions Preview is an early release. [Learn

|

**Attention**: MediaPipe Solutions Preview is an early release. [Learn

|

||||||

|

|

|

||||||

|

|

@@ -266,10 +266,10 @@ http_archive(

|

||||||

|

|

||||||

http_archive(

|

http_archive(

|

||||||

name = "com_googlesource_code_re2",

|

name = "com_googlesource_code_re2",

|

||||||

sha256 = "e06b718c129f4019d6e7aa8b7631bee38d3d450dd980246bfaf493eb7db67868",

|

sha256 = "ef516fb84824a597c4d5d0d6d330daedb18363b5a99eda87d027e6bdd9cba299",

|

||||||

strip_prefix = "re2-fe4a310131c37f9a7e7f7816fa6ce2a8b27d65a8",

|

strip_prefix = "re2-03da4fc0857c285e3a26782f6bc8931c4c950df4",

|

||||||

urls = [

|

urls = [

|

||||||

"https://github.com/google/re2/archive/fe4a310131c37f9a7e7f7816fa6ce2a8b27d65a8.tar.gz",

|

"https://github.com/google/re2/archive/03da4fc0857c285e3a26782f6bc8931c4c950df4.tar.gz",

|

||||||

],

|

],

|

||||||

)

|

)

|

||||||

|

|

||||||

|

|

|

||||||

|

|

@@ -1,17 +1,16 @@

|

||||||

---

|

---

|

||||||

layout: default

|

layout: forward

|

||||||

|

target: https://developers.google.com/mediapipe

|

||||||

title: Home

|

title: Home

|

||||||

nav_order: 1

|

nav_order: 1

|

||||||

---

|

---

|

||||||

|

|

||||||

----

|

----

|

||||||

|

|

||||||

**Attention:** *Thanks for your interest in MediaPipe! We have moved to

|

**Attention:** *We have moved to

|

||||||

[https://developers.google.com/mediapipe](https://developers.google.com/mediapipe)

|

[https://developers.google.com/mediapipe](https://developers.google.com/mediapipe)

|

||||||

as the primary developer documentation site for MediaPipe as of April 3, 2023.*

|

as the primary developer documentation site for MediaPipe as of April 3, 2023.*

|

||||||

|

|

||||||

*This notice and web page will be removed on June 1, 2023.*

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

**Attention**: MediaPipe Solutions Preview is an early release. [Learn

|

**Attention**: MediaPipe Solutions Preview is an early release. [Learn

|

||||||

|

|

|

||||||

|

|

@@ -20,9 +20,9 @@ nav_order: 1

|

||||||

---

|

---

|

||||||

|

|

||||||

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

||||||

As of March 1, 2023, this solution is planned to be upgraded to a new MediaPipe

|

As of May 10, 2023, this solution was upgraded to a new MediaPipe

|

||||||

Solution. For more information, see the

|

Solution. For more information, see the

|

||||||

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/vision/face_detector)

|

||||||

site.*

|

site.*

|

||||||

|

|

||||||

----

|

----

|

||||||

|

|

|

||||||

|

|

@@ -20,9 +20,9 @@ nav_order: 2

|

||||||

---

|

---

|

||||||

|

|

||||||

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

||||||

As of March 1, 2023, this solution is planned to be upgraded to a new MediaPipe

|

As of May 10, 2023, this solution was upgraded to a new MediaPipe

|

||||||

Solution. For more information, see the

|

Solution. For more information, see the

|

||||||

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/vision/face_landmarker)

|

||||||

site.*

|

site.*

|

||||||

|

|

||||||

----

|

----

|

||||||

|

|

|

||||||

|

|

@@ -20,9 +20,9 @@ nav_order: 3

|

||||||

---

|

---

|

||||||

|

|

||||||

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

||||||

As of March 1, 2023, this solution is planned to be upgraded to a new MediaPipe

|

As of May 10, 2023, this solution was upgraded to a new MediaPipe

|

||||||

Solution. For more information, see the

|

Solution. For more information, see the

|

||||||

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/vision/face_landmarker)

|

||||||

site.*

|

site.*

|

||||||

|

|

||||||

----

|

----

|

||||||

|

|

|

||||||

|

|

@@ -22,9 +22,9 @@ nav_order: 5

|

||||||

---

|

---

|

||||||

|

|

||||||

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

||||||

As of March 1, 2023, this solution is planned to be upgraded to a new MediaPipe

|

As of May 10, 2023, this solution was upgraded to a new MediaPipe

|

||||||

Solution. For more information, see the

|

Solution. For more information, see the

|

||||||

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/vision/pose_landmarker/)

|

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/vision/pose_landmarker)

|

||||||

site.*

|

site.*

|

||||||

|

|

||||||

----

|

----

|

||||||

|

|

|

||||||

|

|

@@ -21,7 +21,7 @@ nav_order: 1

|

||||||

---

|

---

|

||||||

|

|

||||||

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

||||||

As of March 1, 2023, this solution is planned to be upgraded to a new MediaPipe

|

As of May 10, 2023, this solution was upgraded to a new MediaPipe

|

||||||

Solution. For more information, see the

|

Solution. For more information, see the

|

||||||

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/vision/pose_landmarker/)

|

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/vision/pose_landmarker/)

|

||||||

site.*

|

site.*

|

||||||

|

|

|

||||||

|

|

@@ -1,5 +1,6 @@

|

||||||

---

|

---

|

||||||

layout: default

|

layout: forward

|

||||||

|

target: https://developers.google.com/mediapipe/solutions/guide#legacy

|

||||||

title: MediaPipe Legacy Solutions

|

title: MediaPipe Legacy Solutions

|

||||||

nav_order: 3

|

nav_order: 3

|

||||||

has_children: true

|

has_children: true

|

||||||

|

|

@@ -13,8 +14,7 @@ has_toc: false

|

||||||

{:toc}

|

{:toc}

|

||||||

---

|

---

|

||||||

|

|

||||||

**Attention:** *Thank you for your interest in MediaPipe Solutions. We have

|

**Attention:** *We have ended support for

|

||||||

ended support for

|

|

||||||

[these MediaPipe Legacy Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

[these MediaPipe Legacy Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||||

as of March 1, 2023. All other

|

as of March 1, 2023. All other

|

||||||

[MediaPipe Legacy Solutions will be upgraded](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

[MediaPipe Legacy Solutions will be upgraded](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||||

|

|

@@ -25,14 +25,6 @@ be provided on an as-is basis. We encourage you to check out the new MediaPipe

|

||||||

Solutions at:

|

Solutions at:

|

||||||

[https://developers.google.com/mediapipe/solutions](https://developers.google.com/mediapipe/solutions)*

|

[https://developers.google.com/mediapipe/solutions](https://developers.google.com/mediapipe/solutions)*

|

||||||

|

|

||||||

*This notice and web page will be removed on June 1, 2023.*

|

|

||||||

|

|

||||||

----

|

|

||||||

|

|

||||||

<br><br><br><br><br><br><br><br><br><br>

|

|

||||||

<br><br><br><br><br><br><br><br><br><br>

|

|

||||||

<br><br><br><br><br><br><br><br><br><br>

|

|

||||||

|

|

||||||

----

|

----

|

||||||

|

|

||||||

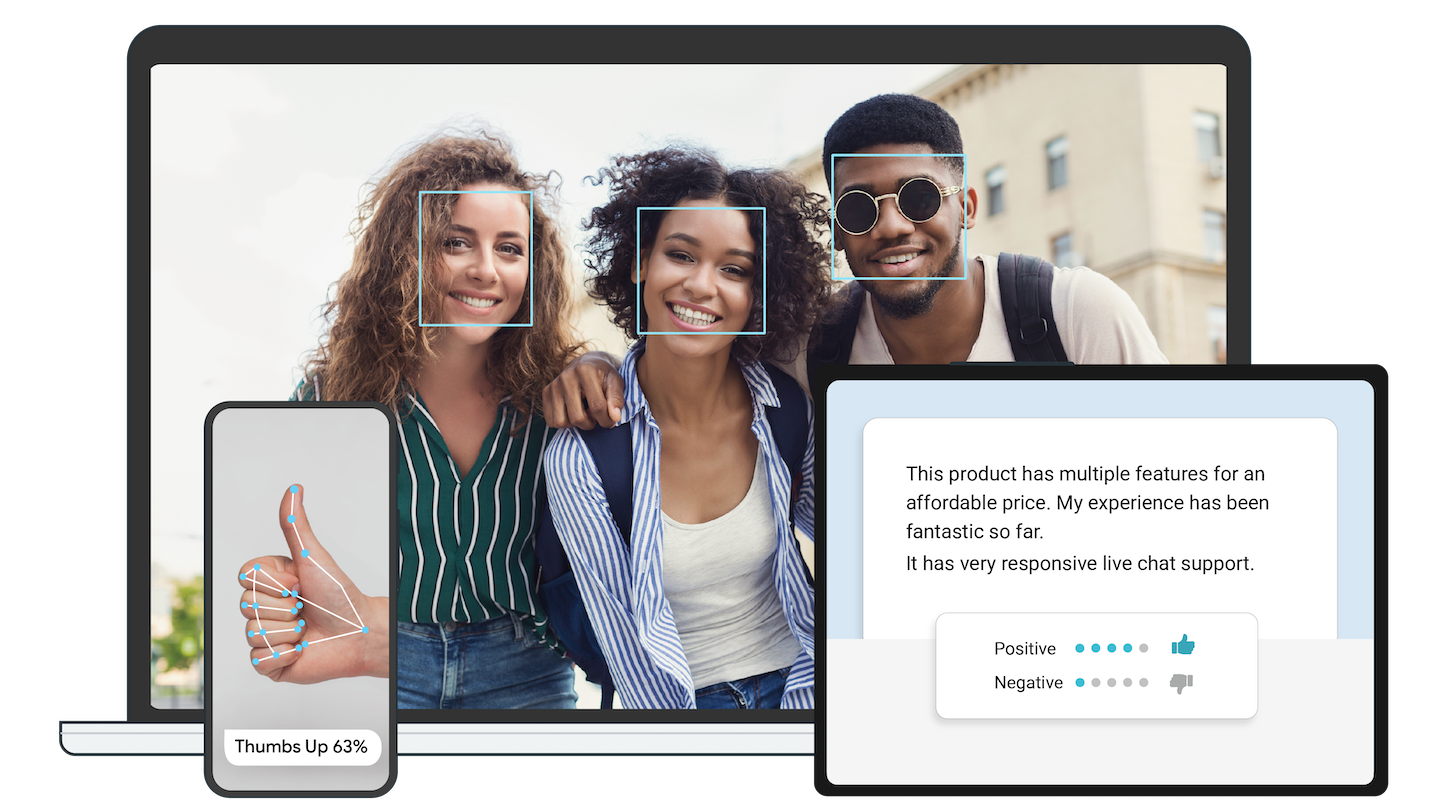

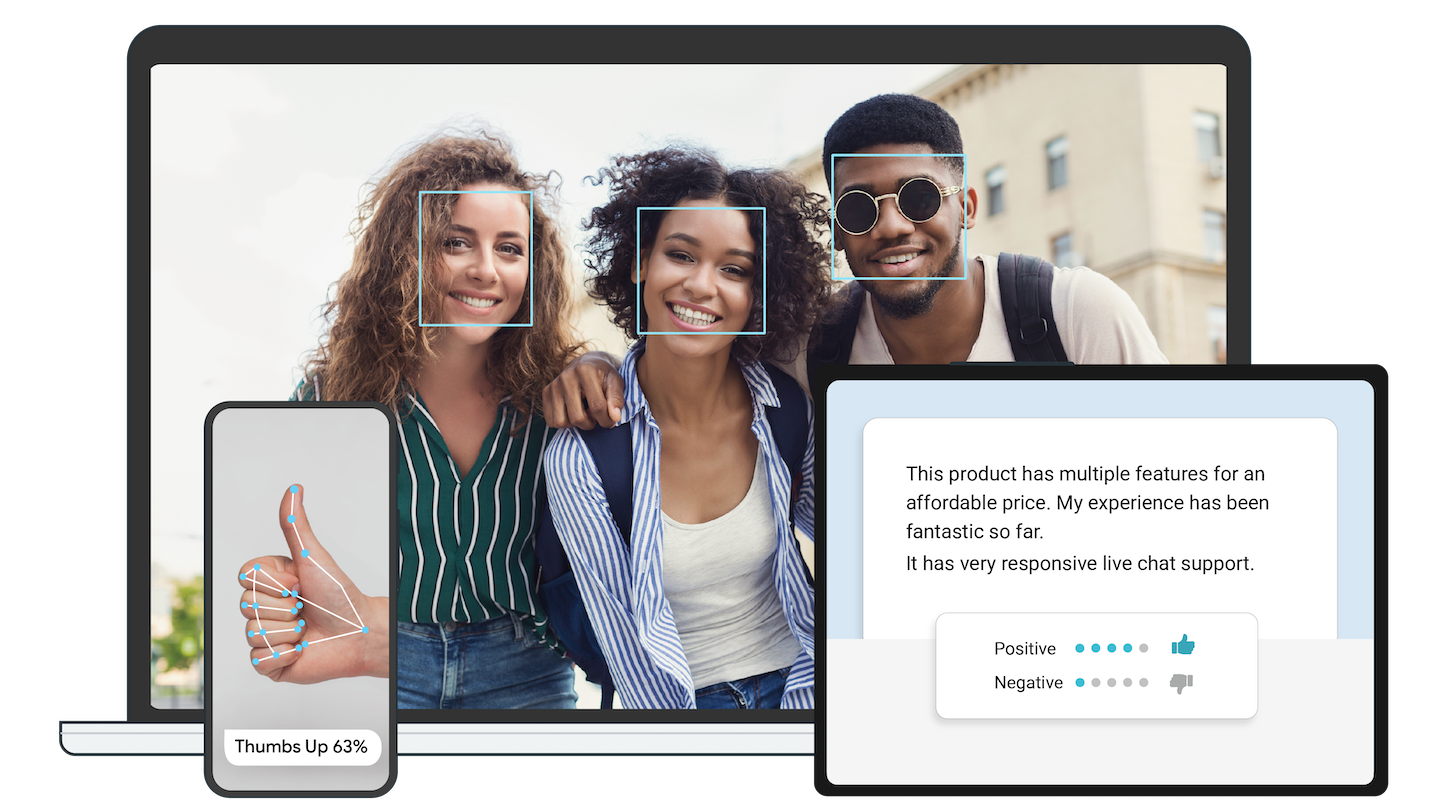

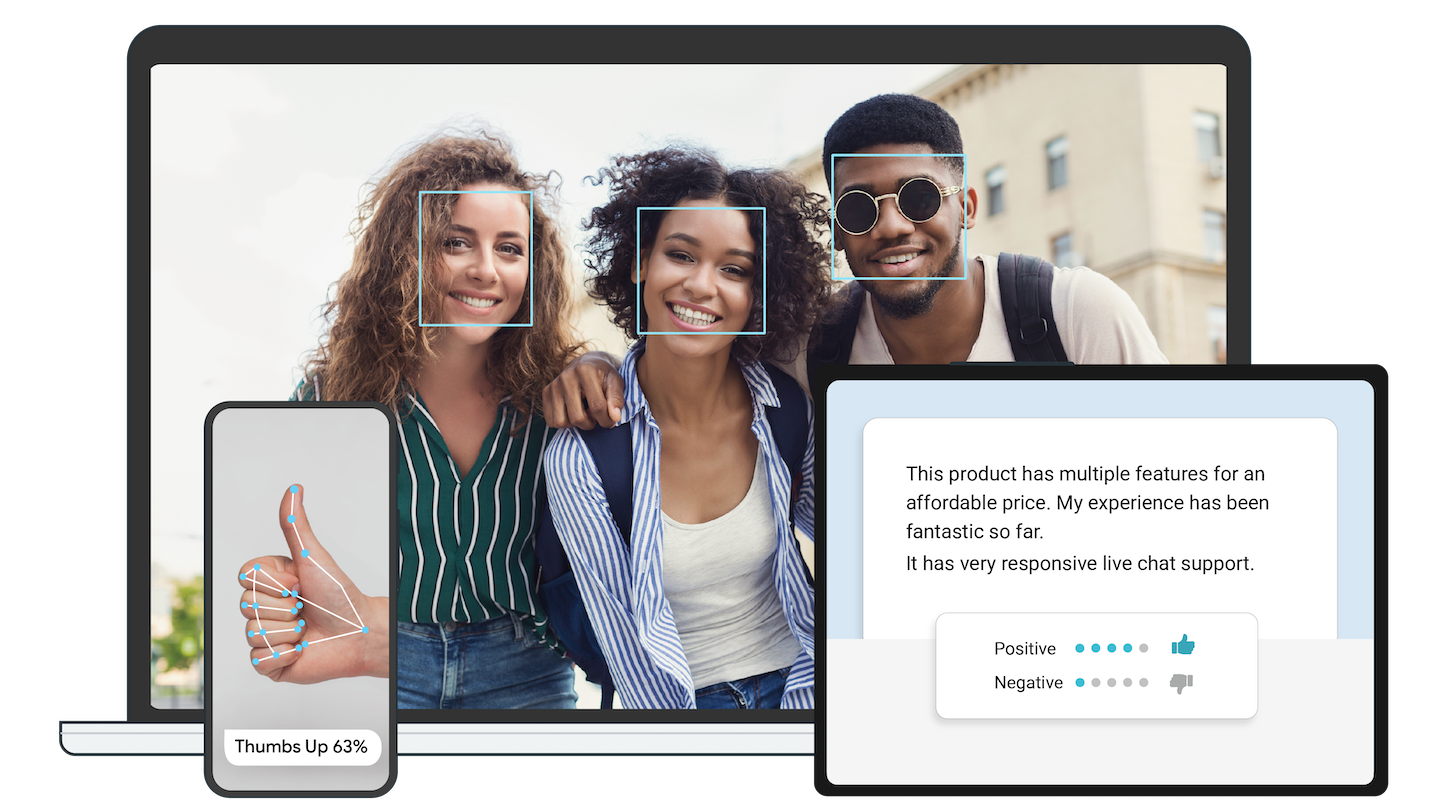

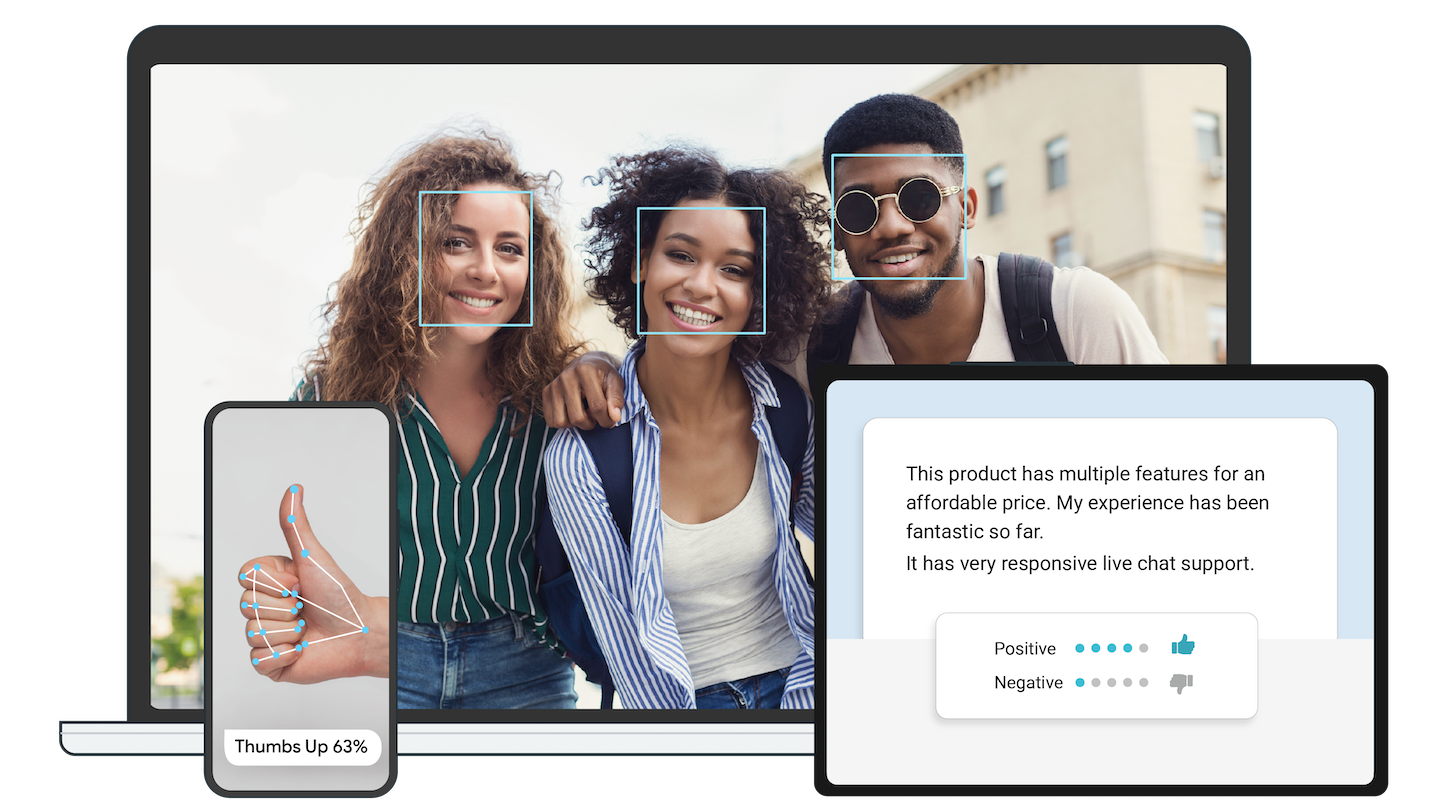

MediaPipe offers open source cross-platform, customizable ML solutions for live

|

MediaPipe offers open source cross-platform, customizable ML solutions for live

|

||||||

|

|

|

||||||

|

|

@@ -1240,6 +1240,7 @@ cc_library(

|

||||||

"//mediapipe/framework/formats:classification_cc_proto",

|

"//mediapipe/framework/formats:classification_cc_proto",

|

||||||

"//mediapipe/framework/formats:detection_cc_proto",

|

"//mediapipe/framework/formats:detection_cc_proto",

|

||||||

"//mediapipe/framework/formats:landmark_cc_proto",

|

"//mediapipe/framework/formats:landmark_cc_proto",

|

||||||

|

"//mediapipe/framework/formats:rect_cc_proto",

|

||||||

"//mediapipe/framework/port:ret_check",

|

"//mediapipe/framework/port:ret_check",

|

||||||

"//mediapipe/framework/port:status",

|

"//mediapipe/framework/port:status",

|

||||||

],

|

],

|

||||||

|

|

|

||||||

|

|

@@ -17,6 +17,7 @@

|

||||||

#include "mediapipe/framework/formats/classification.pb.h"

|

#include "mediapipe/framework/formats/classification.pb.h"

|

||||||

#include "mediapipe/framework/formats/detection.pb.h"

|

#include "mediapipe/framework/formats/detection.pb.h"

|

||||||

#include "mediapipe/framework/formats/landmark.pb.h"

|

#include "mediapipe/framework/formats/landmark.pb.h"

|

||||||

|

#include "mediapipe/framework/formats/rect.pb.h"

|

||||||

|

|

||||||

namespace mediapipe {

|

namespace mediapipe {

|

||||||

namespace api2 {

|

namespace api2 {

|

||||||

|

|

@@ -37,5 +38,12 @@ using GetDetectionVectorItemCalculator =

|

||||||

GetVectorItemCalculator<mediapipe::Detection>;

|

GetVectorItemCalculator<mediapipe::Detection>;

|

||||||

REGISTER_CALCULATOR(GetDetectionVectorItemCalculator);

|

REGISTER_CALCULATOR(GetDetectionVectorItemCalculator);

|

||||||

|

|

||||||

|

using GetNormalizedRectVectorItemCalculator =

|

||||||

|

GetVectorItemCalculator<NormalizedRect>;

|

||||||

|

REGISTER_CALCULATOR(GetNormalizedRectVectorItemCalculator);

|

||||||

|

|

||||||

|

using GetRectVectorItemCalculator = GetVectorItemCalculator<Rect>;

|

||||||

|

REGISTER_CALCULATOR(GetRectVectorItemCalculator);

|

||||||

|

|

||||||

} // namespace api2

|

} // namespace api2

|

||||||

} // namespace mediapipe

|

} // namespace mediapipe

|

||||||

|

|

|

||||||

|

|

@@ -290,17 +290,17 @@ void LandmarksToNormalizedLandmarks(const LandmarkList& landmarks,

|

||||||

// Scale Z the same way as X (using image width).

|

// Scale Z the same way as X (using image width).

|

||||||

norm_landmark->set_z(landmark.z() / image_width);

|

norm_landmark->set_z(landmark.z() / image_width);

|

||||||

|

|

||||||

if (landmark.has_presence()) {

|

|

||||||

norm_landmark->set_presence(landmark.presence());

|

|

||||||

} else {

|

|

||||||

norm_landmark->clear_presence();

|

|

||||||

}

|

|

||||||

|

|

||||||

if (landmark.has_visibility()) {

|

if (landmark.has_visibility()) {

|

||||||

norm_landmark->set_visibility(landmark.visibility());

|

norm_landmark->set_visibility(landmark.visibility());

|

||||||

} else {

|

} else {

|

||||||

norm_landmark->clear_visibility();

|

norm_landmark->clear_visibility();

|

||||||

}

|

}

|

||||||

|

|

||||||

|

if (landmark.has_presence()) {

|

||||||

|

norm_landmark->set_presence(landmark.presence());

|

||||||

|

} else {

|

||||||

|

norm_landmark->clear_presence();

|

||||||

|

}

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

|

|

|

||||||

|

|
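Reviewer note: the hunk above only reorders the presence and visibility handling, but the per-landmark logic is easy to lose in the side-by-side view. A minimal sketch of it follows, assuming hypothetical stand-in types (`LandmarkSketch`, `NormalizeLandmarkSketch`) rather than the real protobuf messages, and assuming Y is divided by the image height, which the hunk does not show.

```cpp
#include <optional>

// Hypothetical stand-in for the Landmark / NormalizedLandmark protobuf
// messages; std::optional models the has_*/set_*/clear_* accessors.
struct LandmarkSketch {
  float x = 0.f;
  float y = 0.f;
  float z = 0.f;
  std::optional<float> visibility;
  std::optional<float> presence;
};

// Hypothetical helper mirroring the body of the loop in the hunk.
LandmarkSketch NormalizeLandmarkSketch(const LandmarkSketch& landmark,
                                       int image_width, int image_height) {
  LandmarkSketch norm;
  norm.x = landmark.x / image_width;
  // Assumption: Y is divided by the image height (not shown in the hunk).
  norm.y = landmark.y / image_height;
  // Z is scaled the same way as X, using the image width.
  norm.z = landmark.z / image_width;
  // Copying the optional keeps an unset field unset, which corresponds to
  // the set_*/clear_* branches for visibility and presence.
  norm.visibility = landmark.visibility;
  norm.presence = landmark.presence;
  return norm;
}
```

Because the two optional fields are independent, the order in which they are copied should not affect the result, which is consistent with this hunk being a pure reordering.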

@@ -165,7 +165,7 @@ template <class V, class... U>

|

||||||

struct IsCompatibleType<V, OneOf<U...>>

|

struct IsCompatibleType<V, OneOf<U...>>

|

||||||

: std::integral_constant<bool, (std::is_same_v<V, U> || ...)> {};

|

: std::integral_constant<bool, (std::is_same_v<V, U> || ...)> {};

|

||||||

|

|

||||||

}; // namespace internal

|

} // namespace internal

|

||||||

|

|

||||||

template <typename T>

|

template <typename T>

|

||||||

inline Packet<T> PacketBase::As() const {

|

inline Packet<T> PacketBase::As() const {

|

||||||

|

|

@@ -259,19 +259,19 @@ struct First {

|

||||||

|

|

||||||

template <class T>

|

template <class T>

|

||||||

struct AddStatus {

|

struct AddStatus {

|

||||||

using type = StatusOr<T>;

|

using type = absl::StatusOr<T>;

|

||||||

};

|

};

|

||||||

template <class T>

|

template <class T>

|

||||||

struct AddStatus<StatusOr<T>> {

|

struct AddStatus<absl::StatusOr<T>> {

|

||||||

using type = StatusOr<T>;

|

using type = absl::StatusOr<T>;

|

||||||

};

|

};

|

||||||

template <>

|

template <>

|

||||||

struct AddStatus<Status> {

|

struct AddStatus<absl::Status> {

|

||||||

using type = Status;

|

using type = absl::Status;

|

||||||

};

|

};

|

||||||

template <>

|

template <>

|

||||||

struct AddStatus<void> {

|

struct AddStatus<void> {

|

||||||

using type = Status;

|

using type = absl::Status;

|

||||||

};

|

};

|

||||||

|

|

||||||

template <class R, class F, class... A>

|

template <class R, class F, class... A>

|

||||||

|

|

@@ -282,7 +282,7 @@ struct CallAndAddStatusImpl {

|

||||||

};

|

};

|

||||||

template <class F, class... A>

|

template <class F, class... A>

|

||||||

struct CallAndAddStatusImpl<void, F, A...> {

|

struct CallAndAddStatusImpl<void, F, A...> {

|

||||||

Status operator()(const F& f, A&&... a) {

|

absl::Status operator()(const F& f, A&&... a) {

|

||||||

f(std::forward<A>(a)...);

|

f(std::forward<A>(a)...);

|

||||||

return {};

|

return {};

|

||||||

}

|

}

|

||||||

|

|

|

||||||

|

|

@@ -88,10 +88,13 @@ class SafeIntStrongIntValidator {

|

||||||

|

|

||||||

// If the argument is floating point, we can do a simple check to make

|

// If the argument is floating point, we can do a simple check to make

|

||||||

// sure the value is in range. It is undefined behavior to convert to int

|

// sure the value is in range. It is undefined behavior to convert to int

|

||||||

// from a float that is out of range.

|

// from a float that is out of range. Since large integers will lose some

|

||||||

|

// precision when being converted to floating point, the integer max and min

|

||||||

|

// are explicitly converted back to floating point for this comparison, in

|

||||||

|

// order to satisfy compiler warnings.

|

||||||

if (std::is_floating_point<U>::value) {

|

if (std::is_floating_point<U>::value) {

|

||||||

if (arg < std::numeric_limits<T>::min() ||

|

if (arg < static_cast<U>(std::numeric_limits<T>::min()) ||

|

||||||

arg > std::numeric_limits<T>::max()) {

|

arg > static_cast<U>(std::numeric_limits<T>::max())) {

|

||||||

ErrorType::Error("SafeInt: init from out of bounds float", arg, "=");

|

ErrorType::Error("SafeInt: init from out of bounds float", arg, "=");

|

||||||

}

|

}

|

||||||

} else {

|

} else {

|

||||||

|

|

|

||||||

|

|
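Reviewer note: the comment added in the hunk above explains why the integer limits are cast to the floating-point type before the comparison. A minimal standalone sketch of that range check follows; `FitsInIntSketch` is a hypothetical name used only for illustration and is not part of MediaPipe.

```cpp
#include <cstdint>
#include <limits>
#include <type_traits>

// Hypothetical helper, not part of MediaPipe: returns true when the
// floating-point value `arg` is not below min(T) and not above max(T),
// with both limits first converted to the floating-point type U.
template <typename T, typename U>
bool FitsInIntSketch(U arg) {
  static_assert(std::is_integral<T>::value, "T must be an integer type");
  static_assert(std::is_floating_point<U>::value,
                "U must be a floating-point type");
  // The explicit casts mirror the diff: the conversion still happens, but
  // it is now spelled out instead of being an implicit int-to-float
  // conversion that compilers may warn about.
  return !(arg < static_cast<U>(std::numeric_limits<T>::min()) ||
           arg > static_cast<U>(std::numeric_limits<T>::max()));
}
```

For example, `FitsInIntSketch<std::int8_t>(128.0f)` is false while `FitsInIntSketch<std::int8_t>(127.0f)` is true. One caveat of this style of check: for `float` and `std::int32_t` the converted upper limit rounds up to 2^31, so the comparison alone does not reject exactly that value.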

@@ -94,7 +94,7 @@ const Packet& OutputStreamShard::Header() const {

|

||||||

// binary. This function can be defined in the .cc file because only two

|

// binary. This function can be defined in the .cc file because only two

|

||||||

// versions are ever instantiated, and all call sites are within this .cc file.

|

// versions are ever instantiated, and all call sites are within this .cc file.

|

||||||

template <typename T>

|

template <typename T>

|

||||||

Status OutputStreamShard::AddPacketInternal(T&& packet) {

|

absl::Status OutputStreamShard::AddPacketInternal(T&& packet) {

|

||||||

if (IsClosed()) {

|

if (IsClosed()) {

|

||||||

return mediapipe::FailedPreconditionErrorBuilder(MEDIAPIPE_LOC)

|

return mediapipe::FailedPreconditionErrorBuilder(MEDIAPIPE_LOC)

|

||||||

<< "Packet sent to closed stream \"" << Name() << "\".";

|

<< "Packet sent to closed stream \"" << Name() << "\".";

|

||||||

|

|

@@ -113,7 +113,7 @@ Status OutputStreamShard::AddPacketInternal(T&& packet) {

|

||||||

<< timestamp.DebugString();

|

<< timestamp.DebugString();

|

||||||

}

|

}

|

||||||

|

|

||||||

Status result = output_stream_spec_->packet_type->Validate(packet);

|

absl::Status result = output_stream_spec_->packet_type->Validate(packet);

|

||||||

if (!result.ok()) {

|

if (!result.ok()) {

|

||||||

return StatusBuilder(result, MEDIAPIPE_LOC).SetPrepend() << absl::StrCat(

|

return StatusBuilder(result, MEDIAPIPE_LOC).SetPrepend() << absl::StrCat(

|

||||||

"Packet type mismatch on calculator outputting to stream \"",

|

"Packet type mismatch on calculator outputting to stream \"",

|

||||||

|

|

@@ -132,14 +132,14 @@ Status OutputStreamShard::AddPacketInternal(T&& packet) {

|

||||||

}

|

}

|

||||||

|

|

||||||

void OutputStreamShard::AddPacket(const Packet& packet) {

|

void OutputStreamShard::AddPacket(const Packet& packet) {

|

||||||

Status status = AddPacketInternal(packet);

|

absl::Status status = AddPacketInternal(packet);

|

||||||

if (!status.ok()) {

|

if (!status.ok()) {

|

||||||

output_stream_spec_->TriggerErrorCallback(status);

|

output_stream_spec_->TriggerErrorCallback(status);

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

void OutputStreamShard::AddPacket(Packet&& packet) {

|

void OutputStreamShard::AddPacket(Packet&& packet) {

|

||||||

Status status = AddPacketInternal(std::move(packet));

|

absl::Status status = AddPacketInternal(std::move(packet));

|

||||||

if (!status.ok()) {

|

if (!status.ok()) {

|

||||||

output_stream_spec_->TriggerErrorCallback(status);

|

output_stream_spec_->TriggerErrorCallback(status);

|

||||||

}

|

}

|

||||||

|

|

|

||||||

|

|

@@ -59,8 +59,8 @@ absl::Status CombinedStatus(absl::string_view general_comment,

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

if (error_code == StatusCode::kOk) return OkStatus();

|

if (error_code == absl::StatusCode::kOk) return absl::OkStatus();

|

||||||

Status combined;

|

absl::Status combined;

|

||||||

combined = absl::Status(

|

combined = absl::Status(

|

||||||

error_code,

|

error_code,

|

||||||

absl::StrCat(general_comment, "\n", absl::StrJoin(errors, "\n")));

|

absl::StrCat(general_comment, "\n", absl::StrJoin(errors, "\n")));

|

||||||

|

|

|

||||||

|

|

@@ -241,9 +241,9 @@ class StaticMap {

|

||||||

#define DEFINE_MEDIAPIPE_TYPE_MAP(MapName, KeyType) \

|

#define DEFINE_MEDIAPIPE_TYPE_MAP(MapName, KeyType) \

|

||||||

class MapName : public type_map_internal::StaticMap<MapName, KeyType> {};

|

class MapName : public type_map_internal::StaticMap<MapName, KeyType> {};

|

||||||

// Defines a map from unique typeid number to MediaPipeTypeData.

|

// Defines a map from unique typeid number to MediaPipeTypeData.

|

||||||

DEFINE_MEDIAPIPE_TYPE_MAP(PacketTypeIdToMediaPipeTypeData, size_t);

|

DEFINE_MEDIAPIPE_TYPE_MAP(PacketTypeIdToMediaPipeTypeData, size_t)

|

||||||

// Defines a map from unique type string to MediaPipeTypeData.

|

// Defines a map from unique type string to MediaPipeTypeData.

|

||||||

DEFINE_MEDIAPIPE_TYPE_MAP(PacketTypeStringToMediaPipeTypeData, std::string);

|

DEFINE_MEDIAPIPE_TYPE_MAP(PacketTypeStringToMediaPipeTypeData, std::string)

|

||||||

|

|

||||||

// MEDIAPIPE_REGISTER_TYPE can be used to register a type.

|

// MEDIAPIPE_REGISTER_TYPE can be used to register a type.

|

||||||

// Convention:

|

// Convention:

|

||||||

|

|

|

||||||

|

|
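Reviewer note: the hunk above drops the trailing semicolon after each `DEFINE_MEDIAPIPE_TYPE_MAP(...)` use. The macro already expands to a complete class definition ending in `{};`, so a further `;` at the call site is an empty declaration, which is presumably what the change avoids (Clang can flag it under `-Wextra-semi`). A minimal sketch with hypothetical stand-in names (`StaticMapSketch`, `DEFINE_TYPE_MAP_SKETCH`):

```cpp
#include <cstddef>
#include <string>
#include <type_traits>

// Hypothetical stand-in for type_map_internal::StaticMap.
template <typename MapName, typename KeyType>
class StaticMapSketch {};

// Same shape as the macro in the hunk: the expansion already ends in `{};`.
#define DEFINE_TYPE_MAP_SKETCH(MapName, KeyType) \
  class MapName : public StaticMapSketch<MapName, KeyType> {};

// No trailing semicolon is needed at the use site.
DEFINE_TYPE_MAP_SKETCH(IdToDataSketch, std::size_t)
DEFINE_TYPE_MAP_SKETCH(NameToDataSketch, std::string)

static_assert(
    std::is_base_of<StaticMapSketch<IdToDataSketch, std::size_t>,
                    IdToDataSketch>::value,
    "the macro defines a class derived from the map template");
```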

@@ -211,6 +211,14 @@ GlTexture GlCalculatorHelper::CreateDestinationTexture(int width, int height,

|

||||||

return MapGpuBuffer(gpu_buffer, gpu_buffer.GetWriteView<GlTextureView>(0));

|

return MapGpuBuffer(gpu_buffer, gpu_buffer.GetWriteView<GlTextureView>(0));

|

||||||

}

|

}

|

||||||

|

|

||||||

|

GlTexture GlCalculatorHelper::CreateDestinationTexture(

|

||||||

|

const ImageFrame& image_frame) {

|

||||||

|

// TODO: ensure buffer pool is used when creating textures out of

|

||||||

|

// ImageFrame.

|

||||||

|

GpuBuffer gpu_buffer = GpuBufferCopyingImageFrame(image_frame);

|

||||||

|

return MapGpuBuffer(gpu_buffer, gpu_buffer.GetWriteView<GlTextureView>(0));

|

||||||

|

}

|

||||||

|

|

||||||

GlTexture GlCalculatorHelper::CreateSourceTexture(

|

GlTexture GlCalculatorHelper::CreateSourceTexture(

|

||||||

const mediapipe::Image& image) {

|

const mediapipe::Image& image) {

|

||||||

return CreateSourceTexture(image.GetGpuBuffer());

|

return CreateSourceTexture(image.GetGpuBuffer());

|

||||||

|

|

|

||||||

|

|

@@ -135,6 +135,12 @@ class GlCalculatorHelper {

|

||||||

// This is deprecated because: 1) it encourages the use of GlTexture as a

|

// This is deprecated because: 1) it encourages the use of GlTexture as a

|

||||||

// long-lived object; 2) it requires copying the ImageFrame's contents,

|

// long-lived object; 2) it requires copying the ImageFrame's contents,

|

||||||

// which may not always be necessary.

|

// which may not always be necessary.

|

||||||

|

//

|

||||||

|

// WARNING: do NOT use as a destination texture which will be sent to

|

||||||

|

// downstream calculators as it may lead to synchronization issues. The result

|

||||||

|

// is meant to be a short-lived object, local to a single calculator and

|

||||||

|

// single GL thread. Use `CreateDestinationTexture` instead, if you need a

|

||||||

|

// destination texture.

|

||||||

ABSL_DEPRECATED("Use `GpuBufferWithImageFrame`.")

|

ABSL_DEPRECATED("Use `GpuBufferWithImageFrame`.")

|

||||||

GlTexture CreateSourceTexture(const ImageFrame& image_frame);

|

GlTexture CreateSourceTexture(const ImageFrame& image_frame);

|

||||||

|

|

||||||

|

|

@@ -156,6 +162,14 @@ class GlCalculatorHelper {

|

||||||

int output_width, int output_height,

|

int output_width, int output_height,

|

||||||

GpuBufferFormat format = GpuBufferFormat::kBGRA32);

|

GpuBufferFormat format = GpuBufferFormat::kBGRA32);

|

||||||

|

|

||||||

|

// Creates a destination texture copying and uploading passed image frame.

|

||||||

|

//

|

||||||

|

// WARNING: mind that this function creates a new texture every time and

|

||||||

|

// doesn't use MediaPipe's gpu buffer pool.

|

||||||

|

// TODO: ensure buffer pool is used when creating textures out of

|

||||||

|

// ImageFrame.

|

||||||

|

GlTexture CreateDestinationTexture(const ImageFrame& image_frame);

|

||||||

|

|

||||||

// The OpenGL name of the output framebuffer.

|

// The OpenGL name of the output framebuffer.

|

||||||

GLuint framebuffer() const;

|

GLuint framebuffer() const;

|

||||||

|

|

||||||

|

|

@@ -196,7 +210,7 @@ class GlCalculatorHelper {

|

||||||

// This class should be the main way to interface with GL memory within a single

|

// This class should be the main way to interface with GL memory within a single

|

||||||

// calculator. This is the preferred way to utilize the memory pool inside of

|

// calculator. This is the preferred way to utilize the memory pool inside of

|

||||||

// the helper, because GlTexture manages efficiently releasing memory back into

|

// the helper, because GlTexture manages efficiently releasing memory back into

|

||||||

// the pool. A GPU backed Image can be extracted from the unerlying

|

// the pool. A GPU backed Image can be extracted from the underlying

|

||||||

// memory.

|

// memory.

|

||||||

class GlTexture {

|

class GlTexture {

|

||||||

public:

|

public:

|

||||||

|

|

|

||||||

|

|

@@ -65,7 +65,7 @@ static void SetThreadName(const char* name) {

|

||||||

#elif __APPLE__

|

#elif __APPLE__

|

||||||

pthread_setname_np(name);

|

pthread_setname_np(name);

|

||||||

#endif

|

#endif

|

||||||

ANNOTATE_THREAD_NAME(name);

|

ABSL_ANNOTATE_THREAD_NAME(name);

|

||||||

}

|

}

|

||||||

|

|

||||||

GlContext::DedicatedThread::DedicatedThread() {

|

GlContext::DedicatedThread::DedicatedThread() {

|

||||||

|

|

|

||||||

|

|

@@ -91,9 +91,9 @@ class GlTextureBuffer

|

||||||

// TODO: turn into a single call?

|

// TODO: turn into a single call?

|

||||||

GLuint name() const { return name_; }

|

GLuint name() const { return name_; }

|

||||||

GLenum target() const { return target_; }

|

GLenum target() const { return target_; }

|

||||||

int width() const { return width_; }

|

int width() const override { return width_; }

|

||||||

int height() const { return height_; }

|

int height() const override { return height_; }

|

||||||

GpuBufferFormat format() const { return format_; }

|

GpuBufferFormat format() const override { return format_; }

|

||||||

|

|

||||||

GlTextureView GetReadView(internal::types<GlTextureView>,

|

GlTextureView GetReadView(internal::types<GlTextureView>,

|

||||||

int plane) const override;

|

int plane) const override;

|

||||||

|

|

|

||||||

|

|
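Reviewer note: the hunk above adds `override` to `width()`, `height()`, and `format()`, matching the `GetReadView` declaration that already had it. A minimal sketch of what the specifier buys follows; `StorageSketch` and `TextureSketch` are hypothetical stand-ins, since the real base class of `GlTextureBuffer` is not shown in the hunk.

```cpp
// Hypothetical base interface; the actual base class is not shown in the diff.
class StorageSketch {
 public:
  virtual ~StorageSketch() = default;
  virtual int width() const = 0;
  virtual int height() const = 0;
};

// Hypothetical derived class playing the role of GlTextureBuffer.
class TextureSketch : public StorageSketch {
 public:
  TextureSketch(int width, int height) : width_(width), height_(height) {}

  // With `override`, the compiler rejects these declarations if they stop
  // matching a virtual function in the base (for example, if `const` is
  // dropped on either side), instead of silently declaring a new function.
  int width() const override { return width_; }
  int height() const override { return height_; }

 private:
  int width_;
  int height_;
};
```

Calls made through a `const StorageSketch&` dispatch to the derived accessors either way; the specifier only adds a compile-time check.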

@@ -71,11 +71,10 @@ absl::Status ImageFrameToGpuBufferCalculator::Process(CalculatorContext* cc) {

|

||||||

#else

|

#else

|

||||||

const auto& input = cc->Inputs().Index(0).Get<ImageFrame>();

|

const auto& input = cc->Inputs().Index(0).Get<ImageFrame>();

|

||||||

helper_.RunInGlContext([this, &input, &cc]() {

|

helper_.RunInGlContext([this, &input, &cc]() {

|

||||||

auto src = helper_.CreateSourceTexture(input);

|

GlTexture dst = helper_.CreateDestinationTexture(input);

|

||||||

auto output = src.GetFrame<GpuBuffer>();

|

std::unique_ptr<GpuBuffer> output = dst.GetFrame<GpuBuffer>();

|

||||||

glFlush();

|

|

||||||

cc->Outputs().Index(0).Add(output.release(), cc->InputTimestamp());

|

cc->Outputs().Index(0).Add(output.release(), cc->InputTimestamp());

|

||||||

src.Release();

|

dst.Release();

|

||||||

});

|

});

|

||||||

#endif // MEDIAPIPE_GPU_BUFFER_USE_CV_PIXEL_BUFFER

|

#endif // MEDIAPIPE_GPU_BUFFER_USE_CV_PIXEL_BUFFER

|

||||||

return absl::OkStatus();

|

return absl::OkStatus();

|

||||||

|

|

|

||||||

|

|

@@ -15,9 +15,12 @@

|

||||||

|

|

||||||

import dataclasses

|

import dataclasses

|

||||||

import tempfile

|

import tempfile

|

||||||

|

|

||||||

from typing import Optional

|

from typing import Optional

|

||||||

|

|

||||||

|

import tensorflow as tf

|

||||||

|

|

||||||

|

from official.common import distribute_utils

|

||||||

|

|

||||||

|

|

||||||

@dataclasses.dataclass

|

@dataclasses.dataclass

|

||||||

class BaseHParams:

|

class BaseHParams:

|

||||||

|

|

@@ -43,10 +46,10 @@ class BaseHParams:

|

||||||

documentation for more details:

|

documentation for more details:

|

||||||

https://www.tensorflow.org/api_docs/python/tf/distribute/Strategy.

|

https://www.tensorflow.org/api_docs/python/tf/distribute/Strategy.

|

||||||

num_gpus: How many GPUs to use at each worker with the

|

num_gpus: How many GPUs to use at each worker with the

|

||||||

DistributionStrategies API. The default is -1, which means utilize all

|

DistributionStrategies API. The default is 0.

|

||||||

available GPUs.

|

tpu: The TPU resource to be used for training. This should be either the

|

||||||

tpu: The Cloud TPU to use for training. This should be either the name used

|

name used when creating the Cloud TPU, a grpc://ip.address.of.tpu:8470

|

||||||

when creating the Cloud TPU, or a grpc://ip.address.of.tpu:8470 url.

|

url, or an empty string if using a local TPU.

|

||||||

"""

|

"""

|

||||||

|

|

||||||

# Parameters for train configuration

|

# Parameters for train configuration

|

||||||

|

|

@@ -63,5 +66,16 @@ class BaseHParams:

|

||||||

|

|

||||||

# Parameters for hardware acceleration

|

# Parameters for hardware acceleration

|

||||||

distribution_strategy: str = 'off'

|

distribution_strategy: str = 'off'

|

||||||

num_gpus: int = -1 # default value of -1 means use all available GPUs

|

num_gpus: int = 0

|

||||||

tpu: str = ''

|

tpu: str = ''

|

||||||

|

_strategy: tf.distribute.Strategy = dataclasses.field(init=False)

|

||||||

|

|

||||||

|

def __post_init__(self):

|

||||||

|

self._strategy = distribute_utils.get_distribution_strategy(

|

||||||

|

distribution_strategy=self.distribution_strategy,

|

||||||

|

num_gpus=self.num_gpus,

|

||||||

|

tpu_address=self.tpu,

|

||||||

|

)

|

||||||

|

|

||||||

|

def get_strategy(self):

|

||||||

|

return self._strategy

|

||||||

|

|

|

||||||

|

|

@@ -85,8 +85,10 @@ class ModelSpecTest(tf.test.TestCase):

|

||||||

steps_per_epoch=None,

|

steps_per_epoch=None,

|

||||||

shuffle=False,

|

shuffle=False,

|

||||||

distribution_strategy='off',

|

distribution_strategy='off',

|

||||||

num_gpus=-1,

|

num_gpus=0,

|

||||||

tpu=''))

|

tpu='',

|

||||||

|

),

|

||||||

|

)

|

||||||

|

|

||||||

def test_custom_bert_spec(self):

|

def test_custom_bert_spec(self):

|

||||||

custom_bert_classifier_options = (

|

custom_bert_classifier_options = (

|

||||||

|

|

|

||||||

|

|

@@ -311,9 +311,11 @@ class _BertClassifier(TextClassifier):

|

||||||

label_names: Sequence[str]):

|

label_names: Sequence[str]):

|

||||||

super().__init__(model_spec, hparams, label_names)

|

super().__init__(model_spec, hparams, label_names)

|

||||||

self._model_options = model_options

|

self._model_options = model_options

|

||||||

|

with self._hparams.get_strategy().scope():

|

||||||

self._loss_function = tf.keras.losses.SparseCategoricalCrossentropy()

|

self._loss_function = tf.keras.losses.SparseCategoricalCrossentropy()

|

||||||

self._metric_function = tf.keras.metrics.SparseCategoricalAccuracy(

|

self._metric_function = tf.keras.metrics.SparseCategoricalAccuracy(

|

||||||

"test_accuracy", dtype=tf.float32)

|

"test_accuracy", dtype=tf.float32

|

||||||

|

)

|

||||||

self._text_preprocessor: preprocessor.BertClassifierPreprocessor = None

|

self._text_preprocessor: preprocessor.BertClassifierPreprocessor = None

|

||||||

|

|

||||||

@classmethod

|

@classmethod

|

||||||

|

|

@@ -350,6 +352,7 @@ class _BertClassifier(TextClassifier):

|

||||||

"""

|

"""

|

||||||

(processed_train_data, processed_validation_data) = (

|

(processed_train_data, processed_validation_data) = (

|

||||||

self._load_and_run_preprocessor(train_data, validation_data))

|

self._load_and_run_preprocessor(train_data, validation_data))

|

||||||

|

with self._hparams.get_strategy().scope():

|

||||||

self._create_model()

|

self._create_model()

|

||||||

self._create_optimizer(processed_train_data)

|

self._create_optimizer(processed_train_data)

|

||||||

self._train_model(processed_train_data, processed_validation_data)

|

self._train_model(processed_train_data, processed_validation_data)

|

||||||

|

|

|

||||||

|

|

@@ -53,6 +53,7 @@ CALCULATORS_AND_GRAPHS = [

|

||||||

"//mediapipe/tasks/cc/text/text_classifier:text_classifier_graph",

|

"//mediapipe/tasks/cc/text/text_classifier:text_classifier_graph",

|

||||||

"//mediapipe/tasks/cc/text/text_embedder:text_embedder_graph",

|

"//mediapipe/tasks/cc/text/text_embedder:text_embedder_graph",

|

||||||

"//mediapipe/tasks/cc/vision/face_detector:face_detector_graph",

|

"//mediapipe/tasks/cc/vision/face_detector:face_detector_graph",

|

||||||

|

"//mediapipe/tasks/cc/vision/face_landmarker:face_landmarker_graph",

|

||||||

"//mediapipe/tasks/cc/vision/image_classifier:image_classifier_graph",

|

"//mediapipe/tasks/cc/vision/image_classifier:image_classifier_graph",

|

||||||

"//mediapipe/tasks/cc/vision/object_detector:object_detector_graph",

|

"//mediapipe/tasks/cc/vision/object_detector:object_detector_graph",

|

||||||

]

|

]

|

||||||

|

|

@ -80,6 +81,9 @@ strip_api_include_path_prefix(

|

||||||

"//mediapipe/tasks/ios/vision/face_detector:sources/MPPFaceDetector.h",

|

"//mediapipe/tasks/ios/vision/face_detector:sources/MPPFaceDetector.h",

|

||||||

"//mediapipe/tasks/ios/vision/face_detector:sources/MPPFaceDetectorOptions.h",

|

"//mediapipe/tasks/ios/vision/face_detector:sources/MPPFaceDetectorOptions.h",

|

||||||

"//mediapipe/tasks/ios/vision/face_detector:sources/MPPFaceDetectorResult.h",

|

"//mediapipe/tasks/ios/vision/face_detector:sources/MPPFaceDetectorResult.h",

|

||||||

|

"//mediapipe/tasks/ios/vision/face_landmarker:sources/MPPFaceLandmarker.h",

|

||||||

|

"//mediapipe/tasks/ios/vision/face_landmarker:sources/MPPFaceLandmarkerOptions.h",

|

||||||

|

"//mediapipe/tasks/ios/vision/face_landmarker:sources/MPPFaceLandmarkerResult.h",

|

||||||

"//mediapipe/tasks/ios/vision/image_classifier:sources/MPPImageClassifier.h",

|

"//mediapipe/tasks/ios/vision/image_classifier:sources/MPPImageClassifier.h",

|

||||||

"//mediapipe/tasks/ios/vision/image_classifier:sources/MPPImageClassifierOptions.h",

|

"//mediapipe/tasks/ios/vision/image_classifier:sources/MPPImageClassifierOptions.h",

|

||||||

"//mediapipe/tasks/ios/vision/image_classifier:sources/MPPImageClassifierResult.h",

|

"//mediapipe/tasks/ios/vision/image_classifier:sources/MPPImageClassifierResult.h",

|

||||||

|

|

@ -164,6 +168,9 @@ apple_static_xcframework(

|

||||||

":MPPFaceDetector.h",

|

":MPPFaceDetector.h",

|

||||||

":MPPFaceDetectorOptions.h",

|

":MPPFaceDetectorOptions.h",

|

||||||

":MPPFaceDetectorResult.h",

|

":MPPFaceDetectorResult.h",

|

||||||

|

":MPPFaceLandmarker.h",

|

||||||

|

":MPPFaceLandmarkerOptions.h",

|

||||||

|

":MPPFaceLandmarkerResult.h",

|

||||||

":MPPImageClassifier.h",

|

":MPPImageClassifier.h",

|

||||||

":MPPImageClassifierOptions.h",

|

":MPPImageClassifierOptions.h",

|

||||||

":MPPImageClassifierResult.h",

|

":MPPImageClassifierResult.h",

|

||||||

|

|

@ -173,6 +180,7 @@ apple_static_xcframework(

|

||||||

],

|

],

|

||||||

deps = [

|

deps = [

|

||||||

"//mediapipe/tasks/ios/vision/face_detector:MPPFaceDetector",

|

"//mediapipe/tasks/ios/vision/face_detector:MPPFaceDetector",

|

||||||

|

"//mediapipe/tasks/ios/vision/face_landmarker:MPPFaceLandmarker",

|

||||||

"//mediapipe/tasks/ios/vision/image_classifier:MPPImageClassifier",

|

"//mediapipe/tasks/ios/vision/image_classifier:MPPImageClassifier",

|

||||||

"//mediapipe/tasks/ios/vision/object_detector:MPPObjectDetector",

|

"//mediapipe/tasks/ios/vision/object_detector:MPPObjectDetector",

|

||||||

],

|

],

|

||||||

|

|

|

||||||

|

|

@ -4,9 +4,9 @@ Pod::Spec.new do |s|

|

||||||

s.authors = 'Google Inc.'

|

s.authors = 'Google Inc.'

|

||||||

s.license = { :type => 'Apache',:file => "LICENSE" }

|

s.license = { :type => 'Apache',:file => "LICENSE" }

|

||||||

s.homepage = 'https://github.com/google/mediapipe'

|

s.homepage = 'https://github.com/google/mediapipe'

|

||||||

s.source = { :http => '${MPP_DOWNLOAD_URL}' }

|

s.source = { :http => '${MPP_COMMON_DOWNLOAD_URL}' }

|

||||||

s.summary = 'MediaPipe Task Library - Text'

|

s.summary = 'MediaPipe Task Library - Text'

|

||||||

s.description = 'The Natural Language APIs of the MediaPipe Task Library'

|

s.description = 'The common libraries of the MediaPipe Task Library'

|

||||||

|

|

||||||

s.ios.deployment_target = '11.0'

|

s.ios.deployment_target = '11.0'

|

||||||

|

|

||||||

|

|

|

||||||

|

|

@ -4,7 +4,7 @@ Pod::Spec.new do |s|

|

||||||

s.authors = 'Google Inc.'

|

s.authors = 'Google Inc.'

|

||||||

s.license = { :type => 'Apache',:file => "LICENSE" }

|

s.license = { :type => 'Apache',:file => "LICENSE" }

|

||||||

s.homepage = 'https://github.com/google/mediapipe'

|

s.homepage = 'https://github.com/google/mediapipe'

|

||||||

s.source = { :http => '${MPP_DOWNLOAD_URL}' }

|

s.source = { :http => '${MPP_TEXT_DOWNLOAD_URL}' }

|

||||||

s.summary = 'MediaPipe Task Library - Text'

|

s.summary = 'MediaPipe Task Library - Text'

|

||||||

s.description = 'The Natural Language APIs of the MediaPipe Task Library'

|

s.description = 'The Natural Language APIs of the MediaPipe Task Library'

|

||||||

|

|

||||||

|

|

|

||||||

|

|

@ -4,7 +4,7 @@ Pod::Spec.new do |s|

|

||||||

s.authors = 'Google Inc.'

|

s.authors = 'Google Inc.'

|

||||||

s.license = { :type => 'Apache',:file => "LICENSE" }

|

s.license = { :type => 'Apache',:file => "LICENSE" }

|

||||||

s.homepage = 'https://github.com/google/mediapipe'

|

s.homepage = 'https://github.com/google/mediapipe'

|

||||||

s.source = { :http => '${MPP_DOWNLOAD_URL}' }

|

s.source = { :http => '${MPP_VISION_DOWNLOAD_URL}' }

|

||||||

s.summary = 'MediaPipe Task Library - Vision'

|

s.summary = 'MediaPipe Task Library - Vision'

|

||||||

s.description = 'The Vision APIs of the MediaPipe Task Library'

|

s.description = 'The Vision APIs of the MediaPipe Task Library'

|

||||||

|

|

||||||

|

|

|

||||||

71

mediapipe/tasks/ios/test/vision/face_landmarker/BUILD

Normal file


|

|

@ -0,0 +1,71 @@

|

||||||

|

load("@build_bazel_rules_apple//apple:ios.bzl", "ios_unit_test")

|

||||||

|

load(

|

||||||

|

"//mediapipe/framework/tool:ios.bzl",

|

||||||

|

"MPP_TASK_MINIMUM_OS_VERSION",

|

||||||

|

)

|

||||||

|

load(

|

||||||

|

"@org_tensorflow//tensorflow/lite:special_rules.bzl",

|

||||||

|

"tflite_ios_lab_runner",

|

||||||

|

)

|

||||||

|

|

||||||

|

package(default_visibility = ["//mediapipe/tasks:internal"])

|

||||||

|

|

||||||

|

licenses(["notice"])

|

||||||

|

|

||||||

|

# Default tags for filtering iOS targets. Targets are restricted to Apple platforms.

|

||||||

|

TFL_DEFAULT_TAGS = [

|

||||||

|

"apple",

|

||||||

|

]

|

||||||

|

|

||||||

|

# The following sanitizer tests are not supported by iOS test targets.

|

||||||

|

TFL_DISABLED_SANITIZER_TAGS = [

|

||||||

|

"noasan",

|

||||||

|

"nomsan",

|

||||||

|

"notsan",

|

||||||

|

]

|

||||||

|

|

||||||

|

objc_library(

|

||||||

|

name = "MPPFaceLandmarkerObjcTestLibrary",

|

||||||

|

testonly = 1,

|

||||||

|

srcs = ["MPPFaceLandmarkerTests.mm"],

|

||||||

|

copts = [

|

||||||

|

"-ObjC++",

|

||||||

|

"-std=c++17",

|

||||||

|

"-x objective-c++",

|

||||||

|

],

|

||||||

|

data = [

|

||||||

|

"//mediapipe/tasks/testdata/vision:test_images",

|

||||||

|

"//mediapipe/tasks/testdata/vision:test_models",

|

||||||

|

"//mediapipe/tasks/testdata/vision:test_protos",

|

||||||

|

],

|

||||||

|

deps = [

|

||||||

|

"//mediapipe/framework/formats:classification_cc_proto",

|

||||||

|

"//mediapipe/framework/formats:landmark_cc_proto",

|

||||||

|

"//mediapipe/framework/formats:matrix_data_cc_proto",

|

||||||

|

"//mediapipe/tasks/cc/vision/face_geometry/proto:face_geometry_cc_proto",

|

||||||

|

"//mediapipe/tasks/ios/common:MPPCommon",

|

||||||

|

"//mediapipe/tasks/ios/components/containers/utils:MPPClassificationResultHelpers",

|

||||||

|

"//mediapipe/tasks/ios/components/containers/utils:MPPDetectionHelpers",

|

||||||

|

"//mediapipe/tasks/ios/components/containers/utils:MPPLandmarkHelpers",

|

||||||

|

"//mediapipe/tasks/ios/test/vision/utils:MPPImageTestUtils",

|

||||||

|

"//mediapipe/tasks/ios/test/vision/utils:parse_proto_utils",

|

||||||

|

"//mediapipe/tasks/ios/vision/face_landmarker:MPPFaceLandmarker",

|

||||||

|

"//mediapipe/tasks/ios/vision/face_landmarker:MPPFaceLandmarkerResult",

|

||||||

|

"//third_party/apple_frameworks:UIKit",

|

||||||

|

] + select({

|

||||||

|

"//third_party:opencv_ios_sim_arm64_source_build": ["@ios_opencv_source//:opencv_xcframework"],

|

||||||

|

"//third_party:opencv_ios_arm64_source_build": ["@ios_opencv_source//:opencv_xcframework"],

|

||||||

|

"//third_party:opencv_ios_x86_64_source_build": ["@ios_opencv_source//:opencv_xcframework"],

|

||||||

|

"//conditions:default": ["@ios_opencv//:OpencvFramework"],

|

||||||

|

}),

|

||||||

|

)

|

||||||

|

|

||||||

|

ios_unit_test(

|

||||||

|

name = "MPPFaceLandmarkerObjcTest",

|

||||||

|

minimum_os_version = MPP_TASK_MINIMUM_OS_VERSION,

|

||||||

|

runner = tflite_ios_lab_runner("IOS_LATEST"),

|

||||||

|

tags = TFL_DEFAULT_TAGS + TFL_DISABLED_SANITIZER_TAGS,

|

||||||

|

deps = [

|

||||||

|

":MPPFaceLandmarkerObjcTestLibrary",

|

||||||

|

],

|

||||||

|

)

|

||||||

|

|

@ -0,0 +1,553 @@

|

||||||

|

// Copyright 2023 The MediaPipe Authors.

|

||||||

|

//

|

||||||

|

// Licensed under the Apache License, Version 2.0 (the "License");

|

||||||

|

// you may not use this file except in compliance with the License.

|

||||||

|

// You may obtain a copy of the License at

|

||||||

|

//

|

||||||

|

// http://www.apache.org/licenses/LICENSE-2.0

|

||||||

|

//

|

||||||

|

// Unless required by applicable law or agreed to in writing, software

|

||||||

|

// distributed under the License is distributed on an "AS IS" BASIS,

|

||||||

|

// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

||||||

|

// See the License for the specific language governing permissions and

|

||||||

|

// limitations under the License.

|

||||||

|

|

||||||

|

#import <Foundation/Foundation.h>

|

||||||

|

#import <UIKit/UIKit.h>

|

||||||

|

#import <XCTest/XCTest.h>

|

||||||

|

|

||||||

|

#include "mediapipe/framework/formats/classification.pb.h"

|

||||||

|

#include "mediapipe/framework/formats/landmark.pb.h"

|

||||||

|

#include "mediapipe/framework/formats/matrix_data.pb.h"

|

||||||

|

#include "mediapipe/tasks/cc/vision/face_geometry/proto/face_geometry.pb.h"

|

||||||

|

#import "mediapipe/tasks/ios/common/sources/MPPCommon.h"

|

||||||

|

#import "mediapipe/tasks/ios/components/containers/utils/sources/MPPClassificationResult+Helpers.h"

|

||||||

|

#import "mediapipe/tasks/ios/components/containers/utils/sources/MPPDetection+Helpers.h"

|

||||||

|

#import "mediapipe/tasks/ios/components/containers/utils/sources/MPPLandmark+Helpers.h"

|

||||||

|

#import "mediapipe/tasks/ios/test/vision/utils/sources/MPPImage+TestUtils.h"

|

||||||

|

#include "mediapipe/tasks/ios/test/vision/utils/sources/parse_proto_utils.h"

|

||||||

|

#import "mediapipe/tasks/ios/vision/face_landmarker/sources/MPPFaceLandmarker.h"

|

||||||

|

#import "mediapipe/tasks/ios/vision/face_landmarker/sources/MPPFaceLandmarkerResult.h"

|

||||||

|

|

||||||

|

using NormalizedLandmarkListProto = ::mediapipe::NormalizedLandmarkList;

|

||||||

|

using ClassificationListProto = ::mediapipe::ClassificationList;

|

||||||

|

using FaceGeometryProto = ::mediapipe::tasks::vision::face_geometry::proto::FaceGeometry;

|

||||||

|

using ::mediapipe::tasks::ios::test::vision::utils::get_proto_from_pbtxt;

|

||||||

|

|

||||||

|

static NSString *const kPbFileExtension = @"pbtxt";

|

||||||

|

|

||||||

|

typedef NSDictionary<NSString *, NSString *> ResourceFileInfo;

|

||||||

|

|

||||||

|

static ResourceFileInfo *const kPortraitImage =

|

||||||

|

@{@"name" : @"portrait", @"type" : @"jpg", @"orientation" : @(UIImageOrientationUp)};

|

||||||

|

static ResourceFileInfo *const kPortraitRotatedImage =

|

||||||

|

@{@"name" : @"portrait_rotated", @"type" : @"jpg", @"orientation" : @(UIImageOrientationRight)};

|

||||||

|

static ResourceFileInfo *const kCatImage = @{@"name" : @"cat", @"type" : @"jpg"};

|

||||||

|

static ResourceFileInfo *const kPortraitExpectedLandmarksName =

|

||||||

|

@{@"name" : @"portrait_expected_face_landmarks", @"type" : kPbFileExtension};

|

||||||

|

static ResourceFileInfo *const kPortraitExpectedBlendshapesName =

|

||||||

|

@{@"name" : @"portrait_expected_blendshapes", @"type" : kPbFileExtension};

|

||||||

|

static ResourceFileInfo *const kPortraitExpectedGeometryName =

|

||||||

|

@{@"name" : @"portrait_expected_face_geometry", @"type" : kPbFileExtension};

|

||||||

|

static NSString *const kFaceLandmarkerModelName = @"face_landmarker_v2";

|

||||||

|

static NSString *const kFaceLandmarkerWithBlendshapesModelName =

|

||||||

|

@"face_landmarker_v2_with_blendshapes";

|

||||||

|

static NSString *const kExpectedErrorDomain = @"com.google.mediapipe.tasks";

|

||||||

|

static NSString *const kLiveStreamTestsDictFaceLandmarkerKey = @"face_landmarker";

|

||||||

|

static NSString *const kLiveStreamTestsDictExpectationKey = @"expectation";

|

||||||

|

|

||||||

|

constexpr float kLandmarkErrorThreshold = 0.03f;

|

||||||

|

constexpr float kBlendshapesErrorThreshold = 0.1f;

|

||||||

|

constexpr float kFacialTransformationMatrixErrorThreshold = 0.2f;

|

||||||

|

|

||||||

|

#define AssertEqualErrors(error, expectedError) \

|

||||||

|

XCTAssertNotNil(error); \

|

||||||

|

XCTAssertEqualObjects(error.domain, expectedError.domain); \

|

||||||

|

XCTAssertEqual(error.code, expectedError.code); \

|

||||||

|

XCTAssertEqualObjects(error.localizedDescription, expectedError.localizedDescription)

|

||||||

|

|

||||||

|

@interface MPPFaceLandmarkerTests : XCTestCase <MPPFaceLandmarkerLiveStreamDelegate> {

|

||||||

|

NSDictionary *_liveStreamSucceedsTestDict;

|

||||||

|

NSDictionary *_outOfOrderTimestampTestDict;

|

||||||

|

}

|

||||||

|

@end

|

||||||

|

|

||||||

|

@implementation MPPFaceLandmarkerTests

|

||||||

|

|

||||||

|

#pragma mark General Tests

|

||||||

|

|

||||||

|

- (void)testCreateFaceLandmarkerWithMissingModelPathFails {

|

||||||

|

NSString *modelPath = [MPPFaceLandmarkerTests filePathWithName:@"" extension:@""];

|

||||||

|

|

||||||

|

NSError *error = nil;

|

||||||

|

MPPFaceLandmarker *faceLandmarker = [[MPPFaceLandmarker alloc] initWithModelPath:modelPath

|

||||||

|

error:&error];

|

||||||

|

XCTAssertNil(faceLandmarker);

|

||||||

|

|

||||||

|

NSError *expectedError = [NSError

|

||||||

|

errorWithDomain:kExpectedErrorDomain

|

||||||

|

code:MPPTasksErrorCodeInvalidArgumentError

|

||||||

|

userInfo:@{

|

||||||

|

NSLocalizedDescriptionKey :

|

||||||

|

@"INVALID_ARGUMENT: ExternalFile must specify at least one of 'file_content', "

|

||||||

|

@"'file_name', 'file_pointer_meta' or 'file_descriptor_meta'."

|

||||||

|

}];

|

||||||

|

AssertEqualErrors(error, expectedError);

|

||||||

|

}

|

||||||

|

|

||||||

|

#pragma mark Image Mode Tests

|

||||||

|

|

||||||

|

- (void)testDetectWithImageModeAndPotraitSucceeds {

|

||||||

|

NSString *modelPath = [MPPFaceLandmarkerTests filePathWithName:kFaceLandmarkerModelName

|

||||||

|

extension:@"task"];

|

||||||

|

MPPFaceLandmarker *faceLandmarker = [[MPPFaceLandmarker alloc] initWithModelPath:modelPath

|

||||||

|

error:nil];

|

||||||

|

NSArray<MPPNormalizedLandmark *> *expectedLandmarks =

|

||||||

|

[MPPFaceLandmarkerTests expectedLandmarksFromFileInfo:kPortraitExpectedLandmarksName];

|

||||||

|

[self assertResultsOfDetectInImageWithFileInfo:kPortraitImage

|

||||||

|

usingFaceLandmarker:faceLandmarker

|

||||||

|

containsExpectedLandmarks:expectedLandmarks

|

||||||

|

expectedBlendshapes:NULL

|

||||||

|

expectedTransformationMatrix:NULL];

|

||||||

|

}

|

||||||

|

|

||||||

|

- (void)testDetectWithImageModeAndPotraitAndFacialTransformationMatrixesSucceeds {

|

||||||

|

MPPFaceLandmarkerOptions *options =

|

||||||

|

[self faceLandmarkerOptionsWithModelName:kFaceLandmarkerModelName];

|

||||||

|

options.outputFacialTransformationMatrixes = YES;

|

||||||

|

MPPFaceLandmarker *faceLandmarker = [[MPPFaceLandmarker alloc] initWithOptions:options error:nil];

|

||||||

|

|

||||||

|

NSArray<MPPNormalizedLandmark *> *expectedLandmarks =

|

||||||

|

[MPPFaceLandmarkerTests expectedLandmarksFromFileInfo:kPortraitExpectedLandmarksName];

|

||||||

|

MPPTransformMatrix *expectedTransformationMatrix = [MPPFaceLandmarkerTests

|

||||||

|

expectedTransformationMatrixFromFileInfo:kPortraitExpectedGeometryName];

|

||||||

|

[self assertResultsOfDetectInImageWithFileInfo:kPortraitImage

|

||||||

|

usingFaceLandmarker:faceLandmarker

|

||||||

|

containsExpectedLandmarks:expectedLandmarks

|

||||||

|

expectedBlendshapes:NULL

|

||||||

|

expectedTransformationMatrix:expectedTransformationMatrix];

|

||||||

|

}

|

||||||

|

|

||||||

|

- (void)testDetectWithImageModeAndNoFaceSucceeds {

|

||||||

|

NSString *modelPath = [MPPFaceLandmarkerTests filePathWithName:kFaceLandmarkerModelName

|

||||||

|

extension:@"task"];

|

||||||

|

MPPFaceLandmarker *faceLandmarker = [[MPPFaceLandmarker alloc] initWithModelPath:modelPath

|

||||||

|

error:nil];

|

||||||

|

XCTAssertNotNil(faceLandmarker);

|

||||||

|

|

||||||

|

NSError *error;

|

||||||

|

MPPImage *mppImage = [self imageWithFileInfo:kCatImage];

|

||||||

|

MPPFaceLandmarkerResult *faceLandmarkerResult = [faceLandmarker detectInImage:mppImage

|

||||||

|

error:&error];

|

||||||

|

XCTAssertNil(error);

|

||||||

|

XCTAssertNotNil(faceLandmarkerResult);

|

||||||

|

XCTAssertEqualObjects(faceLandmarkerResult.faceLandmarks, [NSArray array]);

|

||||||

|

XCTAssertEqualObjects(faceLandmarkerResult.faceBlendshapes, [NSArray array]);

|

||||||

|

XCTAssertEqualObjects(faceLandmarkerResult.facialTransformationMatrixes, [NSArray array]);

|

||||||

|

}

|

||||||

|

|

||||||

|

#pragma mark Video Mode Tests

|

||||||

|

|

||||||

|

- (void)testDetectWithVideoModeAndPotraitSucceeds {

|

||||||

|

MPPFaceLandmarkerOptions *options =

|

||||||

|

[self faceLandmarkerOptionsWithModelName:kFaceLandmarkerModelName];

|

||||||

|

options.runningMode = MPPRunningModeVideo;

|

||||||

|

MPPFaceLandmarker *faceLandmarker = [[MPPFaceLandmarker alloc] initWithOptions:options error:nil];

|

||||||

|

|

||||||

|

MPPImage *image = [self imageWithFileInfo:kPortraitImage];

|

||||||

|

NSArray<MPPNormalizedLandmark *> *expectedLandmarks =

|

||||||

|

[MPPFaceLandmarkerTests expectedLandmarksFromFileInfo:kPortraitExpectedLandmarksName];

|

||||||

|

for (int i = 0; i < 3; i++) {

|

||||||

|

MPPFaceLandmarkerResult *faceLandmarkerResult = [faceLandmarker detectInVideoFrame:image

|

||||||

|

timestampInMilliseconds:i

|

||||||

|

error:nil];

|

||||||

|

[self assertFaceLandmarkerResult:faceLandmarkerResult

|

||||||

|

containsExpectedLandmarks:expectedLandmarks

|

||||||

|

expectedBlendshapes:NULL

|

||||||

|

expectedTransformationMatrix:NULL];

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

#pragma mark Live Stream Mode Tests

|

||||||

|

|

||||||

|

- (void)testDetectWithLiveStreamModeAndPotraitSucceeds {

|

||||||

|

NSInteger iterationCount = 100;

|

||||||

|

|

||||||

|

// Because of flow limiting, the callback might be invoked fewer than `iterationCount` times. An

|

||||||

|

// normal expectation will fail if expectation.fulfill() is not called

|

||||||

|

// `expectation.expectedFulfillmentCount` times. If `expectation.isInverted = true`, the test will

|

||||||

|

// only succeed if expectation is not fulfilled for the specified `expectedFulfillmentCount`.

|

||||||

|

// Since it is not possible to predict how many times the expectation is supposed to be

|

||||||

|

// fulfilled, `expectation.expectedFulfillmentCount` = `iterationCount` + 1 and

|

||||||

|

// `expectation.isInverted = true` ensures that the test succeeds if the expectation is fulfilled <=

|

||||||

|

// `iterationCount` times.

|

||||||

|

XCTestExpectation *expectation = [[XCTestExpectation alloc]

|

||||||

|

initWithDescription:@"detectWithLiveStream"];

|

||||||

|

expectation.expectedFulfillmentCount = iterationCount + 1;

|

||||||

|

expectation.inverted = YES;

|

||||||

|

|

||||||

|

MPPFaceLandmarkerOptions *options =

|

||||||

|

[self faceLandmarkerOptionsWithModelName:kFaceLandmarkerModelName];

|

||||||

|

options.runningMode = MPPRunningModeLiveStream;

|

||||||

|

options.faceLandmarkerLiveStreamDelegate = self;

|

||||||

|

|

||||||

|

MPPFaceLandmarker *faceLandmarker = [[MPPFaceLandmarker alloc] initWithOptions:options error:nil];

|

||||||

|

MPPImage *image = [self imageWithFileInfo:kPortraitImage];

|

||||||

|

|

||||||

|

_liveStreamSucceedsTestDict = @{

|

||||||

|

kLiveStreamTestsDictFaceLandmarkerKey : faceLandmarker,

|

||||||

|

kLiveStreamTestsDictExpectationKey : expectation

|

||||||

|

};

|

||||||

|

|

||||||

|

for (int i = 0; i < iterationCount; i++) {

|

||||||

|

XCTAssertTrue([faceLandmarker detectAsyncInImage:image timestampInMilliseconds:i error:nil]);

|

||||||

|

}

|

||||||

|

|

||||||

|

NSTimeInterval timeout = 0.5f;

|

||||||

|

[self waitForExpectations:@[ expectation ] timeout:timeout];

|

||||||

|

}
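The comment in the test above describes why the expectation is inverted with a fulfillment count of `iterationCount + 1`. As a rough illustration only, the Python sketch below models that reasoning. The `Expectation` class and `run_live_stream` helper are hypothetical stand-ins, not part of XCTest or MediaPipe, and the wait logic is a simplification that follows the comment's description rather than XCTest's actual implementation.

```python
class Expectation:
    """Hypothetical, simplified model of an XCTest-style expectation."""

    def __init__(self, expected_fulfillment_count=1, inverted=False):
        self.expected_fulfillment_count = expected_fulfillment_count
        self.inverted = inverted
        self.fulfill_count = 0

    def fulfill(self):
        # Called once per delivered live stream callback.
        self.fulfill_count += 1

    def wait_succeeds(self):
        """Outcome of waiting, evaluated after the timeout has elapsed."""
        reached = self.fulfill_count >= self.expected_fulfillment_count
        # An inverted expectation passes only if the count was NOT reached.
        return not reached if self.inverted else reached


def run_live_stream(iteration_count, callbacks_delivered):
    """Simulate sending `iteration_count` frames with flow limiting.

    `callbacks_delivered` is the (unpredictable) number of callbacks
    that actually fire; it is an input here purely for illustration.
    """
    expectation = Expectation(iteration_count + 1, inverted=True)
    for _ in range(callbacks_delivered):
        expectation.fulfill()
    return expectation.wait_succeeds()
```

Under this model, any number of callbacks up to `iteration_count` lets the wait succeed, and only an unexpected extra callback makes it fail.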

|

||||||

|

|

||||||

|

- (void)testDetectWithOutOfOrderTimestampsAndLiveStreamModeFails {

|

||||||

|

MPPFaceLandmarkerOptions *options =

|

||||||

|

[self faceLandmarkerOptionsWithModelName:kFaceLandmarkerModelName];

|

||||||

|

options.runningMode = MPPRunningModeLiveStream;

|

||||||

|

options.faceLandmarkerLiveStreamDelegate = self;

|

||||||

|

|

||||||

|

XCTestExpectation *expectation = [[XCTestExpectation alloc]

|

||||||

|

initWithDescription:@"detectWithOutOfOrderTimestampsAndLiveStream"];

|

||||||

|

expectation.expectedFulfillmentCount = 1;

|

||||||

|

|

||||||

|

MPPFaceLandmarker *faceLandmarker = [[MPPFaceLandmarker alloc] initWithOptions:options error:nil];

|

||||||

|

_outOfOrderTimestampTestDict = @{

|

||||||

|

kLiveStreamTestsDictFaceLandmarkerKey : faceLandmarker,

|

||||||

|

kLiveStreamTestsDictExpectationKey : expectation

|

||||||

|

};

|

||||||

|

|

||||||

|

MPPImage *image = [self imageWithFileInfo:kPortraitImage];

|

||||||

|

XCTAssertTrue([faceLandmarker detectAsyncInImage:image timestampInMilliseconds:1 error:nil]);

|

||||||

|

|

||||||

|

NSError *error;

|

||||||

|

XCTAssertFalse([faceLandmarker detectAsyncInImage:image timestampInMilliseconds:0 error:&error]);

|

||||||

|

|

||||||

|

NSError *expectedError =

|

||||||

|

[NSError errorWithDomain:kExpectedErrorDomain

|

||||||

|

code:MPPTasksErrorCodeInvalidArgumentError

|

||||||

|

userInfo:@{

|

||||||

|

NSLocalizedDescriptionKey :

|

||||||

|

@"INVALID_ARGUMENT: Input timestamp must be monotonically increasing."

|

||||||

|

}];

|

||||||

|

AssertEqualErrors(error, expectedError);

|

||||||

|

|

||||||

|

NSTimeInterval timeout = 0.5f;

|

||||||

|

[self waitForExpectations:@[ expectation ] timeout:timeout];

|

||||||

|

}
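The out-of-order test above sends timestamp 1 followed by timestamp 0 and expects the second call to be rejected. A minimal Python sketch of such a check follows. `TimestampGuard` is a hypothetical name, and treating an equal timestamp as an error is an assumption here, since the test only exercises a decreasing timestamp.

```python
class TimestampGuard:
    """Hypothetical helper that rejects non-increasing timestamps."""

    def __init__(self):
        self._last_timestamp_ms = None

    def check(self, timestamp_ms):
        # Assumption: timestamps must be strictly increasing, so a
        # repeated timestamp is rejected as well as a smaller one.
        if (self._last_timestamp_ms is not None
                and timestamp_ms <= self._last_timestamp_ms):
            raise ValueError(
                "INVALID_ARGUMENT: Input timestamp must be "
                "monotonically increasing.")
        self._last_timestamp_ms = timestamp_ms


def accepts(guard, timestamp_ms):
    """Return True if the guard accepts the timestamp, False otherwise."""
    try:
        guard.check(timestamp_ms)
        return True
    except ValueError:
        return False
```

Mirroring the test, a guard that has accepted timestamp 1 would then refuse timestamp 0.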

|

||||||

|

|

||||||

|

#pragma mark Running Mode Tests

|

||||||

|

|

||||||

|

- (void)testCreateFaceLandmarkerFailsWithDelegateInNonLiveStreamMode {

|

||||||

|

MPPRunningMode runningModesToTest[] = {MPPRunningModeImage, MPPRunningModeVideo};

|

||||||

|

for (int i = 0; i < sizeof(runningModesToTest) / sizeof(runningModesToTest[0]); i++) {

|

||||||

|

MPPFaceLandmarkerOptions *options =

|

||||||

|

[self faceLandmarkerOptionsWithModelName:kFaceLandmarkerModelName];

|

||||||

|

|

||||||

|

options.runningMode = runningModesToTest[i];

|

||||||

|

options.faceLandmarkerLiveStreamDelegate = self;

|

||||||

|

|

||||||

|

[self

|

||||||

|

assertCreateFaceLandmarkerWithOptions:options

|

||||||

|

failsWithExpectedError:

|

||||||

|

[NSError errorWithDomain:kExpectedErrorDomain

|

||||||

|

code:MPPTasksErrorCodeInvalidArgumentError

|

||||||

|

userInfo:@{

|

||||||

|

NSLocalizedDescriptionKey :

|

||||||

|

@"The vision task is in image or video mode. The "

|

||||||

|

@"delegate must not be set in the task's options."

|

||||||

|

}]];

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

|

- (void)testCreateFaceLandmarkerFailsWithMissingDelegateInLiveStreamMode {

|

||||||

|

MPPFaceLandmarkerOptions *options =

|

||||||

|

[self faceLandmarkerOptionsWithModelName:kFaceLandmarkerModelName];

|

||||||

|

options.runningMode = MPPRunningModeLiveStream;

|

||||||

|

|

||||||

|

[self assertCreateFaceLandmarkerWithOptions:options

|

||||||

|

failsWithExpectedError:

|

||||||

|

[NSError errorWithDomain:kExpectedErrorDomain

|

||||||

|

code:MPPTasksErrorCodeInvalidArgumentError

|

||||||

|

userInfo:@{

|

||||||

|

NSLocalizedDescriptionKey :

|

||||||

|

@"The vision task is in live stream mode. An "

|

||||||

|

@"object must be set as the delegate of the task "

|

||||||

|

@"in its options to ensure asynchronous delivery "

|

||||||

|

@"of results."

|

||||||

|

}]];

|

||||||

|

}
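The two creation tests above encode a simple rule: a delegate is required in live stream mode and forbidden in image or video mode. The Python sketch below restates that rule using the error messages from the tests. `RunningMode` and `validate_options` are hypothetical names for illustration and do not reflect how the iOS task library implements the check.

```python
from enum import Enum


class RunningMode(Enum):
    IMAGE = "image"
    VIDEO = "video"
    LIVE_STREAM = "live_stream"


def validate_options(running_mode, delegate):
    """Raise ValueError if the delegate does not match the running mode."""
    if running_mode is RunningMode.LIVE_STREAM:
        if delegate is None:
            raise ValueError(
                "The vision task is in live stream mode. An object must be "
                "set as the delegate of the task in its options to ensure "
                "asynchronous delivery of results.")
    elif delegate is not None:
        raise ValueError(
            "The vision task is in image or video mode. The delegate must "
            "not be set in the task's options.")


def is_valid(running_mode, delegate):
    """Convenience wrapper returning a bool instead of raising."""
    try:
        validate_options(running_mode, delegate)
        return True
    except ValueError:
        return False
```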

|

||||||

|

|

||||||

|

- (void)testDetectFailsWithCallingWrongAPIInImageMode {

|

||||||

|

MPPFaceLandmarkerOptions *options =

|

||||||

|

[self faceLandmarkerOptionsWithModelName:kFaceLandmarkerModelName];

|

||||||

|

MPPFaceLandmarker *faceLandmarker = [[MPPFaceLandmarker alloc] initWithOptions:options error:nil];

|

||||||

|

|

||||||

|

MPPImage *image = [self imageWithFileInfo:kPortraitImage];

|

||||||

|

|

||||||

|

NSError *liveStreamAPICallError;

|

||||||

|

XCTAssertFalse([faceLandmarker detectAsyncInImage:image

|