Merge pull request #4118 from kuaashish:master

PiperOrigin-RevId: 513364683


commit 82ee00f25d

@@ -60,7 +60,7 @@ The second approach allows up to [`max_in_flight`] invocations of the
 packets from [`CalculatorBase::Process`] are automatically ordered by timestamp
 before they are passed along to downstream calculators.
 
-With either aproach, you must be aware that the calculator running in parallel
+With either approach, you must be aware that the calculator running in parallel
 cannot maintain internal state in the same way as a normal sequential
 calculator.
 

@@ -38,8 +38,8 @@ If you open a GitHub issue, here is our policy:
 - **OS Platform and Distribution (e.g., Linux Ubuntu 16.04)**:
 - **Mobile device (e.g. iPhone 8, Pixel 2, Samsung Galaxy) if the issue happens on mobile device**:
 - **Bazel version**:
-- **Android Studio, NDK, SDK versions (if issue is related to building in mobile dev enviroment)**:
-- **Xcode & Tulsi version (if issue is related to building in mobile dev enviroment)**:
+- **Android Studio, NDK, SDK versions (if issue is related to building in mobile dev environment)**:
+- **Xcode & Tulsi version (if issue is related to building in mobile dev environment)**:
 - **Exact steps to reproduce**:
 
 ### Describe the problem

@@ -44,7 +44,7 @@ snippets.
 
 | Browser | Platform | Notes |
 | ------- | ----------------------- | -------------------------------------- |
-| Chrome | Android / Windows / Mac | Pixel 4 and older unsupported. Fuschia |
+| Chrome | Android / Windows / Mac | Pixel 4 and older unsupported. Fuchsia |
 | | | unsupported. |
 | Chrome | iOS | Camera unavailable in Chrome on iOS. |
 | Safari | iPad/iPhone/Mac | iOS and Safari on iPad / iPhone / |

@@ -66,7 +66,7 @@ WARNING: Download from https://storage.googleapis.com/mirror.tensorflow.org/gith
 ```
 
 usually indicates that Bazel fails to download necessary dependency repositories
-that MediaPipe needs. MedaiPipe has several dependency repositories that are
+that MediaPipe needs. MediaPipe has several dependency repositories that are
 hosted by Google sites. In some regions, you may need to set up a network proxy
 or use a VPN to access those resources. You may also need to append
 `--host_jvm_args "-DsocksProxyHost=<ip address> -DsocksProxyPort=<port number>"`

@@ -143,7 +143,7 @@ about the model in this [paper](https://arxiv.org/abs/2006.10962).
 The [Face Landmark Model](#face-landmark-model) performs a single-camera face landmark
 detection in the screen coordinate space: the X- and Y- coordinates are
 normalized screen coordinates, while the Z coordinate is relative and is scaled
-as the X coodinate under the
+as the X coordinate under the
 [weak perspective projection camera model](https://en.wikipedia.org/wiki/3D_projection#Weak_perspective_projection).
 This format is well-suited for some applications, however it does not directly
 enable the full spectrum of augmented reality (AR) features like aligning a

@@ -48,7 +48,7 @@ camera, in real-time, without the need for specialized hardware. Through use of
 iris landmarks, the solution is also able to determine the metric distance
 between the subject and the camera with relative error less than 10%. Note that
 iris tracking does not infer the location at which people are looking, nor does
-it provide any form of identity recognition. With the cross-platfrom capability
+it provide any form of identity recognition. With the cross-platform capability
 of the MediaPipe framework, MediaPipe Iris can run on most modern
 [mobile phones](#mobile), [desktops/laptops](#desktop) and even on the
 [web](#web).

@@ -109,7 +109,7 @@ You can also find more details in this
 ### Iris Landmark Model
 

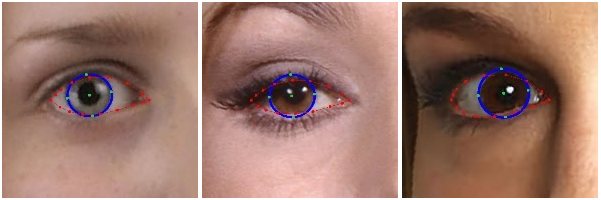

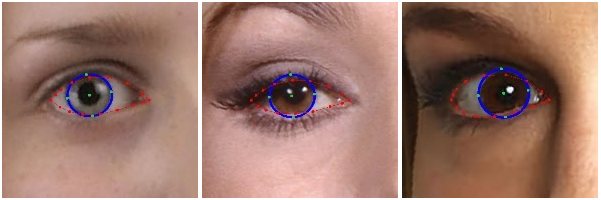

 The iris model takes an image patch of the eye region and estimates both the eye
-landmarks (along the eyelid) and iris landmarks (along ths iris contour). You
+landmarks (along the eyelid) and iris landmarks (along this iris contour). You
 can find more details in this [paper](https://arxiv.org/abs/2006.11341).
 

@@ -95,7 +95,7 @@ process new data sets, in the documentation of
 
 MediaSequence uses SequenceExamples as the format of both inputs and
 outputs. Annotations are encoded as inputs in a SequenceExample of metadata
-that defines the labels and the path to the cooresponding video file. This
+that defines the labels and the path to the corresponding video file. This
 metadata is passed as input to the C++ `media_sequence_demo` binary, and the
 output is a SequenceExample filled with images and annotations ready for
 model training.

@@ -180,7 +180,7 @@ and a
 The detection subgraph performs ML inference only once every few frames to
 reduce computation load, and decodes the output tensor to a FrameAnnotation that
 contains nine keypoints: the 3D bounding box's center and its eight vertices.
-The tracking subgraph runs every frame, using the box traker in
+The tracking subgraph runs every frame, using the box tracker in
 [MediaPipe Box Tracking](./box_tracking.md) to track the 2D box tightly
 enclosing the projection of the 3D bounding box, and lifts the tracked 2D
 keypoints to 3D with

@@ -623,7 +623,7 @@ z_ndc = 1 / Z
 
 ### Pixel Space
 
-In this API we set upper-left coner of an image as the origin of pixel
+In this API we set upper-left corner of an image as the origin of pixel
 coordinate. One can convert from NDC to pixel space as follows:
 
 ```
