Merge branch 'google:master' into master

commit

6e7018b826

11

README.md

|

|

@@ -19,6 +19,17 @@ ML solutions for live and streaming media.

|

||||||

|

|

|

|

||||||

***Ready-to-use solutions***: *Cutting-edge ML solutions demonstrating full power of the framework* | ***Free and open source***: *Framework and solutions both under Apache 2.0, fully extensible and customizable*

|

***Ready-to-use solutions***: *Cutting-edge ML solutions demonstrating full power of the framework* | ***Free and open source***: *Framework and solutions both under Apache 2.0, fully extensible and customizable*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

|

**Attention:** *Thanks for your interest in MediaPipe! We are moving to

|

||||||

|

[https://developers.google.com/mediapipe](https://developers.google.com/mediapipe)

|

||||||

|

as the primary developer documentation

|

||||||

|

site for MediaPipe starting April 3, 2023.*

|

||||||

|

|

||||||

|

*This notice and web page will be removed on April 3, 2023.*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

## ML solutions in MediaPipe

|

## ML solutions in MediaPipe

|

||||||

|

|

||||||

Face Detection | Face Mesh | Iris | Hands | Pose | Holistic

|

Face Detection | Face Mesh | Iris | Hands | Pose | Holistic

|

||||||

|

|

|

||||||

13

docs/_layouts/forward.html

Normal file

|

|

@@ -0,0 +1,13 @@

|

||||||

|

<html lang="en">

|

||||||

|

<head>

|

||||||

|

<meta charset="utf-8"/>

|

||||||

|

<meta http-equiv="refresh" content="0;url={{ page.target }}"/>

|

||||||

|

<link rel="canonical" href="{{ page.target }}"/>

|

||||||

|

<title>Redirecting</title>

|

||||||

|

</head>

|

||||||

|

<body>

|

||||||

|

<p>This page now lives on https://developers.google.com/mediapipe/. If you aren't automatically

|

||||||

|

redirected, follow this

|

||||||

|

<a href="{{ page.target }}">link</a>.</p>

|

||||||

|

</body>

|

||||||

|

</html>

|

||||||

|

|

@@ -593,3 +593,105 @@ CalculatorGraphConfig BuildGraph() {

|

||||||

return graph.GetConfig();

|

return graph.GetConfig();

|

||||||

}

|

}

|

||||||

```

|

```

|

||||||

|

|

||||||

|

### Separate nodes for better readability

|

||||||

|

|

||||||

|

```c++ {.bad}

|

||||||

|

CalculatorGraphConfig BuildGraph() {

|

||||||

|

Graph graph;

|

||||||

|

|

||||||

|

// Inputs.

|

||||||

|

Stream<A> a = graph.In(0).Cast<A>();

|

||||||

|

auto& node1 = graph.AddNode("Calculator1");

|

||||||

|

a.ConnectTo(node1.In("INPUT"));

|

||||||

|

Stream<B> b = node1.Out("OUTPUT").Cast<B>();

|

||||||

|

auto& node2 = graph.AddNode("Calculator2");

|

||||||

|

b.ConnectTo(node2.In("INPUT"));

|

||||||

|

Stream<C> c = node2.Out("OUTPUT").Cast<C>();

|

||||||

|

auto& node3 = graph.AddNode("Calculator3");

|

||||||

|

b.ConnectTo(node3.In("INPUT_B"));

|

||||||

|

c.ConnectTo(node3.In("INPUT_C"));

|

||||||

|

Stream<D> d = node3.Out("OUTPUT").Cast<D>();

|

||||||

|

auto& node4 = graph.AddNode("Calculator4");

|

||||||

|

b.ConnectTo(node4.In("INPUT_B"));

|

||||||

|

c.ConnectTo(node4.In("INPUT_C"));

|

||||||

|

d.ConnectTo(node4.In("INPUT_D"));

|

||||||

|

Stream<E> e = node4.Out("OUTPUT").Cast<E>();

|

||||||

|

// Outputs.

|

||||||

|

b.SetName("b").ConnectTo(graph.Out(0));

|

||||||

|

c.SetName("c").ConnectTo(graph.Out(1));

|

||||||

|

d.SetName("d").ConnectTo(graph.Out(2));

|

||||||

|

e.SetName("e").ConnectTo(graph.Out(3));

|

||||||

|

|

||||||

|

return graph.GetConfig();

|

||||||

|

}

|

||||||

|

```

|

||||||

|

|

||||||

|

In the above code, it can be hard to grasp the idea where each node begins and

|

||||||

|

ends. To improve this and help your code readers, you can simply have blank

|

||||||

|

lines before and after each node:

|

||||||

|

|

||||||

|

```c++ {.good}

|

||||||

|

CalculatorGraphConfig BuildGraph() {

|

||||||

|

Graph graph;

|

||||||

|

|

||||||

|

// Inputs.

|

||||||

|

Stream<A> a = graph.In(0).Cast<A>();

|

||||||

|

|

||||||

|

auto& node1 = graph.AddNode("Calculator1");

|

||||||

|

a.ConnectTo(node1.In("INPUT"));

|

||||||

|

Stream<B> b = node1.Out("OUTPUT").Cast<B>();

|

||||||

|

|

||||||

|

auto& node2 = graph.AddNode("Calculator2");

|

||||||

|

b.ConnectTo(node2.In("INPUT"));

|

||||||

|

Stream<C> c = node2.Out("OUTPUT").Cast<C>();

|

||||||

|

|

||||||

|

auto& node3 = graph.AddNode("Calculator3");

|

||||||

|

b.ConnectTo(node3.In("INPUT_B"));

|

||||||

|

c.ConnectTo(node3.In("INPUT_C"));

|

||||||

|

Stream<D> d = node3.Out("OUTPUT").Cast<D>();

|

||||||

|

|

||||||

|

auto& node4 = graph.AddNode("Calculator4");

|

||||||

|

b.ConnectTo(node4.In("INPUT_B"));

|

||||||

|

c.ConnectTo(node4.In("INPUT_C"));

|

||||||

|

d.ConnectTo(node4.In("INPUT_D"));

|

||||||

|

Stream<E> e = node4.Out("OUTPUT").Cast<E>();

|

||||||

|

|

||||||

|

// Outputs.

|

||||||

|

b.SetName("b").ConnectTo(graph.Out(0));

|

||||||

|

c.SetName("c").ConnectTo(graph.Out(1));

|

||||||

|

d.SetName("d").ConnectTo(graph.Out(2));

|

||||||

|

e.SetName("e").ConnectTo(graph.Out(3));

|

||||||

|

|

||||||

|

return graph.GetConfig();

|

||||||

|

}

|

||||||

|

```

|

||||||

|

|

||||||

|

Also, the above representation matches `CalculatorGraphConfig` proto

|

||||||

|

representation better.

|

||||||

|

|

||||||

|

If you extract nodes into utility functions, they are scoped within functions

|

||||||

|

already and it's clear where they begin and end, so it's completely fine to

|

||||||

|

have:

|

||||||

|

|

||||||

|

```c++ {.good}

|

||||||

|

CalculatorGraphConfig BuildGraph() {

|

||||||

|

Graph graph;

|

||||||

|

|

||||||

|

// Inputs.

|

||||||

|

Stream<A> a = graph.In(0).Cast<A>();

|

||||||

|

|

||||||

|

Stream<B> b = RunCalculator1(a, graph);

|

||||||

|

Stream<C> c = RunCalculator2(b, graph);

|

||||||

|

Stream<D> d = RunCalculator3(b, c, graph);

|

||||||

|

Stream<E> e = RunCalculator4(b, c, d, graph);

|

||||||

|

|

||||||

|

// Outputs.

|

||||||

|

b.SetName("b").ConnectTo(graph.Out(0));

|

||||||

|

c.SetName("c").ConnectTo(graph.Out(1));

|

||||||

|

d.SetName("d").ConnectTo(graph.Out(2));

|

||||||

|

e.SetName("e").ConnectTo(graph.Out(3));

|

||||||

|

|

||||||

|

return graph.GetConfig();

|

||||||

|

}

|

||||||

|

```

|

||||||

|

|

|

||||||

|

|

@@ -1,5 +1,6 @@

|

||||||

---

|

---

|

||||||

layout: default

|

layout: forward

|

||||||

|

target: https://developers.google.com/mediapipe/framework/framework_concepts/calculators

|

||||||

title: Calculators

|

title: Calculators

|

||||||

parent: Framework Concepts

|

parent: Framework Concepts

|

||||||

nav_order: 1

|

nav_order: 1

|

||||||

|

|

|

||||||

|

|

@@ -1,5 +1,6 @@

|

||||||

---

|

---

|

||||||

layout: default

|

layout: forward

|

||||||

|

target: https://developers.google.com/mediapipe/framework/framework_concepts/overview

|

||||||

title: Framework Concepts

|

title: Framework Concepts

|

||||||

nav_order: 5

|

nav_order: 5

|

||||||

has_children: true

|

has_children: true

|

||||||

|

|

|

||||||

|

|

@@ -1,5 +1,6 @@

|

||||||

---

|

---

|

||||||

layout: default

|

layout: forward

|

||||||

|

target: https://developers.google.com/mediapipe/framework/framework_concepts/gpu

|

||||||

title: GPU

|

title: GPU

|

||||||

parent: Framework Concepts

|

parent: Framework Concepts

|

||||||

nav_order: 5

|

nav_order: 5

|

||||||

|

|

|

||||||

|

|

@@ -1,5 +1,6 @@

|

||||||

---

|

---

|

||||||

layout: default

|

layout: forward

|

||||||

|

target: https://developers.google.com/mediapipe/framework/framework_concepts/graphs

|

||||||

title: Graphs

|

title: Graphs

|

||||||

parent: Framework Concepts

|

parent: Framework Concepts

|

||||||

nav_order: 2

|

nav_order: 2

|

||||||

|

|

|

||||||

|

|

@@ -1,5 +1,6 @@

|

||||||

---

|

---

|

||||||

layout: default

|

layout: forward

|

||||||

|

target: https://developers.google.com/mediapipe/framework/framework_concepts/packets

|

||||||

title: Packets

|

title: Packets

|

||||||

parent: Framework Concepts

|

parent: Framework Concepts

|

||||||

nav_order: 3

|

nav_order: 3

|

||||||

|

|

|

||||||

|

|

@@ -1,5 +1,6 @@

|

||||||

---

|

---

|

||||||

layout: default

|

layout: forward

|

||||||

|

target: https://developers.google.com/mediapipe/framework/framework_concepts/realtime_streams

|

||||||

title: Real-time Streams

|

title: Real-time Streams

|

||||||

parent: Framework Concepts

|

parent: Framework Concepts

|

||||||

nav_order: 6

|

nav_order: 6

|

||||||

|

|

|

||||||

|

|

@@ -1,5 +1,6 @@

|

||||||

---

|

---

|

||||||

layout: default

|

layout: forward

|

||||||

|

target: https://developers.google.com/mediapipe/framework/framework_concepts/synchronization

|

||||||

title: Synchronization

|

title: Synchronization

|

||||||

parent: Framework Concepts

|

parent: Framework Concepts

|

||||||

nav_order: 4

|

nav_order: 4

|

||||||

|

|

|

||||||

|

|

@@ -13,6 +13,17 @@ nav_order: 2

|

||||||

{:toc}

|

{:toc}

|

||||||

---

|

---

|

||||||

|

|

||||||

|

**Attention:** *Thanks for your interest in MediaPipe! We are moving to

|

||||||

|

[https://developers.google.com/mediapipe](https://developers.google.com/mediapipe)

|

||||||

|

as the primary developer documentation

|

||||||

|

site for MediaPipe starting April 3, 2023. This content will not be moved to

|

||||||

|

the new site, but will remain available in the source code repository on an

|

||||||

|

as-is basis.*

|

||||||

|

|

||||||

|

*This notice and web page will be removed on April 3, 2023.*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

MediaPipe Android Solution APIs (currently in alpha) are available in:

|

MediaPipe Android Solution APIs (currently in alpha) are available in:

|

||||||

|

|

||||||

* [MediaPipe Face Detection](../solutions/face_detection#android-solution-api)

|

* [MediaPipe Face Detection](../solutions/face_detection#android-solution-api)

|

||||||

|

|

|

||||||

|

|

@@ -12,6 +12,17 @@ nav_exclude: true

|

||||||

{:toc}

|

{:toc}

|

||||||

---

|

---

|

||||||

|

|

||||||

|

**Attention:** *Thanks for your interest in MediaPipe! We are moving to

|

||||||

|

[https://developers.google.com/mediapipe](https://developers.google.com/mediapipe)

|

||||||

|

as the primary developer documentation

|

||||||

|

site for MediaPipe starting April 3, 2023. This content will not be moved to

|

||||||

|

the new site, but will remain available in the source code repository on an

|

||||||

|

as-is basis.*

|

||||||

|

|

||||||

|

*This notice and web page will be removed on April 3, 2023.*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

### Android

|

### Android

|

||||||

|

|

||||||

Please see these [instructions](./android.md).

|

Please see these [instructions](./android.md).

|

||||||

|

|

|

||||||

|

|

@@ -1,5 +1,6 @@

|

||||||

---

|

---

|

||||||

layout: default

|

layout: forward

|

||||||

|

target: https://developers.google.com/mediapipe/framework/getting_started/faq

|

||||||

title: FAQ

|

title: FAQ

|

||||||

parent: Getting Started

|

parent: Getting Started

|

||||||

nav_order: 9

|

nav_order: 9

|

||||||

|

|

@@ -59,7 +60,7 @@ The second approach allows up to [`max_in_flight`] invocations of the

|

||||||

packets from [`CalculatorBase::Process`] are automatically ordered by timestamp

|

packets from [`CalculatorBase::Process`] are automatically ordered by timestamp

|

||||||

before they are passed along to downstream calculators.

|

before they are passed along to downstream calculators.

|

||||||

|

|

||||||

With either aproach, you must be aware that the calculator running in parallel

|

With either approach, you must be aware that the calculator running in parallel

|

||||||

cannot maintain internal state in the same way as a normal sequential

|

cannot maintain internal state in the same way as a normal sequential

|

||||||

calculator.

|

calculator.

|

||||||

|

|

||||||

|

|

|

||||||

|

|

@@ -11,3 +11,14 @@ has_children: true

|

||||||

1. TOC

|

1. TOC

|

||||||

{:toc}

|

{:toc}

|

||||||

---

|

---

|

||||||

|

|

||||||

|

**Attention:** *Thanks for your interest in MediaPipe! We are moving to

|

||||||

|

[https://developers.google.com/mediapipe](https://developers.google.com/mediapipe)

|

||||||

|

as the primary developer documentation

|

||||||

|

site for MediaPipe starting April 3, 2023. This content will not be moved to

|

||||||

|

the new site, but will remain available in the source code repository on an

|

||||||

|

as-is basis.*

|

||||||

|

|

||||||

|

*This notice and web page will be removed on April 3, 2023.*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

|

||||||

|

|

@@ -1,5 +1,6 @@

|

||||||

---

|

---

|

||||||

layout: default

|

layout: forward

|

||||||

|

target: https://developers.google.com/mediapipe/framework/getting_started/gpu_support

|

||||||

title: GPU Support

|

title: GPU Support

|

||||||

parent: Getting Started

|

parent: Getting Started

|

||||||

nav_order: 7

|

nav_order: 7

|

||||||

|

|

|

||||||

|

|

@@ -1,5 +1,6 @@

|

||||||

---

|

---

|

||||||

layout: default

|

layout: forward

|

||||||

|

target: https://developers.google.com/mediapipe/framework/getting_started/help

|

||||||

title: Getting Help

|

title: Getting Help

|

||||||

parent: Getting Started

|

parent: Getting Started

|

||||||

nav_order: 8

|

nav_order: 8

|

||||||

|

|

@@ -37,8 +38,8 @@ If you open a GitHub issue, here is our policy:

|

||||||

- **OS Platform and Distribution (e.g., Linux Ubuntu 16.04)**:

|

- **OS Platform and Distribution (e.g., Linux Ubuntu 16.04)**:

|

||||||

- **Mobile device (e.g. iPhone 8, Pixel 2, Samsung Galaxy) if the issue happens on mobile device**:

|

- **Mobile device (e.g. iPhone 8, Pixel 2, Samsung Galaxy) if the issue happens on mobile device**:

|

||||||

- **Bazel version**:

|

- **Bazel version**:

|

||||||

- **Android Studio, NDK, SDK versions (if issue is related to building in mobile dev enviroment)**:

|

- **Android Studio, NDK, SDK versions (if issue is related to building in mobile dev environment)**:

|

||||||

- **Xcode & Tulsi version (if issue is related to building in mobile dev enviroment)**:

|

- **Xcode & Tulsi version (if issue is related to building in mobile dev environment)**:

|

||||||

- **Exact steps to reproduce**:

|

- **Exact steps to reproduce**:

|

||||||

|

|

||||||

### Describe the problem

|

### Describe the problem

|

||||||

|

|

|

||||||

|

|

@@ -1,5 +1,6 @@

|

||||||

---

|

---

|

||||||

layout: default

|

layout: forward

|

||||||

|

target: https://developers.google.com/mediapipe/framework/getting_started/install

|

||||||

title: Installation

|

title: Installation

|

||||||

parent: Getting Started

|

parent: Getting Started

|

||||||

nav_order: 6

|

nav_order: 6

|

||||||

|

|

|

||||||

|

|

@@ -12,6 +12,17 @@ nav_order: 4

|

||||||

{:toc}

|

{:toc}

|

||||||

---

|

---

|

||||||

|

|

||||||

|

**Attention:** *Thanks for your interest in MediaPipe! We are moving to

|

||||||

|

[https://developers.google.com/mediapipe](https://developers.google.com/mediapipe)

|

||||||

|

as the primary developer documentation

|

||||||

|

site for MediaPipe starting April 3, 2023. This content will not be moved to

|

||||||

|

the new site, but will remain available in the source code repository on an

|

||||||

|

as-is basis.*

|

||||||

|

|

||||||

|

*This notice and web page will be removed on April 3, 2023.*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

## Ready-to-use JavaScript Solutions

|

## Ready-to-use JavaScript Solutions

|

||||||

|

|

||||||

MediaPipe currently offers the following solutions:

|

MediaPipe currently offers the following solutions:

|

||||||

|

|

@@ -33,7 +44,7 @@ snippets.

|

||||||

|

|

||||||

| Browser | Platform | Notes |

|

| Browser | Platform | Notes |

|

||||||

| ------- | ----------------------- | -------------------------------------- |

|

| ------- | ----------------------- | -------------------------------------- |

|

||||||

| Chrome | Android / Windows / Mac | Pixel 4 and older unsupported. Fuschia |

|

| Chrome | Android / Windows / Mac | Pixel 4 and older unsupported. Fuchsia |

|

||||||

| | | unsupported. |

|

| | | unsupported. |

|

||||||

| Chrome | iOS | Camera unavailable in Chrome on iOS. |

|

| Chrome | iOS | Camera unavailable in Chrome on iOS. |

|

||||||

| Safari | iPad/iPhone/Mac | iOS and Safari on iPad / iPhone / |

|

| Safari | iPad/iPhone/Mac | iOS and Safari on iPad / iPhone / |

|

||||||

|

|

|

||||||

|

|

@@ -1,5 +1,6 @@

|

||||||

---

|

---

|

||||||

layout: default

|

layout: forward

|

||||||

|

target: https://developers.google.com/mediapipe/framework/getting_started/troubleshooting

|

||||||

title: Troubleshooting

|

title: Troubleshooting

|

||||||

parent: Getting Started

|

parent: Getting Started

|

||||||

nav_order: 10

|

nav_order: 10

|

||||||

|

|

@@ -65,7 +66,7 @@ WARNING: Download from https://storage.googleapis.com/mirror.tensorflow.org/gith

|

||||||

```

|

```

|

||||||

|

|

||||||

usually indicates that Bazel fails to download necessary dependency repositories

|

usually indicates that Bazel fails to download necessary dependency repositories

|

||||||

that MediaPipe needs. MedaiPipe has several dependency repositories that are

|

that MediaPipe needs. MediaPipe has several dependency repositories that are

|

||||||

hosted by Google sites. In some regions, you may need to set up a network proxy

|

hosted by Google sites. In some regions, you may need to set up a network proxy

|

||||||

or use a VPN to access those resources. You may also need to append

|

or use a VPN to access those resources. You may also need to append

|

||||||

`--host_jvm_args "-DsocksProxyHost=<ip address> -DsocksProxyPort=<port number>"`

|

`--host_jvm_args "-DsocksProxyHost=<ip address> -DsocksProxyPort=<port number>"`

|

||||||

|

|

|

||||||

|

|

@@ -19,6 +19,17 @@ ML solutions for live and streaming media.

|

||||||

|

|

|

|

||||||

***Ready-to-use solutions***: *Cutting-edge ML solutions demonstrating full power of the framework* | ***Free and open source***: *Framework and solutions both under Apache 2.0, fully extensible and customizable*

|

***Ready-to-use solutions***: *Cutting-edge ML solutions demonstrating full power of the framework* | ***Free and open source***: *Framework and solutions both under Apache 2.0, fully extensible and customizable*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

|

**Attention:** *Thanks for your interest in MediaPipe! We are moving to

|

||||||

|

[https://developers.google.com/mediapipe](https://developers.google.com/mediapipe)

|

||||||

|

as the primary developer documentation

|

||||||

|

site for MediaPipe starting April 3, 2023.*

|

||||||

|

|

||||||

|

*This notice and web page will be removed on April 3, 2023.*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

## ML solutions in MediaPipe

|

## ML solutions in MediaPipe

|

||||||

|

|

||||||

Face Detection | Face Mesh | Iris | Hands | Pose | Holistic

|

Face Detection | Face Mesh | Iris | Hands | Pose | Holistic

|

||||||

|

|

|

||||||

|

|

@@ -18,6 +18,16 @@ nav_order: 14

|

||||||

</details>

|

</details>

|

||||||

---

|

---

|

||||||

|

|

||||||

|

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

||||||

|

We have ended support for this MediaPipe Legacy Solution as of March 1, 2023.

|

||||||

|

For more information, see the new

|

||||||

|

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||||

|

site.*

|

||||||

|

|

||||||

|

*This notice and web page will be removed on April 3, 2023.*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

## Overview

|

## Overview

|

||||||

|

|

||||||

AutoFlip is an automatic video cropping pipeline built on top of MediaPipe. This

|

AutoFlip is an automatic video cropping pipeline built on top of MediaPipe. This

|

||||||

|

|

|

||||||

|

|

@@ -18,6 +18,16 @@ nav_order: 10

|

||||||

</details>

|

</details>

|

||||||

---

|

---

|

||||||

|

|

||||||

|

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

||||||

|

We have ended support for this MediaPipe Legacy Solution as of March 1, 2023.

|

||||||

|

For more information, see the new

|

||||||

|

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||||

|

site.*

|

||||||

|

|

||||||

|

*This notice and web page will be removed on April 3, 2023.*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

## Overview

|

## Overview

|

||||||

|

|

||||||

MediaPipe Box Tracking has been powering real-time tracking in

|

MediaPipe Box Tracking has been powering real-time tracking in

|

||||||

|

|

|

||||||

|

|

@@ -18,6 +18,16 @@ nav_order: 1

|

||||||

</details>

|

</details>

|

||||||

---

|

---

|

||||||

|

|

||||||

|

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

||||||

|

As of March 1, 2023, this solution is planned to be upgraded to a new MediaPipe

|

||||||

|

Solution. For more information, see the new

|

||||||

|

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||||

|

site.*

|

||||||

|

|

||||||

|

*This notice and web page will be removed on April 3, 2023.*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

## Overview

|

## Overview

|

||||||

|

|

||||||

MediaPipe Face Detection is an ultrafast face detection solution that comes with

|

MediaPipe Face Detection is an ultrafast face detection solution that comes with

|

||||||

|

|

|

||||||

|

|

@@ -18,6 +18,16 @@ nav_order: 2

|

||||||

</details>

|

</details>

|

||||||

---

|

---

|

||||||

|

|

||||||

|

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

||||||

|

As of March 1, 2023, this solution is planned to be upgraded to a new MediaPipe

|

||||||

|

Solution. For more information, see the new

|

||||||

|

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||||

|

site.*

|

||||||

|

|

||||||

|

*This notice and web page will be removed on April 3, 2023.*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

## Overview

|

## Overview

|

||||||

|

|

||||||

MediaPipe Face Mesh is a solution that estimates 468 3D face landmarks in

|

MediaPipe Face Mesh is a solution that estimates 468 3D face landmarks in

|

||||||

|

|

@@ -133,7 +143,7 @@ about the model in this [paper](https://arxiv.org/abs/2006.10962).

|

||||||

The [Face Landmark Model](#face-landmark-model) performs a single-camera face landmark

|

The [Face Landmark Model](#face-landmark-model) performs a single-camera face landmark

|

||||||

detection in the screen coordinate space: the X- and Y- coordinates are

|

detection in the screen coordinate space: the X- and Y- coordinates are

|

||||||

normalized screen coordinates, while the Z coordinate is relative and is scaled

|

normalized screen coordinates, while the Z coordinate is relative and is scaled

|

||||||

as the X coodinate under the

|

as the X coordinate under the

|

||||||

[weak perspective projection camera model](https://en.wikipedia.org/wiki/3D_projection#Weak_perspective_projection).

|

[weak perspective projection camera model](https://en.wikipedia.org/wiki/3D_projection#Weak_perspective_projection).

|

||||||

This format is well-suited for some applications, however it does not directly

|

This format is well-suited for some applications, however it does not directly

|

||||||

enable the full spectrum of augmented reality (AR) features like aligning a

|

enable the full spectrum of augmented reality (AR) features like aligning a

|

||||||

|

|

|

||||||

|

|

@@ -18,6 +18,16 @@ nav_order: 8

|

||||||

</details>

|

</details>

|

||||||

---

|

---

|

||||||

|

|

||||||

|

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

||||||

|

As of March 1, 2023, this solution is planned to be upgraded to a new MediaPipe

|

||||||

|

Solution. For more information, see the new

|

||||||

|

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||||

|

site.*

|

||||||

|

|

||||||

|

*This notice and web page will be removed on April 3, 2023.*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

## Example Apps

|

## Example Apps

|

||||||

|

|

|

||||||

|

|

@@ -18,6 +18,16 @@ nav_order: 4

|

||||||

</details>

|

</details>

|

||||||

---

|

---

|

||||||

|

|

||||||

|

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

||||||

|

As of March 1, 2023, this solution is planned to be upgraded to a new MediaPipe

|

||||||

|

Solution. For more information, see the new

|

||||||

|

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||||

|

site.*

|

||||||

|

|

||||||

|

*This notice and web page will be removed on April 3, 2023.*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

## Overview

|

## Overview

|

||||||

|

|

||||||

The ability to perceive the shape and motion of hands can be a vital component

|

The ability to perceive the shape and motion of hands can be a vital component

|

||||||

|

|

|

||||||

|

|

@@ -18,6 +18,16 @@ nav_order: 6

|

||||||

</details>

|

</details>

|

||||||

---

|

---

|

||||||

|

|

||||||

|

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

||||||

|

As of March 1, 2023, this solution is planned to be upgraded to a new MediaPipe

|

||||||

|

Solution. For more information, see the new

|

||||||

|

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||||

|

site.*

|

||||||

|

|

||||||

|

*This notice and web page will be removed on April 3, 2023.*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

## Overview

|

## Overview

|

||||||

|

|

||||||

Live perception of simultaneous [human pose](./pose.md),

|

Live perception of simultaneous [human pose](./pose.md),

|

||||||

|

|

|

||||||

|

|

@@ -18,6 +18,16 @@ nav_order: 11

|

||||||

</details>

|

</details>

|

||||||

---

|

---

|

||||||

|

|

||||||

|

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

||||||

|

We have ended support for this MediaPipe Legacy Solution as of March 1, 2023.

|

||||||

|

For more information, see the new

|

||||||

|

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||||

|

site.*

|

||||||

|

|

||||||

|

*This notice and web page will be removed on April 3, 2023.*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

## Overview

|

## Overview

|

||||||

|

|

||||||

Augmented Reality (AR) technology creates fun, engaging, and immersive user

|

Augmented Reality (AR) technology creates fun, engaging, and immersive user

|

||||||

|

|

|

||||||

|

|

@@ -18,6 +18,16 @@ nav_order: 3

|

||||||

</details>

|

</details>

|

||||||

---

|

---

|

||||||

|

|

||||||

|

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

||||||

|

As of March 1, 2023, this solution is planned to be upgraded to a new MediaPipe

|

||||||

|

Solution. For more information, see the new

|

||||||

|

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||||

|

site.*

|

||||||

|

|

||||||

|

*This notice and web page will be removed on April 3, 2023.*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

## Overview

|

## Overview

|

||||||

|

|

||||||

A wide range of real-world applications, including computational photography

|

A wide range of real-world applications, including computational photography

|

||||||

|

|

@@ -38,7 +48,7 @@ camera, in real-time, without the need for specialized hardware. Through use of

|

||||||

iris landmarks, the solution is also able to determine the metric distance

|

iris landmarks, the solution is also able to determine the metric distance

|

||||||

between the subject and the camera with relative error less than 10%. Note that

|

between the subject and the camera with relative error less than 10%. Note that

|

||||||

iris tracking does not infer the location at which people are looking, nor does

|

iris tracking does not infer the location at which people are looking, nor does

|

||||||

it provide any form of identity recognition. With the cross-platfrom capability

|

it provide any form of identity recognition. With the cross-platform capability

|

||||||

of the MediaPipe framework, MediaPipe Iris can run on most modern

|

of the MediaPipe framework, MediaPipe Iris can run on most modern

|

||||||

[mobile phones](#mobile), [desktops/laptops](#desktop) and even on the

|

[mobile phones](#mobile), [desktops/laptops](#desktop) and even on the

|

||||||

[web](#web).

|

[web](#web).

|

||||||

|

|

@@ -99,7 +109,7 @@ You can also find more details in this

|

||||||

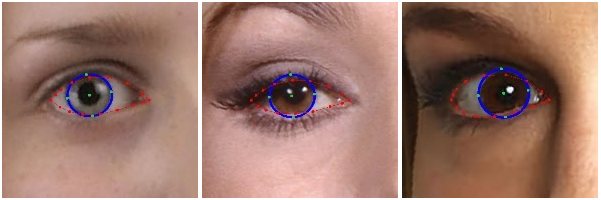

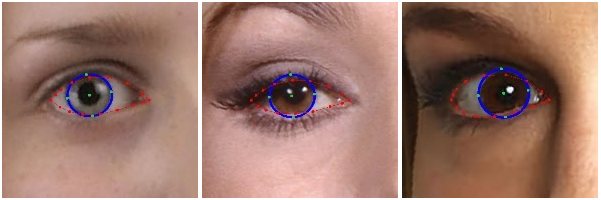

### Iris Landmark Model

|

### Iris Landmark Model

|

||||||

|

|

||||||

The iris model takes an image patch of the eye region and estimates both the eye

|

The iris model takes an image patch of the eye region and estimates both the eye

|

||||||

landmarks (along the eyelid) and iris landmarks (along ths iris contour). You

|

landmarks (along the eyelid) and iris landmarks (along this iris contour). You

|

||||||

can find more details in this [paper](https://arxiv.org/abs/2006.11341).

|

can find more details in this [paper](https://arxiv.org/abs/2006.11341).

|

||||||

|

|

||||||

|

|

|

|

||||||

|

|

|

||||||

|

|

@@ -18,6 +18,16 @@ nav_order: 13

|

||||||

</details>

|

</details>

|

||||||

---

|

---

|

||||||

|

|

||||||

|

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

||||||

|

We have ended support for this MediaPipe Legacy Solution as of March 1, 2023.

|

||||||

|

For more information, see the new

|

||||||

|

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||||

|

site.*

|

||||||

|

|

||||||

|

*This notice and web page will be removed on April 3, 2023.*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

## Overview

|

## Overview

|

||||||

|

|

||||||

MediaPipe KNIFT is a template-based feature matching solution using KNIFT

|

MediaPipe KNIFT is a template-based feature matching solution using KNIFT

|

||||||

|

|

|

||||||

|

|

@@ -18,6 +18,16 @@ nav_order: 15

|

||||||

</details>

|

</details>

|

||||||

---

|

---

|

||||||

|

|

||||||

|

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

||||||

|

We have ended support for this MediaPipe Legacy Solution as of March 1, 2023.

|

||||||

|

For more information, see the new

|

||||||

|

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||||

|

site.*

|

||||||

|

|

||||||

|

*This notice and web page will be removed on April 3, 2023.*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

## Overview

|

## Overview

|

||||||

|

|

||||||

MediaPipe is a useful and general framework for media processing that can

|

MediaPipe is a useful and general framework for media processing that can

|

||||||

|

|

@ -85,7 +95,7 @@ process new data sets, in the documentation of

|

||||||

|

|

||||||

MediaSequence uses SequenceExamples as the format of both inputs and

|

MediaSequence uses SequenceExamples as the format of both inputs and

|

||||||

outputs. Annotations are encoded as inputs in a SequenceExample of metadata

|

outputs. Annotations are encoded as inputs in a SequenceExample of metadata

|

||||||

that defines the labels and the path to the cooresponding video file. This

|

that defines the labels and the path to the corresponding video file. This

|

||||||

metadata is passed as input to the C++ `media_sequence_demo` binary, and the

|

metadata is passed as input to the C++ `media_sequence_demo` binary, and the

|

||||||

output is a SequenceExample filled with images and annotations ready for

|

output is a SequenceExample filled with images and annotations ready for

|

||||||

model training.

|

model training.

|

||||||

|

|

|

||||||

|

|

@ -12,6 +12,20 @@ nav_order: 30

|

||||||

{:toc}

|

{:toc}

|

||||||

---

|

---

|

||||||

|

|

||||||

|

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

||||||

|

We have ended support for

|

||||||

|

[these MediaPipe Legacy Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||||

|

as of March 1, 2023. All other

|

||||||

|

[MediaPipe Legacy Solutions will be upgraded](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||||

|

to a new MediaPipe Solution. The code repository and prebuilt binaries for all

|

||||||

|

MediaPipe Legacy Solutions will continue to be provided on an as-is basis.

|

||||||

|

We encourage you to check out the new MediaPipe Solutions at:

|

||||||

|

[https://developers.google.com/mediapipe/solutions](https://developers.google.com/mediapipe/solutions)*

|

||||||

|

|

||||||

|

*This notice and web page will be removed on April 3, 2023.*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

### [Face Detection](https://google.github.io/mediapipe/solutions/face_detection)

|

### [Face Detection](https://google.github.io/mediapipe/solutions/face_detection)

|

||||||

|

|

||||||

* Short-range model (best for faces within 2 meters from the camera):

|

* Short-range model (best for faces within 2 meters from the camera):

|

||||||

|

|

|

||||||

|

|

@ -18,6 +18,16 @@ nav_order: 9

|

||||||

</details>

|

</details>

|

||||||

---

|

---

|

||||||

|

|

||||||

|

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

||||||

|

As of March 1, 2023, this solution is planned to be upgraded to a new MediaPipe

|

||||||

|

Solution. For more information, see the new

|

||||||

|

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||||

|

site.*

|

||||||

|

|

||||||

|

*This notice and web page will be removed on April 3, 2023.*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

## Example Apps

|

## Example Apps

|

||||||

|

|

|

||||||

|

|

@ -18,6 +18,16 @@ nav_order: 12

|

||||||

</details>

|

</details>

|

||||||

---

|

---

|

||||||

|

|

||||||

|

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

||||||

|

We have ended support for this MediaPipe Legacy Solution as of March 1, 2023.

|

||||||

|

For more information, see the new

|

||||||

|

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||||

|

site.*

|

||||||

|

|

||||||

|

*This notice and web page will be removed on April 3, 2023.*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

## Overview

|

## Overview

|

||||||

|

|

||||||

MediaPipe Objectron is a mobile real-time 3D object detection solution for

|

MediaPipe Objectron is a mobile real-time 3D object detection solution for

|

||||||

|

|

@ -170,7 +180,7 @@ and a

|

||||||

The detection subgraph performs ML inference only once every few frames to

|

The detection subgraph performs ML inference only once every few frames to

|

||||||

reduce computation load, and decodes the output tensor to a FrameAnnotation that

|

reduce computation load, and decodes the output tensor to a FrameAnnotation that

|

||||||

contains nine keypoints: the 3D bounding box's center and its eight vertices.

|

contains nine keypoints: the 3D bounding box's center and its eight vertices.

|

||||||

The tracking subgraph runs every frame, using the box traker in

|

The tracking subgraph runs every frame, using the box tracker in

|

||||||

[MediaPipe Box Tracking](./box_tracking.md) to track the 2D box tightly

|

[MediaPipe Box Tracking](./box_tracking.md) to track the 2D box tightly

|

||||||

enclosing the projection of the 3D bounding box, and lifts the tracked 2D

|

enclosing the projection of the 3D bounding box, and lifts the tracked 2D

|

||||||

keypoints to 3D with

|

keypoints to 3D with

|

||||||

|

|

@ -613,7 +623,7 @@ z_ndc = 1 / Z

|

||||||

|

|

||||||

### Pixel Space

|

### Pixel Space

|

||||||

|

|

||||||

In this API we set upper-left coner of an image as the origin of pixel

|

In this API we set upper-left corner of an image as the origin of pixel

|

||||||

coordinate. One can convert from NDC to pixel space as follows:

|

coordinate. One can convert from NDC to pixel space as follows:

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

|

||||||

|

|

@ -20,6 +20,16 @@ nav_order: 5

|

||||||

</details>

|

</details>

|

||||||

---

|

---

|

||||||

|

|

||||||

|

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

||||||

|

As of March 1, 2023, this solution is planned to be upgraded to a new MediaPipe

|

||||||

|

Solution. For more information, see the new

|

||||||

|

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||||

|

site.*

|

||||||

|

|

||||||

|

*This notice and web page will be removed on April 3, 2023.*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

## Overview

|

## Overview

|

||||||

|

|

||||||

Human pose estimation from video plays a critical role in various applications

|

Human pose estimation from video plays a critical role in various applications

|

||||||

|

|

|

||||||

|

|

@ -19,6 +19,16 @@ nav_order: 1

|

||||||

</details>

|

</details>

|

||||||

---

|

---

|

||||||

|

|

||||||

|

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

||||||

|

As of March 1, 2023, this solution is planned to be upgraded to a new MediaPipe

|

||||||

|

Solution. For more information, see the new

|

||||||

|

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||||

|

site.*

|

||||||

|

|

||||||

|

*This notice and web page will be removed on April 3, 2023.*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

## Overview

|

## Overview

|

||||||

|

|

||||||

One of the applications

|

One of the applications

|

||||||

|

|

|

||||||

|

|

@ -18,6 +18,16 @@ nav_order: 7

|

||||||

</details>

|

</details>

|

||||||

---

|

---

|

||||||

|

|

||||||

|

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

||||||

|

As of March 1, 2023, this solution is planned to be upgraded to a new MediaPipe

|

||||||

|

Solution. For more information, see the new

|

||||||

|

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||||

|

site.*

|

||||||

|

|

||||||

|

*This notice and web page will be removed on April 3, 2023.*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

## Overview

|

## Overview

|

||||||

|

|

||||||

*Fig 1. Example of MediaPipe Selfie Segmentation.* |

|

*Fig 1. Example of MediaPipe Selfie Segmentation.* |

|

||||||

|

|

|

||||||

|

|

@ -13,7 +13,21 @@ has_toc: false

|

||||||

{:toc}

|

{:toc}

|

||||||

---

|

---

|

||||||

|

|

||||||

Note: These solutions are no longer actively maintained. Consider using or migrating to the new [MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/guide).

|

**Attention:** *Thank you for your interest in MediaPipe Solutions. We have

|

||||||

|

ended support for

|

||||||

|

[these MediaPipe Legacy Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||||

|

as of March 1, 2023. All other

|

||||||

|

[MediaPipe Legacy Solutions will be upgraded](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||||

|

to a new MediaPipe Solution. The

|

||||||

|

[code repository](https://github.com/google/mediapipe/tree/master/mediapipe)

|

||||||

|

and prebuilt binaries for all MediaPipe Legacy Solutions will continue to

|

||||||

|

be provided on an as-is basis. We encourage you to check out the new MediaPipe

|

||||||

|

Solutions at:

|

||||||

|

[https://developers.google.com/mediapipe/solutions](https://developers.google.com/mediapipe/solutions)*

|

||||||

|

|

||||||

|

*This notice and web page will be removed on June 1, 2023.*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

MediaPipe offers open source cross-platform, customizable ML solutions for live

|

MediaPipe offers open source cross-platform, customizable ML solutions for live

|

||||||

and streaming media.

|

and streaming media.

|

||||||

|

|

|

||||||

|

|

@ -18,6 +18,16 @@ nav_order: 16

|

||||||

</details>

|

</details>

|

||||||

---

|

---

|

||||||

|

|

||||||

|

**Attention:** *Thank you for your interest in MediaPipe Solutions.

|

||||||

|

We have ended support for this MediaPipe Legacy Solution as of March 1, 2023.

|

||||||

|

For more information, see the new

|

||||||

|

[MediaPipe Solutions](https://developers.google.com/mediapipe/solutions/guide#legacy)

|

||||||

|

site.*

|

||||||

|

|

||||||

|

*This notice and web page will be removed on April 3, 2023.*

|

||||||

|

|

||||||

|

----

|

||||||

|

|

||||||

MediaPipe is a useful and general framework for media processing that can assist

|

MediaPipe is a useful and general framework for media processing that can assist

|

||||||

with research, development, and deployment of ML models. This example focuses on

|

with research, development, and deployment of ML models. This example focuses on

|

||||||

model development by demonstrating how to prepare training data and do model

|

model development by demonstrating how to prepare training data and do model

|

||||||

|

|

|

||||||

|

|

@ -1,5 +1,6 @@

|

||||||

---

|

---

|

||||||

layout: default

|

layout: forward

|

||||||

|

target: https://developers.google.com/mediapipe/framework/tools/visualizer

|

||||||

title: Visualizer

|

title: Visualizer

|

||||||

parent: Tools

|

parent: Tools

|

||||||

nav_order: 1

|

nav_order: 1

|

||||||

|

|

|

||||||

|

|

@ -48,7 +48,6 @@ class MergeToVectorCalculator : public Node {

|

||||||

}

|

}

|

||||||

|

|

||||||

absl::Status Process(CalculatorContext* cc) {

|

absl::Status Process(CalculatorContext* cc) {

|

||||||

const int input_num = kIn(cc).Count();

|

|

||||||

std::vector<T> output_vector;

|

std::vector<T> output_vector;

|

||||||

for (auto it = kIn(cc).begin(); it != kIn(cc).end(); it++) {

|

for (auto it = kIn(cc).begin(); it != kIn(cc).end(); it++) {

|

||||||

const auto& elem = *it;

|

const auto& elem = *it;

|

||||||

|

|

|

||||||

|

|

@ -13,6 +13,7 @@

|

||||||

# limitations under the License.

|

# limitations under the License.

|

||||||

#

|

#

|

||||||

|

|

||||||

|

load("@bazel_skylib//lib:selects.bzl", "selects")

|

||||||

load("//mediapipe/framework/port:build_config.bzl", "mediapipe_proto_library")

|

load("//mediapipe/framework/port:build_config.bzl", "mediapipe_proto_library")

|

||||||

load("//mediapipe/framework:mediapipe_register_type.bzl", "mediapipe_register_type")

|

load("//mediapipe/framework:mediapipe_register_type.bzl", "mediapipe_register_type")

|

||||||

|

|

||||||

|

|

@ -23,6 +24,14 @@ package(

|

||||||

|

|

||||||

licenses(["notice"])

|

licenses(["notice"])

|

||||||

|

|

||||||

|

selects.config_setting_group(

|

||||||

|

name = "ios_or_disable_gpu",

|

||||||

|

match_any = [

|

||||||

|

"//mediapipe/gpu:disable_gpu",

|

||||||

|

"//mediapipe:ios",

|

||||||

|

],

|

||||||

|

)

|

||||||

|

|

||||||

mediapipe_proto_library(

|

mediapipe_proto_library(

|

||||||

name = "detection_proto",

|

name = "detection_proto",

|

||||||

srcs = ["detection.proto"],

|

srcs = ["detection.proto"],

|

||||||

|

|

@ -336,9 +345,7 @@ cc_library(

|

||||||

"//conditions:default": [

|

"//conditions:default": [

|

||||||

"//mediapipe/gpu:gl_texture_buffer",

|

"//mediapipe/gpu:gl_texture_buffer",

|

||||||

],

|

],

|

||||||

"//mediapipe:ios": [

|

"ios_or_disable_gpu": [],

|

||||||

],

|

|

||||||

"//mediapipe/gpu:disable_gpu": [],

|

|

||||||

}) + select({

|

}) + select({

|

||||||

"//conditions:default": [],

|

"//conditions:default": [],

|

||||||

"//mediapipe:apple": [

|

"//mediapipe:apple": [

|

||||||

|

|

|

||||||

|

|

@ -18,15 +18,16 @@

|

||||||

|

|

||||||

#include "absl/strings/str_cat.h"

|

#include "absl/strings/str_cat.h"

|

||||||

#include "absl/strings/str_join.h"

|

#include "absl/strings/str_join.h"

|

||||||

|

#include "absl/strings/string_view.h"

|

||||||

|

|

||||||

namespace mediapipe {

|

namespace mediapipe {

|

||||||

namespace tool {

|

namespace tool {

|

||||||

|

|

||||||

absl::Status StatusInvalid(const std::string& message) {

|

absl::Status StatusInvalid(absl::string_view message) {

|

||||||

return absl::Status(absl::StatusCode::kInvalidArgument, message);

|

return absl::Status(absl::StatusCode::kInvalidArgument, message);

|

||||||

}

|

}

|

||||||

|

|

||||||

absl::Status StatusFail(const std::string& message) {

|

absl::Status StatusFail(absl::string_view message) {

|

||||||

return absl::Status(absl::StatusCode::kUnknown, message);

|

return absl::Status(absl::StatusCode::kUnknown, message);

|

||||||

}

|

}

|

||||||

|

|

||||||

|

|

@ -35,12 +36,12 @@ absl::Status StatusStop() {

|

||||||

"mediapipe::tool::StatusStop()");

|

"mediapipe::tool::StatusStop()");

|

||||||

}

|

}

|

||||||

|

|

||||||

absl::Status AddStatusPrefix(const std::string& prefix,

|

absl::Status AddStatusPrefix(absl::string_view prefix,

|

||||||

const absl::Status& status) {

|

const absl::Status& status) {

|

||||||

return absl::Status(status.code(), absl::StrCat(prefix, status.message()));

|

return absl::Status(status.code(), absl::StrCat(prefix, status.message()));

|

||||||

}

|

}

|

||||||

|

|

||||||

absl::Status CombinedStatus(const std::string& general_comment,

|

absl::Status CombinedStatus(absl::string_view general_comment,

|

||||||

const std::vector<absl::Status>& statuses) {

|

const std::vector<absl::Status>& statuses) {

|

||||||

// The final error code is absl::StatusCode::kUnknown if not all

|

// The final error code is absl::StatusCode::kUnknown if not all

|

||||||

// the error codes are the same. Otherwise it is the same error code

|

// the error codes are the same. Otherwise it is the same error code

|

||||||

|

|

|

||||||

|

|

@ -19,6 +19,7 @@

|

||||||

#include <vector>

|

#include <vector>

|

||||||

|

|

||||||

#include "absl/base/macros.h"

|

#include "absl/base/macros.h"

|

||||||

|

#include "absl/strings/string_view.h"

|

||||||

#include "mediapipe/framework/port/status.h"

|

#include "mediapipe/framework/port/status.h"

|

||||||

|

|

||||||

namespace mediapipe {

|

namespace mediapipe {

|

||||||

|

|

@ -34,16 +35,16 @@ absl::Status StatusStop();

|

||||||

// Return a status which signals an invalid initial condition (for

|

// Return a status which signals an invalid initial condition (for

|

||||||

// example an InputSidePacket does not include all necessary fields).

|

// example an InputSidePacket does not include all necessary fields).

|

||||||

ABSL_DEPRECATED("Use absl::InvalidArgumentError(error_message) instead.")

|

ABSL_DEPRECATED("Use absl::InvalidArgumentError(error_message) instead.")

|

||||||

absl::Status StatusInvalid(const std::string& error_message);

|

absl::Status StatusInvalid(absl::string_view error_message);

|

||||||

|

|

||||||

// Return a status which signals that something unexpectedly failed.

|

// Return a status which signals that something unexpectedly failed.

|

||||||

ABSL_DEPRECATED("Use absl::UnknownError(error_message) instead.")

|

ABSL_DEPRECATED("Use absl::UnknownError(error_message) instead.")

|

||||||

absl::Status StatusFail(const std::string& error_message);

|

absl::Status StatusFail(absl::string_view error_message);

|

||||||

|

|

||||||

// Prefixes the given string to the error message in status.

|

// Prefixes the given string to the error message in status.

|

||||||

// This function should be considered internal to the framework.

|

// This function should be considered internal to the framework.

|

||||||

// TODO Replace usage of AddStatusPrefix with util::Annotate().

|

// TODO Replace usage of AddStatusPrefix with util::Annotate().

|

||||||

absl::Status AddStatusPrefix(const std::string& prefix,

|

absl::Status AddStatusPrefix(absl::string_view prefix,

|

||||||

const absl::Status& status);

|

const absl::Status& status);

|

||||||

|

|

||||||

// Combine a vector of absl::Status into a single composite status.

|

// Combine a vector of absl::Status into a single composite status.

|

||||||

|

|

@ -51,7 +52,7 @@ absl::Status AddStatusPrefix(const std::string& prefix,

|

||||||

// will be returned.

|

// will be returned.

|

||||||

// This function should be considered internal to the framework.

|

// This function should be considered internal to the framework.

|

||||||

// TODO Move this function to somewhere with less visibility.

|

// TODO Move this function to somewhere with less visibility.

|

||||||

absl::Status CombinedStatus(const std::string& general_comment,

|

absl::Status CombinedStatus(absl::string_view general_comment,

|

||||||

const std::vector<absl::Status>& statuses);

|

const std::vector<absl::Status>& statuses);

|

||||||

|

|

||||||

} // namespace tool

|

} // namespace tool

|

||||||

|

|

|

||||||

|

|

@ -15,7 +15,9 @@

|

||||||

package com.google.mediapipe.components;

|

package com.google.mediapipe.components;

|

||||||

|

|

||||||

import static java.lang.Math.max;

|

import static java.lang.Math.max;

|

||||||

|

import static java.lang.Math.min;

|

||||||

|

|

||||||

|

import android.graphics.Bitmap;

|

||||||

import android.graphics.SurfaceTexture;

|

import android.graphics.SurfaceTexture;

|

||||||

import android.opengl.GLES11Ext;

|

import android.opengl.GLES11Ext;

|

||||||

import android.opengl.GLES20;

|

import android.opengl.GLES20;

|

||||||

|

|

@ -25,9 +27,12 @@ import android.util.Log;

|

||||||

import com.google.mediapipe.framework.TextureFrame;

|

import com.google.mediapipe.framework.TextureFrame;

|

||||||

import com.google.mediapipe.glutil.CommonShaders;

|

import com.google.mediapipe.glutil.CommonShaders;

|

||||||

import com.google.mediapipe.glutil.ShaderUtil;

|

import com.google.mediapipe.glutil.ShaderUtil;

|

||||||

|

import java.nio.ByteBuffer;

|

||||||

|

import java.nio.ByteOrder;

|

||||||

import java.nio.FloatBuffer;

|

import java.nio.FloatBuffer;

|

||||||

import java.util.HashMap;

|

import java.util.HashMap;

|

||||||

import java.util.Map;

|

import java.util.Map;

|

||||||

|

import java.util.concurrent.atomic.AtomicBoolean;

|

||||||

import java.util.concurrent.atomic.AtomicReference;

|

import java.util.concurrent.atomic.AtomicReference;

|

||||||

import javax.microedition.khronos.egl.EGLConfig;

|

import javax.microedition.khronos.egl.EGLConfig;

|

||||||

import javax.microedition.khronos.opengles.GL10;

|

import javax.microedition.khronos.opengles.GL10;

|

||||||

|

|

@ -44,6 +49,13 @@ import javax.microedition.khronos.opengles.GL10;

|

||||||

* {@link TextureFrame} (call {@link #setNextFrame(TextureFrame)}).

|

* {@link TextureFrame} (call {@link #setNextFrame(TextureFrame)}).

|

||||||

*/

|

*/

|

||||||

public class GlSurfaceViewRenderer implements GLSurfaceView.Renderer {

|

public class GlSurfaceViewRenderer implements GLSurfaceView.Renderer {

|

||||||

|

/**

|

||||||

|

* Listener for Bitmap capture requests.

|

||||||

|

*/

|

||||||

|

public interface BitmapCaptureListener {

|

||||||

|

void onBitmapCaptured(Bitmap result);

|

||||||

|

}

|

||||||

|

|

||||||

private static final String TAG = "DemoRenderer";

|

private static final String TAG = "DemoRenderer";

|

||||||

private static final int ATTRIB_POSITION = 1;

|

private static final int ATTRIB_POSITION = 1;

|

||||||

private static final int ATTRIB_TEXTURE_COORDINATE = 2;

|

private static final int ATTRIB_TEXTURE_COORDINATE = 2;

|

||||||

|

|

@ -56,12 +68,32 @@ public class GlSurfaceViewRenderer implements GLSurfaceView.Renderer {

|

||||||

private int frameUniform;

|

private int frameUniform;

|

||||||

private int textureTarget = GLES11Ext.GL_TEXTURE_EXTERNAL_OES;

|

private int textureTarget = GLES11Ext.GL_TEXTURE_EXTERNAL_OES;

|

||||||

private int textureTransformUniform;

|

private int textureTransformUniform;

|

||||||

|

private boolean shouldFitToWidth = false;

|

||||||

// Controls the alignment between frame size and surface size, 0.5f default is centered.

|

// Controls the alignment between frame size and surface size, 0.5f default is centered.

|

||||||

private float alignmentHorizontal = 0.5f;

|

private float alignmentHorizontal = 0.5f;

|

||||||

private float alignmentVertical = 0.5f;

|

private float alignmentVertical = 0.5f;

|

||||||

private float[] textureTransformMatrix = new float[16];

|

private float[] textureTransformMatrix = new float[16];

|

||||||

private SurfaceTexture surfaceTexture = null;

|

private SurfaceTexture surfaceTexture = null;

|

||||||

private final AtomicReference<TextureFrame> nextFrame = new AtomicReference<>();

|

private final AtomicReference<TextureFrame> nextFrame = new AtomicReference<>();

|

||||||

|

private final AtomicBoolean captureNextFrameBitmap = new AtomicBoolean();

|

||||||

|

private BitmapCaptureListener bitmapCaptureListener;

|

||||||

|

|

||||||

|

/**

|

||||||

|

* Sets the {@link BitmapCaptureListener}.

|

||||||

|

*/

|

||||||

|

public void setBitmapCaptureListener(BitmapCaptureListener bitmapCaptureListener) {

|

||||||

|

this.bitmapCaptureListener = bitmapCaptureListener;

|

||||||

|

}

|

||||||

|

|

||||||

|

/**

|

||||||

|

* Request to capture Bitmap of the next frame.

|

||||||

|

*

|

||||||

|

* The result will be provided to the {@link BitmapCaptureListener} if one is set. Please note

|

||||||

|

* this is an expensive operation and the result may not be available for a while.

|

||||||

|

*/

|

||||||

|

public void captureNextFrameBitmap() {

|

||||||

|

captureNextFrameBitmap.set(true);

|

||||||

|

}

|

||||||

|

|

||||||

@Override

|

@Override

|

||||||

public void onSurfaceCreated(GL10 gl, EGLConfig config) {

|

public void onSurfaceCreated(GL10 gl, EGLConfig config) {

|

||||||

|

|

@ -147,6 +179,31 @@ public class GlSurfaceViewRenderer implements GLSurfaceView.Renderer {

|

||||||

|

|

||||||

GLES20.glDrawArrays(GLES20.GL_TRIANGLE_STRIP, 0, 4);

|

GLES20.glDrawArrays(GLES20.GL_TRIANGLE_STRIP, 0, 4);

|

||||||

ShaderUtil.checkGlError("glDrawArrays");

|

ShaderUtil.checkGlError("glDrawArrays");

|

||||||

|

|

||||||

|

// Capture Bitmap if requested.

|

||||||

|

BitmapCaptureListener bitmapCaptureListener = this.bitmapCaptureListener;

|

||||||

|

if (captureNextFrameBitmap.getAndSet(false) && bitmapCaptureListener != null) {

|

||||||

|

int bitmapSize = surfaceWidth * surfaceHeight;

|

||||||

|

ByteBuffer byteBuffer = ByteBuffer.allocateDirect(bitmapSize * 4);

|

||||||

|

byteBuffer.order(ByteOrder.nativeOrder());

|

||||||

|

GLES20.glReadPixels(

|

||||||

|

0, 0, surfaceWidth, surfaceHeight, GLES20.GL_RGBA, GLES20.GL_UNSIGNED_BYTE, byteBuffer);

|

||||||

|